You've been using ChatGPT to draft that important email, haven't you? On your personal account, the one you signed up for six months ago. Maybe you pasted in confidential project details to get the tone right. Or uploaded meeting notes to create better summaries. Perhaps you fed it customer conversations to craft more persuasive responses. It felt productive. It felt harmless. After all, you're just trying to do your job better.

Here's what you might not realize: Every piece of business data you've shared with your personal AI account now lives on systems you don't control, governed by terms you probably didn't read, accessible in ways you might not imagine. That competitive strategy you refined? It's in your conversation history. Those customer insights? Stored on third-party servers. That financial model? Sitting in an account that has nothing to do with your company's security policies.

You're not alone. Across mid-market companies, this same pattern is playing out thousands of times daily. Marketing teams using personal Claude accounts to analyze proprietary campaigns. Sales directors feeding deal data into ChatGPT to craft proposals. Finance teams uploading sensitive spreadsheets to Gemini for analysis. HR managers drafting performance reviews with AI assistance using their personal accounts. None of them intending to create security risks. All of them inadvertently building what security professionals call "shadow AI"—the unauthorized use of AI tools outside organizational governance.

The problem isn't AI itself. AI genuinely makes work better, faster, more effective. The problem is the gap between how employees are using AI and how organizations are governing it. While companies debate AI strategy and pilot programs, their employees have already decided: they're using personal AI accounts to get work done, and they're sharing sensitive business information to do it.

This isn't malicious. It's inevitable. When powerful tools are freely available and genuinely useful, people will use them—especially when their organizations haven't provided alternatives. But what works for personal productivity creates enormous problems for organizational security, compliance, and competitive positioning. Every conversation with a personal AI account is a potential data leak. Every prompt containing customer information is a compliance violation waiting to happen. Every strategic discussion saved in a personal chat history is intellectual property sitting outside your security perimeter.

If you've used personal AI accounts for work—and statistically, you probably have—this isn't about guilt. It's about understanding the risks you didn't know you were taking and the solutions that can let you keep using AI productively without creating liabilities your company can't afford.

The Hidden Costs of Personal AI Accounts in Business

When you use personal AI accounts for work, you're making implicit assumptions about data handling that don't match reality. Most people think of AI conversations as private, temporary, and inconsequential—like thinking out loud. But personal AI accounts aren't thinking out loud. They're permanent records stored on third-party systems with terms of service most users never read and data handling practices they don't understand.

The first cost is intellectual property exposure. Personal AI accounts weren't designed for business use, which means they lack the data isolation and ownership guarantees that enterprise software provides. When you use a personal ChatGPT, Claude, or Gemini account, you're subject to consumer terms of service, not business agreements. While major AI providers have improved their data practices—many no longer train on personal account conversations—your data still resides on their systems, subject to their retention policies, their security practices, and potentially their legal obligations to disclose information.

Consider what happens when you use your personal AI account to refine a competitive analysis. You upload market research, customer feedback, competitor pricing, and strategic recommendations. Even if the AI provider doesn't train on this data, it now exists as a record on their systems. If you leave your company and join a competitor, you still have access to that personal account with all those conversations. The intellectual property your company paid to develop is now accessible to your competitor through a personal login they never knew existed.

The second cost is compliance risk. Every industry with data protection requirements—healthcare (HIPAA), finance (GLBA, SOX), education (FERPA), government contracting (CMMC)—has specific requirements about how sensitive data is handled, where it's stored, and who can access it. Personal AI accounts don't meet these requirements. They're consumer products designed for general use, not regulated business processes.

When your healthcare administrator uses a personal AI account to draft patient communication templates and includes real patient scenarios as examples, that's a HIPAA violation. When your finance team member uploads transaction data to analyze fraud patterns using a personal account, that's a regulatory compliance failure. When your government contractor feeds classified information into a personal AI chatbot to help write proposals, that's a security breach that could cost your contract and your clearance.

The compliance risk isn't theoretical. Regulatory bodies are beginning to scrutinize AI use, and they're finding that many organizations can't account for where their sensitive data went. When regulators audit your data handling practices, "we told employees not to use personal AI accounts" isn't a defense—especially when your conversation logs and file uploads prove they did anyway.

The third cost is the lack of audit trails and governance. In business contexts, you need to know who accessed what data, when, and for what purpose. This isn't paranoia; it's basic information security. When something goes wrong—a data breach, a compliance investigation, a legal discovery request—you need forensic evidence of what happened.

Personal AI accounts don't provide this. You can't monitor what your employees are sharing with their personal ChatGPT or Claude accounts. You can't audit their conversation histories. You can't enforce data handling policies or restrict what information gets uploaded. You don't even know the accounts exist unless employees voluntarily disclose them, which they rarely do because they don't realize they're creating problems.

This blind spot in your security posture creates existential risks. You can't protect data you don't know is being shared. You can't respond to incidents you don't know occurred. You can't demonstrate compliance with requirements you can't audit. The shadow AI problem isn't just about current risks—it's about discovering months or years later that your data has been exposed in ways you never knew were happening.

The fourth cost is fragmented organizational learning. When employees use personal AI accounts, their discoveries, techniques, and insights remain personal. The prompt engineering strategies that work for generating customer emails, the approaches that improve data analysis, the methods that accelerate research—all of these stay trapped in individual workflows, inaccessible to colleagues who could benefit from them.

This fragmentation is particularly costly for mid-market companies that need every employee working at peak efficiency. When one person discovers that AI can reduce proposal writing time by 60%, but that knowledge never spreads beyond their personal use, the organization loses massive potential productivity gains. When another person develops effective techniques for using AI in customer service, but those techniques remain locked in their personal account's chat history, the company pays the learning curve cost multiple times over.

Personal AI accounts create knowledge silos that organizational AI platforms solve. But until organizations provide legitimate alternatives, employees will continue building their expertise in isolated personal accounts, and companies will continue paying the cost of fragmented learning.

The Data Security Reality: What Actually Happens to Your Business Data

Understanding the shadow AI risk requires understanding what actually happens when you use personal AI accounts for work. The specifics vary by provider and account type, but the general pattern holds: personal AI accounts prioritize user convenience over organizational control, which creates data handling practices incompatible with business security requirements.

When you upload a document to a personal AI account—say, a confidential client proposal—that document gets processed by the AI provider's systems. The document content is analyzed, stored for conversation context, and retained according to the provider's data retention policies. Most major providers now commit to not training AI models on personal account data, which is an improvement from earlier practices. But "not training on your data" isn't the same as "not storing your data" or "giving you control over your data."

The document remains accessible in your conversation history, typically indefinitely unless you manually delete it. If your account is compromised—through phishing, credential stuffing, or any other attack vector—that client proposal is now accessible to unauthorized parties. If you leave your company and retain access to your personal account (which you will, because it's personal), you retain access to all those business documents and conversations.

But the data security concerns go beyond retention and access. They extend to jurisdiction and legal obligations. Where is your data stored? Personal AI providers typically use cloud infrastructure across multiple geographic regions. Your data might physically reside in data centers your organization would never approve for business data storage. If you're subject to data residency requirements—common in EU markets, financial services, and government contracting—personal AI accounts almost certainly violate them.

What legal obligations does the AI provider have regarding your data? Personal AI accounts are governed by consumer terms of service, which typically give providers broad rights to access customer data for various purposes: system maintenance, security investigations, legal compliance, and more. If the provider receives a subpoena for user data, they're obligated to respond, and your business information is subject to disclosure without your knowledge or consent.

Consider a practical scenario: your HR manager uses a personal AI account to draft performance review templates, including anonymized (she thinks) examples from actual reviews to make the templates more realistic. Three months later, a former employee files a discrimination lawsuit. During legal discovery, the plaintiff's attorney subpoenas the AI provider for any data related to the former employee's name. The provider searches their systems, finds the "anonymized" examples in your HR manager's conversation history, and produces them as evidence. You've just disclosed internal personnel documents you never intended to share, through a system you never authorized, in a legal proceeding you thought was under control.

This isn't hypothetical fear-mongering. It's the logical consequence of using consumer products for business processes without proper governance.

The Compliance Nightmare: When Good Intentions Meet Regulatory Reality

Every regulated industry faces a version of this problem, but healthcare, finance, and education face particularly acute risks because their regulations explicitly require specific data handling practices that personal AI accounts cannot meet.

Healthcare organizations subject to HIPAA must ensure that any vendor whose systems process Protected Health Information (PHI) has signed a Business Associate Agreement (BAA). Personal AI accounts don't have BAAs. They're consumer products that explicitly disclaim HIPAA compliance. When healthcare workers use personal AI accounts to help with patient communication, appointment scheduling, or clinical documentation, even with information they believe is de-identified, they risk creating HIPAA violations.

The compliance failure isn't always obvious to employees. A nurse using ChatGPT to help write patient education materials might include realistic patient scenarios to make the AI's output more relevant. She's not putting in patient names or medical record numbers—she knows that would be wrong. But HIPAA's definition of PHI is broad, and combinations of age, gender, diagnosis, and treatment details can be individually identifying even without explicit identifiers. The nurse thinks she's being careful. She's actually creating compliance violations that could result in significant fines and required breach notifications.
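
The nurse's mistake can be made concrete with a small sketch. The records below are invented for illustration, and the check is deliberately simple: it only counts how many rows share the same combination of attributes. It is not a HIPAA de-identification method, just a way to see why a row with no name attached can still point to one person.

```python
from collections import Counter

# Hypothetical, made-up records: no names or record numbers, only the kind
# of attributes someone might paste into a prompt as a "realistic scenario".
records = [
    {"age": 34, "gender": "F", "diagnosis": "asthma"},
    {"age": 34, "gender": "F", "diagnosis": "asthma"},
    {"age": 71, "gender": "M", "diagnosis": "rare lymphoma"},
    {"age": 52, "gender": "F", "diagnosis": "type 2 diabetes"},
    {"age": 52, "gender": "F", "diagnosis": "type 2 diabetes"},
]

QUASI_IDENTIFIERS = ("age", "gender", "diagnosis")


def unique_combinations(rows, keys=QUASI_IDENTIFIERS):
    """Return the attribute combinations that occur exactly once."""
    counts = Counter(tuple(row[k] for k in keys) for row in rows)
    return [combo for combo, n in counts.items() if n == 1]


# Any combination listed here describes a single record in the data set.
print(unique_combinations(records))
```

In this toy data set the 71-year-old man with a rare lymphoma is the only record with that combination, so the "anonymous" scenario describes exactly one patient.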

Financial services face similar issues with GLBA, SOX, and various state financial privacy laws. When financial advisors use personal AI accounts to analyze client portfolios, create investment recommendations, or draft financial plans, they're potentially violating multiple regulatory requirements around data protection, record retention, and client privacy. The fact that they're using AI to do their jobs better doesn't change the regulatory requirement to maintain proper data controls.

Educational institutions subject to FERPA face parallel problems. When teachers use personal AI accounts to help write student feedback, generate quiz questions based on class performance patterns, or analyze learning outcomes, they're potentially disclosing education records in violation of federal law—even if they think they're anonymizing the data.

The compliance nightmare extends beyond industry-specific regulations to general data protection laws like GDPR, CCPA, and their international equivalents. These laws establish specific requirements for data processing, including purpose limitation (only using data for specified purposes), data minimization (only collecting necessary data), and data subject rights (individuals' rights to access and delete their data).

When employees use personal AI accounts for work, they're potentially violating all of these principles. They're using customer or employee data for purposes beyond what was originally disclosed. They're sharing more data than necessary by including contextual information to make AI outputs more relevant. And they're creating data copies that exist outside your organization's data governance processes, making it impossible to respond properly to data subject access or deletion requests.

The regulatory risk landscape is evolving rapidly as governments recognize AI's data handling implications. New regulations specifically addressing AI use are emerging in the EU (AI Act), various US states, and other jurisdictions worldwide. Organizations that haven't established proper governance over AI use—including shadow AI through personal accounts—will find themselves on the wrong side of these regulations, facing not just fines but also mandatory compliance programs and restrictions on AI use that could severely limit their competitive capabilities.

The Strategic Risk: When Your Competitive Intelligence Becomes Common Knowledge

Beyond immediate security and compliance concerns, personal AI account use creates strategic risks that may not manifest for months or years but can be devastating when they do. These risks center on competitive intelligence leakage—the gradual dissemination of your strategic thinking, market insights, and competitive advantages through fragmented AI conversations that no one is tracking or protecting.

Strategy development is inherently conversational. Executives and strategists think out loud, test ideas, refine approaches, and explore scenarios. AI tools are extraordinarily useful for this work—they're always available, never judgmental, and excellent at playing devil's advocate or generating alternatives. It's natural that strategic leaders gravitate toward using AI for strategic thinking. But when they use personal accounts, every strategic conversation creates a permanent record outside your organization's control.

Consider how strategy develops in practice. Your VP of Product uses a personal Claude account to explore potential product directions, uploading competitive analysis, customer research, and market sizing models. Your VP of Sales uses ChatGPT to refine go-to-market strategies, including territory analysis, pricing strategies, and competitive positioning. Your CEO uses Gemini to work through M&A scenarios, including target company analysis, valuation approaches, and integration planning.

Each of these conversations represents strategic intelligence you've invested heavily to develop. Market research costs money. Competitive analysis requires expertise. Strategic frameworks take time to build and refine. All of this intellectual capital is now distributed across personal AI accounts that you can't monitor, can't protect, and can't control if employees leave or if accounts are compromised.

The strategic risk compounds when employees move between companies. Unlike internal documents that remain on company systems when employees leave, personal AI account conversations stay with the employee. When your VP of Product joins a competitor, they take their personal Claude account with them—including all those strategic conversations about your future product direction. When your VP of Sales moves to a new company, their ChatGPT conversation history moves with them, complete with detailed analysis of your go-to-market strategies.

This isn't necessarily intentional theft. Former employees might not even remember what they discussed in their AI conversations months ago. But the information persists, searchable and accessible, ready to unconsciously influence their strategic thinking in their new role. Your competitive intelligence becomes your competitor's competitive intelligence, not through industrial espionage but through the natural portability of personal AI accounts.

The strategic risk extends beyond individual employee mobility to broader competitive intelligence gathering. In an era where competitive intelligence firms are increasingly sophisticated, personal AI accounts create new attack surfaces. Social engineering doesn't need to compromise your corporate email anymore—it can target personal AI accounts that employees use for work. Credential stuffing attacks that compromise personal accounts don't just expose personal information—they expose business strategy.

The Path Forward: Enterprise AI Solutions with Proper Governance

The solution to shadow AI isn't banning personal AI accounts—that's neither realistic nor effective. Employees will use the tools that help them do their jobs, with or without permission. The solution is providing legitimate, properly governed alternatives that are as convenient as personal accounts but designed for business use with appropriate security, compliance, and administrative controls.

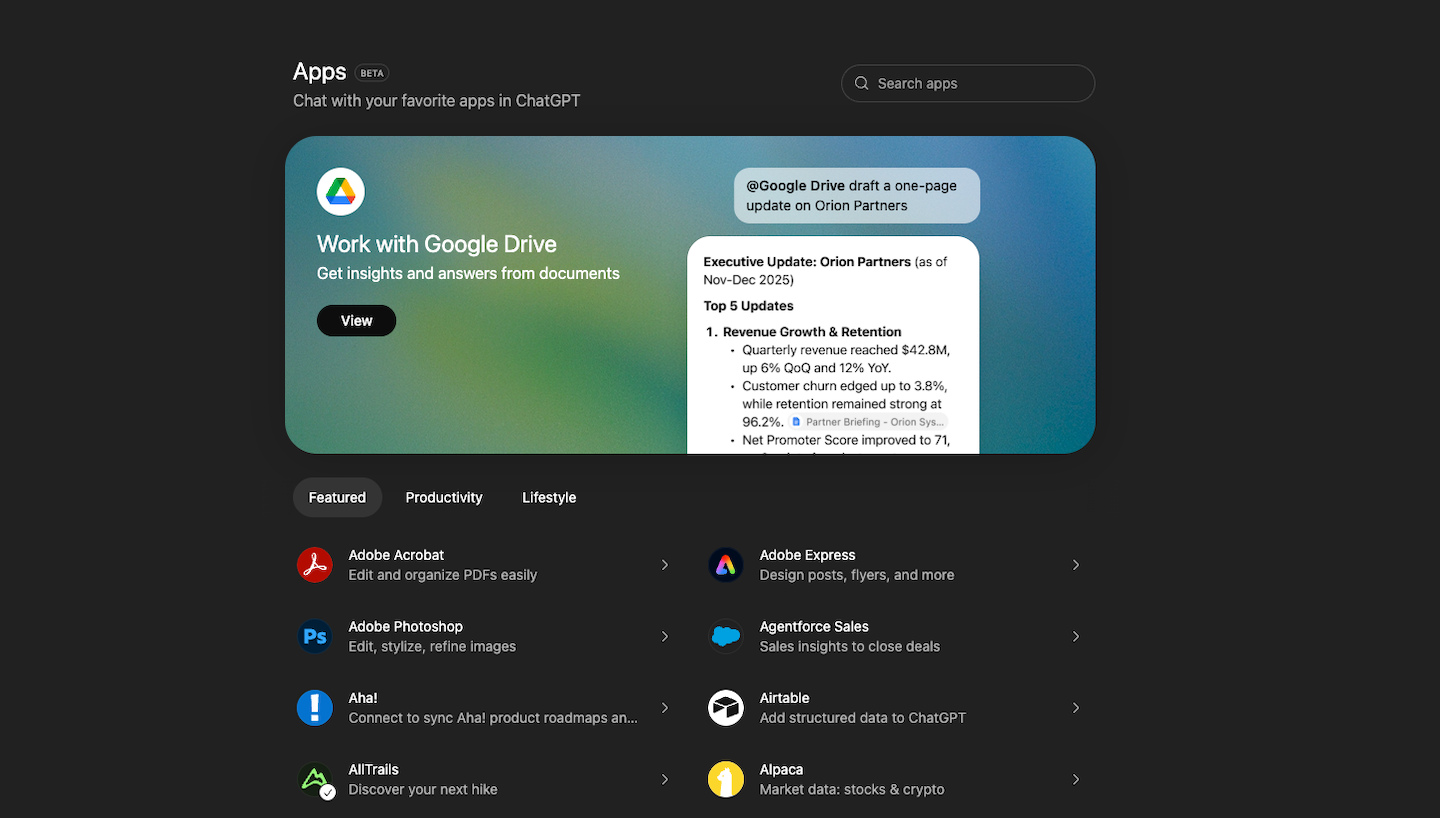

Several enterprise AI platforms have emerged to address exactly this need, offering the capabilities employees want with the governance organizations require:

Microsoft 365 Copilot represents the natural evolution for organizations already invested in the Microsoft ecosystem. Copilot integrates directly into Word, Excel, PowerPoint, Outlook, Teams, and other Microsoft 365 applications, combining large language models with your work context from Microsoft Graph. It includes Enterprise Data Protection (EDP), which keeps data within Microsoft 365 boundaries and supports GDPR and other compliance standards. With GPT-5 now available in Microsoft 365 Copilot, organizations get access to the latest AI capabilities with enterprise-grade security. At $30 per user per month, Copilot provides these capabilities with proper data governance and the convenience of integration with tools employees already use daily.

Google Workspace with Gemini offers a similar approach for organizations in the Google ecosystem. As of January 2025, Google includes AI features in Workspace Business and Enterprise plans, making enterprise AI more accessible. Your data is not used to train Gemini models or for ad targeting, you maintain control over your content, and Gemini only retrieves data the user has permission to access. Gemini has attained privacy and security certifications including ISO 42001 and SOC 1/2/3, and it can help organizations meet HIPAA compliance requirements. Google's Gemini 2.5 Flash and Gemini 2.5 Pro models provide state-of-the-art AI capabilities with proper enterprise governance. For organizations already using Google Workspace, this represents a seamless path to governed AI adoption.

ChatGPT Enterprise and ChatGPT Team provide OpenAI's latest GPT-5 capabilities with business-appropriate controls. With ChatGPT Enterprise, customer prompts and company data are not used for training OpenAI models, data is encrypted at rest (AES-256) and in transit (TLS 1.2+), and an admin console lets you manage team members with domain verification, SSO, and usage insights. ChatGPT Team starts at $30 per month per user (minimum 2 users) for smaller organizations, while Enterprise provides custom pricing with enhanced capabilities for larger deployments. Both offer the GPT-5 capabilities employees already know but with proper business data handling.

Claude for Enterprise and Claude Team from Anthropic provide another enterprise option with particular strength in longer context windows and the Claude Sonnet 4.5 model. The Enterprise plan introduces single sign-on (SSO) and domain capture, role-based access with fine-grained permissioning, audit logs for security and compliance monitoring, and System for Cross-domain Identity Management (SCIM) for automated user provisioning. Claude for Enterprise offers an expanded 500K context window and native GitHub integration for engineering teams to sync repositories. Claude Team starts at $30 per month per user (minimum 5 users), while Enterprise offers custom pricing and enhanced capabilities for larger organizations.
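
For budgeting, the per-seat prices quoted above reduce to simple arithmetic. The sketch below uses only the figures from this article and makes several assumptions that should be checked against each vendor's current pricing: that teams smaller than the minimum are billed for the minimum seat count, that annual cost is twelve times the monthly price, and that discounts and taxes are ignored. The function name `annual_cost` is ours, not a vendor's.

```python
PRICE_PER_USER_PER_MONTH = 30  # USD, the list price quoted in the text above

# Minimum seat counts quoted above; None means no minimum was stated.
MINIMUM_SEATS = {
    "Microsoft 365 Copilot": None,
    "ChatGPT Team": 2,
    "Claude Team": 5,
}


def annual_cost(plan: str, users: int) -> int:
    """Estimate yearly spend in USD, assuming billing rounds up to the minimum."""
    minimum = MINIMUM_SEATS[plan] or 1
    billable_seats = max(users, minimum)
    return billable_seats * PRICE_PER_USER_PER_MONTH * 12


print(annual_cost("Claude Team", 3))    # billed as 5 seats under our assumption
print(annual_cost("ChatGPT Team", 40))
```

Under these assumptions a three-person team on Claude Team would pay for five seats, or $1,800 a year, and a 40-person team on ChatGPT Team would pay $14,400.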

Beyond these major platforms, organizations should consider AI governance solutions that provide oversight across multiple AI tools. These solutions create policy enforcement, monitor AI usage, and provide audit trails regardless of which AI platform employees use. For organizations that need to support multiple AI providers or that want to maintain flexibility as the AI landscape evolves, governance platforms provide an abstraction layer that separates policy enforcement from specific tools.
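
To make the idea of an abstraction layer less abstract, here is a minimal sketch of what such a layer does before a prompt reaches any provider: apply policy, then record an audit entry. Everything in it is hypothetical, including the `GovernanceGateway` class and the two regular expressions, which are far too narrow for real use. Commercial governance products work differently and cover much more.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Illustrative patterns only; a real policy would need far broader coverage.
POLICY_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class AuditEntry:
    user: str
    provider: str
    timestamp: str
    findings: list


@dataclass
class GovernanceGateway:
    """Hypothetical policy layer that sits in front of any AI provider."""
    audit_log: list = field(default_factory=list)

    def check(self, user: str, provider: str, prompt: str) -> str:
        """Redact policy matches and log who sent what kind of data where."""
        findings = []
        redacted = prompt
        for label, pattern in POLICY_PATTERNS.items():
            if pattern.search(redacted):
                findings.append(label)
                redacted = pattern.sub(f"[{label.upper()} REDACTED]", redacted)
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(AuditEntry(user, provider, timestamp, findings))
        return redacted


gateway = GovernanceGateway()
safe_prompt = gateway.check(
    "alice", "any-provider", "Email jane.doe@example.com, SSN 123-45-6789"
)
print(safe_prompt)
print(gateway.audit_log[0].findings)
```

The point of the design is that the policy and the audit log live in one place, so swapping or adding AI providers does not change how data handling is enforced or recorded.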

Beyond Cloud Platforms: On-Premises and Decentralized AI Solutions

While cloud-based enterprise AI platforms address the needs of most mid-market companies, some organizations face requirements that demand even greater control over their data and infrastructure. For companies in highly regulated industries, those handling extremely sensitive intellectual property, or organizations with strict data sovereignty requirements, on-premises deployment or emerging decentralized alternatives may be necessary—and increasingly viable.

On-Premises Self-Hosted LLM Deployments represent the maximum control option. In heavily regulated industries like healthcare and finance, companies need the ability to self-host open-source LLMs to retain control of their data. On-premises LLMs process all data within private infrastructure, meaning nothing leaves the environment, which helps meet data protection requirements under GDPR, HIPAA, and CCPA.

The landscape for self-hosted AI has transformed dramatically in recent years. Open-source models like Meta's Llama, Mistral, and others are fully deployable on-premises, and since the release of Meta Llama 3.1 they have become capable enough to compete with proprietary counterparts such as GPT-5, Claude Sonnet 4.5, and Gemini 2.5 Pro on many business tasks. This means organizations no longer face a binary choice between capability and control. For many workloads, they can have both.

On-premises deployment isn't just about security—it's about total sovereignty. Your data never leaves your infrastructure. You control every aspect of the deployment, from model selection to fine-tuning. You're not subject to API rate limits, usage caps, or provider policy changes. For organizations where these factors matter more than absolute cutting-edge capability, on-premises deployment provides a compelling solution.

The challenges are significant but increasingly manageable. Deploying LLMs on-premises requires high-performance GPUs such as the NVIDIA A100, H100, or L40, depending on model size and throughput requirements. These GPUs must be hosted in local data centers or private cloud clusters with appropriate cooling, networking, and storage, and organizations need technical expertise to deploy, maintain, and optimize the systems. But for companies that already operate significant IT infrastructure, or that work in sectors where data sovereignty is non-negotiable (defense, intelligence, certain financial services, healthcare organizations with particularly sensitive data), these trade-offs are worthwhile.
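
A rough sizing calculation shows why GPU choice depends on model size. The sketch below estimates the memory needed for model weights alone (parameters times bytes per parameter) and then the number of cards needed to hold them. The 20% overhead factor is our own placeholder for activations and cache, not a measured figure, and real deployments should be sized with the serving framework's own tooling.

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def min_gpus(params_billions: float, gpu_memory_gb: float,
             precision: str = "fp16", overhead: float = 1.2) -> int:
    """Cards needed to hold the weights plus an assumed overhead margin."""
    needed_gb = weight_memory_gb(params_billions, precision) * overhead
    return math.ceil(needed_gb / gpu_memory_gb)


# A 70-billion-parameter model on 80 GB cards, at two precisions.
print(weight_memory_gb(70))        # weights only, fp16
print(min_gpus(70, 80))            # fp16 with the assumed 20% margin
print(min_gpus(70, 80, "int4"))    # 4-bit quantized
```

At 16-bit precision a 70-billion-parameter model needs about 140 GB for weights alone, which is more than a single 80 GB card can hold, while a 4-bit quantized version of the same model fits on one card under these assumptions.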

Blockchain-Based Decentralized AI Solutions represent an emerging alternative focused on transparency, data sovereignty, and resistance to centralized control. The Internet Computer Protocol (ICP) has pioneered the ability to run AI models entirely on blockchain infrastructure, creating what they call "on-chain AI."

DecideAI, a web3 company focused on creating AI models, announced a partnership with Internet Computer (ICP) to bring the GPT-2 language model onto the blockchain. ICP supports smart contracts that can store AI models up to 500GiB, and provides an in-browser AI chatbot that uses an open-source LLM served from ICP. Projects like ELNA AI, DeVinci, and ArcMind AI are building fully on-chain AI agents and assistants that operate within decentralized infrastructure.

Let's be clear about what blockchain-based AI offers and what it doesn't. These solutions aren't competing with GPT-5 or Claude Sonnet 4.5 on raw capability. The models running on blockchain infrastructure tend to be smaller and less sophisticated due to computational constraints. Currently, smart contracts can run small AI models, such as image classifiers trained on ImageNet, for onchain image classification, with short-term improvements aimed at decreasing latency and supporting larger models. The long-term vision involves GPU compute on blockchain, but today's reality is more modest.

What blockchain-based AI does offer is a unique value proposition for specific use cases: verifiable AI execution where the entire AI interaction happens on transparent, tamper-proof infrastructure. This matters for applications where proving that AI wasn't manipulated, demonstrating data wasn't leaked, or ensuring continued operation regardless of any single provider's decisions is more important than having the absolute best AI capability.

Consider scenarios where blockchain AI's transparency and decentralization create unique value:

Regulated applications requiring proof of AI behavior: Organizations that need to demonstrate to regulators or auditors exactly how their AI systems operated, with cryptographically verifiable records that can't be altered retroactively.

Multi-party AI applications requiring trust neutrality: Situations where multiple organizations need to use AI together but don't trust each other or any central provider—consortium research, competitive collaboration, or cross-organization workflows where blockchain's trust-neutrality provides the necessary foundation.

Applications requiring AI continuity regardless of provider viability: Systems that need to continue operating even if specific AI providers shut down, change terms, or restrict access. When deployed on blockchain infrastructure, the AI continues operating as long as the blockchain network exists.

Privacy-focused applications exploring decentralized alternatives: Organizations exploring Web3 architectures who want AI capabilities that align with their decentralized infrastructure philosophy rather than introducing centralized dependencies.
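
The phrase "cryptographically verifiable records that can't be altered retroactively" rests on a simple mechanism: each record's hash includes the hash of the record before it. The sketch below is a plain hash chain using Python's standard `hashlib`. It is not how ICP or any specific blockchain is implemented, and the helper names (`append`, `verify`) are ours. On its own, a hash chain only makes tampering detectable; a decentralized network adds the part where no single party can quietly rewrite the whole chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the payload together with the previous entry's hash."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    """Add a record whose hash commits to everything before it."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "hash": entry_hash(prev, payload)})


def verify(chain: list) -> bool:
    """Recompute every hash; any edited record breaks the chain."""
    prev = GENESIS
    for entry in chain:
        if entry["hash"] != entry_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


log = []
append(log, {"prompt": "classify claim 1", "output": "approve"})
append(log, {"prompt": "classify claim 2", "output": "deny"})
print(verify(log))

log[0]["payload"]["output"] = "deny"  # a retroactive edit
print(verify(log))
```

The first check succeeds and the second fails, because the edited record no longer matches the hash that was stored when it was written.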

The blockchain AI space is early-stage and evolving rapidly. The models available today won't replace enterprise-grade Claude or ChatGPT for most business applications. But for organizations with specific requirements around transparency, sovereignty, or censorship resistance, these emerging alternatives deserve evaluation. They represent a different philosophy about how AI should be deployed and governed, and for some use cases, that philosophical difference creates practical value.

Choosing Your Path: Matching Solutions to Requirements

The expanding array of enterprise AI options—from major cloud platforms to on-premises deployments to blockchain alternatives—means organizations need frameworks for making appropriate choices rather than defaulting to what's most popular or most hyped.

Start with your requirements, not with solutions:

Data sovereignty and compliance needs: If you operate in jurisdictions with strict data residency requirements, or if regulatory compliance makes demonstrating complete data control essential, on-premises deployment might be mandatory regardless of other considerations. Major cloud platforms (Microsoft 365 Copilot, Google Workspace with Gemini) offer strong compliance certifications and data protection, but they're still third-party infrastructure. For organizations where that distinction matters legally or strategically, self-hosted solutions become necessary.

Integration and convenience priorities: If AI needs to integrate seamlessly with existing productivity tools and if employee adoption is critical, cloud platforms from Microsoft, Google, OpenAI, or Anthropic provide the smoothest path. These solutions meet security and compliance requirements for most mid-market organizations while offering the convenience that drives adoption. The governance they provide is sufficient for most business use cases.

Capability versus control trade-offs: Organizations willing to accept slightly less cutting-edge AI capability in exchange for complete infrastructure control should evaluate on-premises open-source models. The gap between open-source and proprietary models has narrowed dramatically. For many business applications, self-hosted Llama or Mistral models provide more than adequate capability with total data sovereignty.

Emerging use cases with unique requirements: If your organization is exploring AI applications where transparency of execution matters more than raw capability, or where trust-neutral infrastructure is valuable, blockchain-based AI solutions become worth investigating despite their current limitations. These are niche use cases today, but they're real use cases that can't be served by traditional alternatives.

Practical implementation often involves hybrid approaches: Most organizations don't need a single solution for all AI use cases. Sensitive customer data processing might require on-premises deployment, while general productivity assistance works fine on Microsoft 365 Copilot. Proof-of-concept applications might start on cloud platforms and migrate to on-premises infrastructure once validated. The goal isn't finding the one right solution—it's matching solutions appropriately to diverse requirements across your organization.
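
The requirements-first approach above can be written down as a small decision helper. This is only a sketch of the reasoning in this section: the three yes/no questions and the `suggest_deployments` function are simplifications we introduce for illustration, and a real evaluation would weigh many more factors, including cost and in-house expertise.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical yes/no answers for one AI use case."""
    strict_data_residency: bool = False
    needs_productivity_suite_integration: bool = False
    needs_verifiable_execution: bool = False


def suggest_deployments(req: Requirements) -> list[str]:
    """Map requirements to the deployment options discussed above."""
    options = []
    if req.strict_data_residency:
        options.append("on-premises self-hosted model")
    if req.needs_productivity_suite_integration:
        options.append("cloud enterprise platform")
    if req.needs_verifiable_execution:
        options.append("blockchain-based AI (evaluate)")
    if not options:
        # No special constraints: default to the most convenient governed option.
        options.append("cloud enterprise platform")
    return options


# Different use cases in the same organization can get different answers.
print(suggest_deployments(Requirements(strict_data_residency=True)))
print(suggest_deployments(Requirements(needs_productivity_suite_integration=True)))
```

Running the helper once per use case, rather than once per company, mirrors the hybrid approach: sensitive data processing and general productivity work will often land on different options.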

The key insight is that the shadow AI problem—employees using personal AI accounts because organizations haven't provided appropriate alternatives—can now be solved across the entire spectrum of organizational needs. Whether you need cloud convenience, on-premises control, or emerging decentralized alternatives, properly governed options exist. The question isn't whether to provide employees with legitimate AI tools—it's which combination of properly governed solutions best serves your organization's diverse requirements.

Implementation: Moving from Shadow AI to Governed Adoption

Selecting an enterprise AI platform solves the technical problem but doesn't solve the adoption problem. Employees won't abandon their personal AI accounts just because you've provided an alternative; they'll abandon them when the alternative is at least as convenient as, and more capable than, personal accounts for their specific needs. Implementation requires both technical deployment and change management.

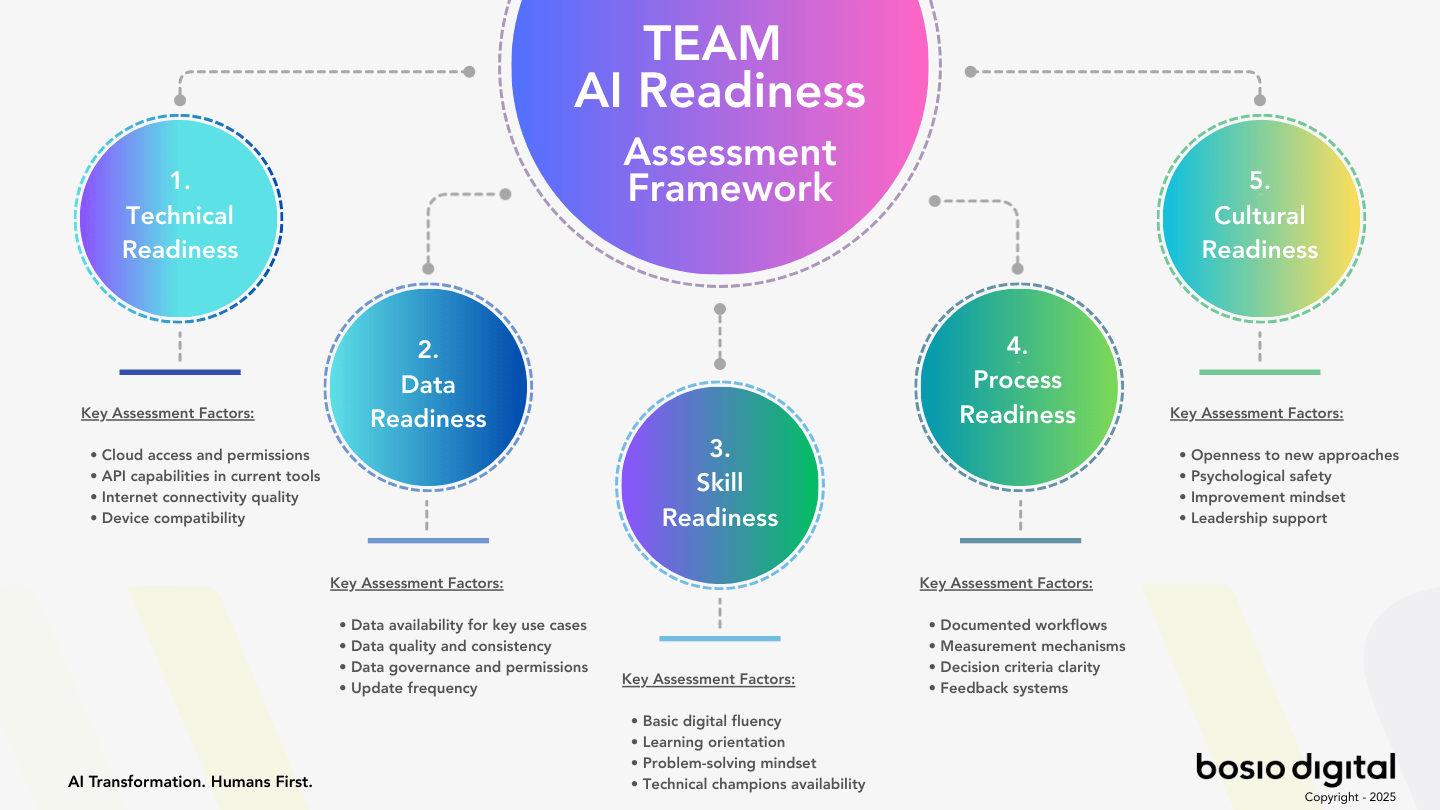

The first step is assessment: understand the current state of shadow AI in your organization. This doesn't mean launching an investigation or punishing employees for using personal accounts. It means honestly assessing what's happening so you can address it effectively. Survey employees anonymously about their AI tool usage. Ask what they're using AI for, what tools they prefer, what problems they're solving, and what concerns they have. This assessment accomplishes two things: it gives you data to inform your implementation, and it signals to employees that you're taking AI governance seriously without being punitive about past use.

The second step is communication: explain why you're implementing enterprise AI and what it means for employees. Frame it as enabling rather than restricting—the goal isn't to prevent AI use but to make it safe, sustainable, and more powerful. Acknowledge that employees have been using personal AI accounts because they're useful and because the organization hadn't provided alternatives. Make clear that you're not punishing past use but establishing proper governance going forward.

The third step is gradual transition: don't expect employees to immediately abandon personal accounts for enterprise alternatives. Implement in phases, starting with the teams or use cases where enterprise AI provides the clearest advantages. Early wins build momentum and create champions who can evangelize enterprise adoption to colleagues.

For highly regulated functions—HR, finance, legal, healthcare—make enterprise AI the only authorized option and enforce it through policy and training. These functions can't afford the compliance risk of personal account use, and employees in these roles typically understand the regulatory context that makes governance necessary.

For other functions, compete for adoption rather than mandating it. Make the enterprise alternative more convenient, more capable, or more integrated with existing workflows than personal accounts. When enterprise AI solves problems personal accounts can't—like accessing internal knowledge bases, integrating with business systems, or collaborating with teammates—employees will naturally migrate because it serves their needs better.

The fourth step is ongoing enablement: provide training, resources, and support to help employees succeed with enterprise AI. Most employees are self-taught AI users with idiosyncratic approaches that work for them but might not represent best practices. Formalize and share effective techniques. Create prompt libraries for common business use cases. Document workflows that combine AI with existing business processes. The goal is making enterprise AI not just as good as personal accounts but demonstrably better because it's supported, optimized, and integrated with business context.

The fifth step is measurement and iteration: track adoption, usage patterns, and business outcomes. Which teams are getting value? What use cases are emerging? Where are employees still using personal accounts and why? Use this data to refine your implementation, add capabilities, and demonstrate ROI. Enterprise AI adoption isn't a one-time project—it's an ongoing program that evolves as AI capabilities improve and as your organization discovers new applications.
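As one way to picture the measurement step, the sketch below computes the share of reported AI usage that goes through the enterprise platform, broken down by team. The input format (pairs of team name and channel) is an assumption; in practice the data might come from anonymous survey responses combined with enterprise platform logs.

```python
from collections import defaultdict


def enterprise_share_by_team(events):
    """Return {team: fraction of usage on the enterprise platform}.

    `events` is an iterable of (team, channel) pairs, where channel is
    "enterprise" or "personal". This format is a hypothetical one chosen
    for the example.
    """
    totals = defaultdict(int)
    enterprise = defaultdict(int)
    for team, channel in events:
        totals[team] += 1
        if channel == "enterprise":
            enterprise[team] += 1
    return {team: enterprise[team] / totals[team] for team in totals}


if __name__ == "__main__":
    sample = [
        ("sales", "enterprise"),
        ("sales", "personal"),
        ("finance", "enterprise"),
        ("marketing", "personal"),
    ]
    for team, share in sorted(enterprise_share_by_team(sample).items()):
        print(f"{team}: {share:.0%} of reported usage is on the enterprise platform")
```

Teams with a low share are not offenders to chase; they are the places where the enterprise alternative is still losing on convenience or capability.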

Building a Sustainable AI Governance Framework

Beyond immediate implementation, organizations need sustainable governance frameworks that address not just current shadow AI risks but future AI adoption challenges. As AI capabilities expand and new AI tools emerge, governance frameworks provide the structure to evaluate, approve, and integrate new capabilities without repeating the shadow AI problem.

Effective AI governance starts with clear policies about when and how AI can be used for business purposes. These policies should specify:

Approved AI tools and platforms: Which AI systems have been evaluated and approved for business use? What specific capabilities does each provide? Employees need clear guidance about what they can use rather than vague prohibitions about what they can't.

Data handling requirements: What types of data can be shared with AI systems? What approvals are required for sensitive data? How should employees sanitize or anonymize data when using AI? Employees want to do the right thing—they need practical guidance about what that means in specific contexts.

Use case boundaries: What business functions are appropriate for AI assistance? Where should AI augment human decision-making versus making autonomous decisions? These boundaries will evolve as AI capabilities improve and as organizations gain experience, but having explicit policies creates a foundation for responsible AI use.

Roles and responsibilities: Who approves new AI tools? Who monitors AI usage? Who responds when problems arise? Clear accountability prevents governance from being everyone's responsibility (which means it's no one's responsibility) and ensures that AI governance gets the attention it requires.
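The four policy areas above can also be captured in a machine-readable form, which makes them easier to publish, audit, and enforce consistently. What follows is a minimal sketch under stated assumptions: the tool names, data classes, and approver role are hypothetical placeholders, and a real policy would carry far more detail.

```python
from dataclasses import dataclass, field


@dataclass
class AIUsagePolicy:
    """Hypothetical, simplified representation of an AI usage policy."""
    # Approved tool name -> data classes that may be shared with it.
    approved_tools: dict[str, set[str]] = field(default_factory=dict)
    # Data classes that need sign-off before they are used with any tool.
    requires_approval: set[str] = field(default_factory=set)
    # Role accountable for approving new tools.
    tool_approver: str = "unassigned"


def check_request(policy: AIUsagePolicy, tool: str, data_class: str) -> str:
    """Return 'allow', 'needs approval', or a 'deny: ...' reason."""
    if tool not in policy.approved_tools:
        return "deny: tool not approved"
    if data_class not in policy.approved_tools[tool]:
        return "deny: data class not allowed for this tool"
    if data_class in policy.requires_approval:
        return "needs approval"
    return "allow"


# Example values are illustrative only.
EXAMPLE_POLICY = AIUsagePolicy(
    approved_tools={"enterprise_assistant": {"public", "internal", "customer"}},
    requires_approval={"customer"},
    tool_approver="security_lead",
)

if __name__ == "__main__":
    print(check_request(EXAMPLE_POLICY, "enterprise_assistant", "internal"))
    print(check_request(EXAMPLE_POLICY, "enterprise_assistant", "customer"))
    print(check_request(EXAMPLE_POLICY, "personal_chatbot", "public"))
```

Note that every denial comes with a reason. That mirrors the point about giving employees clear guidance rather than vague prohibitions.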

Beyond policies, effective governance requires technical controls that make compliance easy and violations difficult. This includes:

Single sign-on integration that makes approved AI tools as convenient to access as personal accounts—employees shouldn't face friction when doing the right thing.

Data loss prevention (DLP) that stops sensitive data from leaving your organization's security perimeter, even if employees attempt to share it with unauthorized AI tools.

Usage monitoring that provides visibility into how approved AI tools are being used, enabling you to identify both problems (potential misuse) and opportunities (emerging use cases worth formalizing).

Access controls that ensure employees can only use AI with data they're authorized to access, preventing inadvertent exposure of sensitive information through AI conversations.
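To give a feel for what a data loss prevention check does at its simplest, here is a toy sketch that scans a prompt for two kinds of sensitive pattern and masks them before the text goes anywhere. The patterns and labels are assumptions chosen for illustration; commercial DLP products rely on much richer detection than a pair of regular expressions, and this is not a substitute for one.

```python
import re

# Illustrative patterns only; real DLP rules are far more extensive.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Mask matches of each pattern and report which labels were found."""
    findings = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings.append(label)
    return text, findings


if __name__ == "__main__":
    prompt = "Draft a reply to jane.doe@example.com about SSN 123-45-6789."
    cleaned, found = redact(prompt)
    print(cleaned)
    print("Flagged:", found)
```

A check like this can either block the request or pass along the masked version, and the list of findings can feed the usage monitoring described above.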

Finally, effective governance requires ongoing education that helps employees understand not just the rules but the reasoning behind them. When employees understand why personal AI accounts create risks—not in abstract terms but through concrete examples relevant to their work—they become allies in governance rather than obstacles to overcome. When they understand how enterprise AI solutions protect them as well as the organization—by ensuring their work product isn't exposed if their personal account is compromised—they see governance as enablement rather than restriction.

Conclusion: From Liability to Strategic Asset

The shadow AI problem isn't primarily about technology—it's about the gap between organizational policy and employee reality. You weren't using personal AI accounts to circumvent security or defy policy. You were using them because AI genuinely helps you do your job better, and your organization hadn't provided legitimate alternatives. This isn't malicious; it's inevitable.

The companies that will succeed in the AI era aren't those that ban personal AI use or those that ignore it and hope for the best. They're the companies that provide properly governed enterprise alternatives that are as convenient and capable as personal accounts but designed for business use with appropriate security, compliance, and administrative controls.

The path forward is clear: acknowledge that AI use is happening, understand the risks it creates, provide legitimate alternatives, and build governance frameworks that enable rather than restrict. This isn't about preventing AI adoption—it's about channeling AI adoption in directions that create value for your organization without exposing you to unnecessary risk.

You were trying to do your job better when you refined that competitive strategy using your personal ChatGPT account. With Claude for Enterprise or Microsoft 365 Copilot under proper governance, you can continue refining strategies without creating intellectual property exposure. Your sales team analyzing customer data can keep generating insights without compliance violations. Your CFO building financial models can maintain analytical capabilities without data security risks. Your engineering lead working through technical decisions can continue leveraging AI without exposing your product roadmap.

Moving from shadow AI to governed enterprise adoption isn't just about reducing risk—though it does that. It's about transforming AI from a hidden liability into a strategic asset that every employee can use safely, effectively, and in ways that compound organizational capabilities rather than fragmenting them across personal accounts. The companies that make this transition quickly and effectively will find themselves with a significant competitive advantage: they'll have empowered their entire workforce with AI capabilities that their competitors are still debating, with governance that their competitors are still struggling to implement, and with organizational learning that accumulates and amplifies over time rather than remaining trapped in personal accounts.

The choice isn't whether you'll use AI—you already are. The choice is whether that use creates strategic value and manageable risk through proper governance, or continues creating hidden liabilities through personal accounts you can't monitor, can't protect, and can't control.