Most AI consultants will tell you adoption is a technology problem. They're wrong.

The real issue was stated plainly in a recent Harvard Business Review article: "Most firms struggle to capture real value from AI not because the technology fails—but because their people, processes, and politics do."

The data backs this up. According to the RAND Corporation, more than 80% of AI projects fail, twice the failure rate of IT projects that don't involve AI. MIT's NANDA initiative found that despite $30–40 billion in enterprise spending on generative AI, 95% of organizations are seeing zero measurable business return. And S&P Global reports that 42% of companies abandoned most of their AI initiatives in 2025, up from just 17% the year before.

These aren't technology failures. They're human failures. Organizational failures. Leadership failures.

The companies succeeding with AI aren't the ones with the best algorithms or the biggest budgets. They're the ones who understand a simple truth: AI adoption is fundamentally a human problem. And solving human problems requires putting humans first.

That's not idealism. It's the only strategy that actually works.

The Problem: AI Adoption Has a Human Problem

Let's be honest about what's happening. Organizations are spending billions on AI tools, running countless pilots, and generating impressive demos—then watching it all stall before delivering real value.

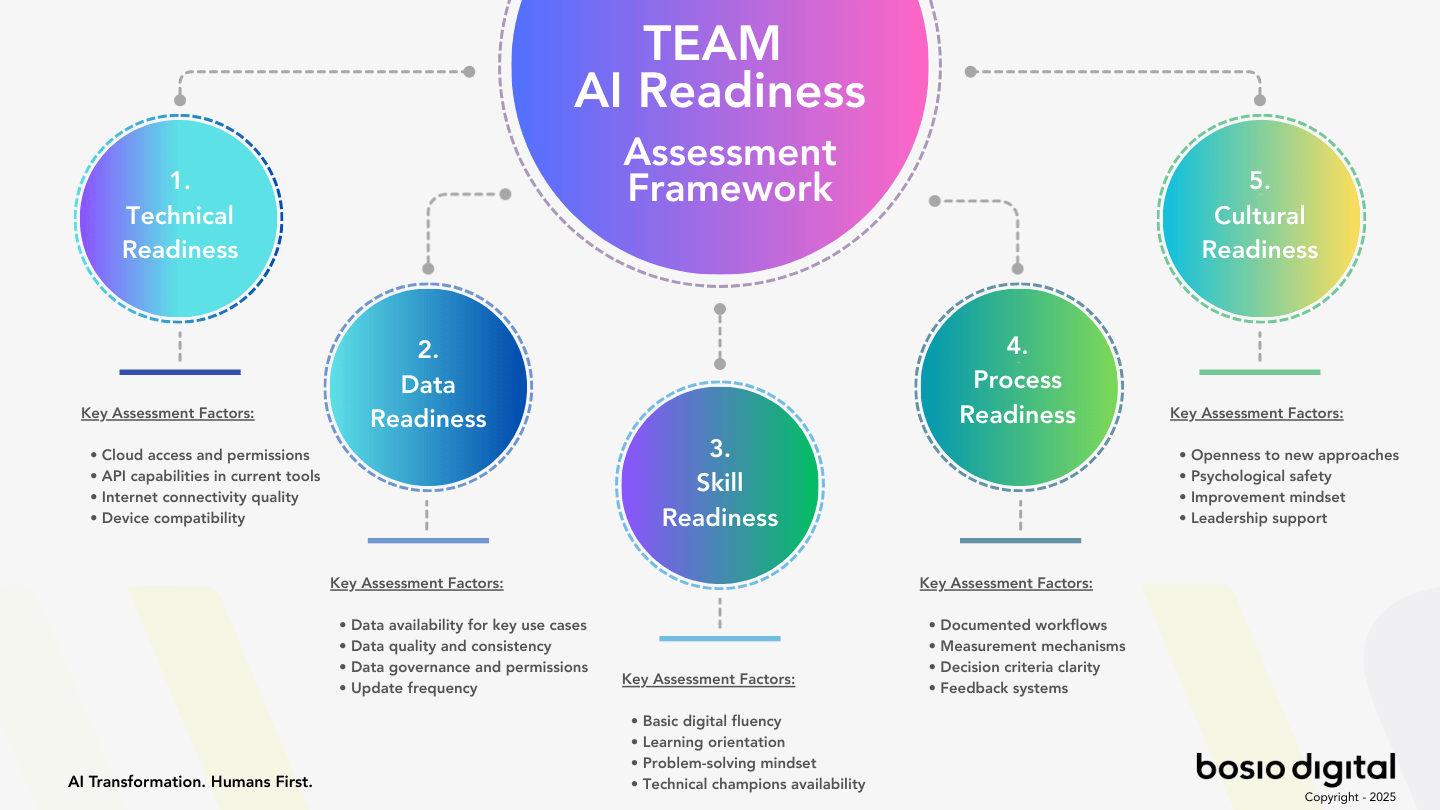

The RAND Corporation's comprehensive study found that AI projects fail at twice the rate of traditional IT projects. Not because AI technology is immature. Not because the use cases aren't valid. But because of five distinctly human and organizational problems:

- Stakeholders misunderstand or miscommunicate what problem actually needs to be solved.

- Organizations lack the data and, more importantly, the organizational processes to manage that data.

- Companies focus on using the latest technology rather than on solving real problems for their intended users.

- Organizations lack the infrastructure and processes to deploy completed AI models.

- AI gets applied to problems that are genuinely too difficult for it to solve, a judgment call that requires human wisdom, not just technical assessment.

Notice what's not on that list: "The AI didn't work."

The MIT NANDA report puts an even finer point on it. Only 5% of AI pilot programs achieve rapid revenue acceleration. The rest stall, delivering little to no measurable impact. And here's the key finding: how companies adopt AI matters more than which AI they adopt. External vendor partnerships succeed about 67% of the time, while internal builds succeed only one-third as often. Why? Because external partnerships force organizations to confront their readiness. They can't just throw technology at the problem.

The Fear Equation

Underneath these organizational failures lies something more fundamental: fear.

NTT DATA research found that in 2021, 37% of respondents were more concerned than excited about AI. By 2023, that share had jumped to 52%, while the share who were more excited than concerned dropped from 18% to just 10%.

Think about what this means for adoption. More than half your workforce approaches AI with more concern than excitement. And that concern isn't irrational—they're reading the same headlines about automation and job displacement that everyone else is reading.

When employees don't trust a tool, they don't just fail to embrace it. They actively work against it. They find workarounds. They generate reasons why the AI output isn't trustworthy. They quietly sabotage implementation by simply not using the system, or using it in ways that don't capture value.

Recent research published in Harvard Business Review revealed something even more troubling: a "hidden penalty" for using AI at work. When reviewers believed an engineer had used AI assistance, they rated that engineer's competence 9% lower—even when evaluating identical work. The penalty was more severe for women and older workers.

This creates a vicious cycle. Organizations push AI adoption. Employees fear being seen as less competent if they use it. Adoption stalls. Organizations push harder. Resistance increases.

The Leadership Blind Spot

There's a fundamental disconnect between how executives see AI and how employees experience it.

From the C-suite, AI looks like efficiency gains, competitive advantage, and bottom-line improvement. From the frontline, it looks like a threat to livelihood, identity, and professional value.

Harvard Business School professors recently wrote that too many AI initiatives "failed to scale or create measurable value... because they lacked the organizational scaffolding to bridge technical potential and business impact." Technology enables progress, they noted, "but without aligned incentives, redesigned decision processes, and an AI-ready culture, even the most advanced pilots won't become durable capabilities."

This blind spot is why change management is so often treated as an afterthought, and why that approach is fatal to AI adoption. Leaders see a technology deployment. What they're actually attempting is an organizational transformation. And you can't transform an organization by installing software.

The MIT research found that one of the key factors separating AI success from failure was empowering line managers—not just central AI labs—to drive adoption. This makes sense. Line managers understand the workflows, the resistance points, the real concerns of their teams. Central labs understand the technology. Without both, you get pilots that never scale.

The "Humans First" Philosophy

"Humans First" sounds like a slogan. It's not. It's a design principle that changes every decision in an AI transformation.

The core idea is simple: AI should extend human capability, not replace human contribution. The goal isn't to make humans unnecessary. It's to automate the mundane so humans can do what humans do best—the work that actually creates value, differentiation, and meaning.

This reframes the entire conversation. Instead of asking "how can AI do this job?" we ask "how can AI make the people doing this job extraordinary?"

The Hierarchy of Human Work

Not all work is created equal. When we look at what people actually do in organizations, it falls into three broad categories:

Drudgery is the repetitive, time-consuming work that drains energy without engaging talent. Data entry. Manual reporting. Routine communications. Status updates. Information gathering across systems. This is work that has to be done, but doing it well doesn't distinguish anyone. It's maintenance, not creation.

Craft is skilled work that requires judgment, expertise, and problem-solving. Analysis. Complex decision-making. Skilled execution of professional expertise. This work benefits from AI assistance, but humans need to lead. The AI can accelerate and augment, but the human judgment is what creates quality.

Meaning is work that only humans can do. Building relationships. Creative insight. Ethical judgment. Presence with other humans in moments that matter. Strategic thinking that requires understanding context, politics, and human nature. This is where human value is irreplaceable.

A "Humans First" approach to AI automates drudgery, augments craft, and protects space for meaning. It doesn't try to replace human judgment in high-stakes decisions. It doesn't pretend that AI-generated "creativity" is the same as human creative insight. It recognizes that human connection—with customers, with colleagues, with stakeholders—is a competitive advantage that AI can support but never replace.

The Competitive Advantage Flip

There's an old way of thinking about AI that goes like this: AI + fewer humans = efficiency. Reduce headcount, reduce costs, improve margins.

This thinking isn't wrong, exactly. But it misses the bigger opportunity.

The new equation is: AI + empowered humans = differentiation.

When your marketing team spends 15 hours a week generating reports manually, they have 15 fewer hours for strategy. When your sales team spends 20 hours a week on data entry and CRM updates, they have 20 fewer hours for customer relationships. When your operations team spends 10 hours a week chasing information across systems, they have 10 fewer hours for process improvement.

AI can give that time back. But only if the humans are still there to use it.

The companies that win won't be the ones that replaced the most humans with AI. They'll be the ones whose humans, augmented by AI, consistently outperform competitors who took the efficiency-only approach.

Your people plus AI beats AI alone. Every time.

What "Humans First" Looks Like in Practice

This philosophy has to translate into concrete decisions:

Use case selection: Before greenlighting an AI initiative, ask: does this elevate the humans doing this work, or diminish them? If AI will handle the drudgery and free people for higher-value work, proceed. If AI will hollow out meaningful work and leave people feeling like button-pushers, reconsider.

Workflow design: Decide explicitly where humans stay in the loop. High-stakes decisions should remain with humans. Edge cases should escalate to humans. The AI handles volume and routine; humans handle judgment and exceptions.

Communication: Position AI internally as an amplifier, not a replacement. This isn't spin—it's strategy. The language you use shapes how employees perceive the change, which shapes whether they adopt or resist.

Metrics: Track human impact alongside business metrics. Time saved on routine tasks. Employee satisfaction with new workflows. Quality of human judgment in the remaining decisions. Increase in time spent on high-value work. These aren't soft metrics—they're leading indicators of sustainable adoption.

Why This Works (And Why "Efficiency First" Doesn't)

The psychology of adoption is straightforward: when people feel elevated, they adopt enthusiastically. When people feel threatened, they resist ingeniously.

This is human nature. And you can either work with it or against it.

NTT DATA's research put it bluntly: "If employees don't trust a concept or a tool, not only will they fail to embrace it—they'll actively work against it." That trust operates on three levels: employees need to trust the organization to do the right thing, trust the people governing the AI to act responsibly, and trust the output of the models to be reliable.

The "efficiency first" approach—deploying AI to reduce headcount and cut costs—destroys trust at all three levels. Employees don't trust organizations that view them as costs to cut. They don't trust AI governance led by people focused on replacement. And they don't trust AI outputs when their colleagues' jobs are being eliminated based on those outputs.

The "Humans First" approach builds trust at all three levels. The organization is demonstrably investing in employee success, not just shareholder returns. Governance is oriented around elevation, not replacement. And AI outputs are tools that make employees better at their jobs, not evidence in a case for their elimination.

The difference between compliance and commitment matters enormously here. Compliance means people use the AI because they're told to. Commitment means people use the AI because they want to—because it makes their work better.

Compliant users do the minimum. Committed users discover new applications, improve workflows, and drive the continuous improvement that turns a pilot into an enterprise capability. You can mandate compliance. You cannot mandate commitment. And without commitment, AI adoption stalls.

The Sustainability Argument

Efficiency-only approaches create brittle systems.

When you design AI workflows that minimize human involvement, you're optimizing for normal operations. But organizations don't operate in normal conditions. Markets shift. Customers have unusual requests. Crises emerge. Edge cases multiply.

Human judgment provides resilience. Humans can recognize when the AI is wrong. Humans can adapt to situations the training data didn't anticipate. Humans can make ethical calls when the algorithm produces a technically correct but obviously wrong answer.

Organizations that hollow out human contribution lose this resilience. They become dependent on AI systems that work until they don't—and when they don't, there's nobody left who understands how to intervene.

And that's before considering the competitive implications. If your strategy is to replace humans with AI, you're pursuing the same strategy as every other company with access to the same AI tools. There's no differentiation in that. The differentiation comes from humans using AI in ways your competitors haven't figured out yet.

The Talent Retention Factor

Top performers don't want to be replaced. They want to be amplified.

The best people in your organization have spent years developing expertise, judgment, and capabilities. They're not looking for roles where AI does the interesting work while they handle the exceptions. They want AI to handle the tedious work while they do more of what they're best at.

A "Humans First" approach is a recruiting and retention message. It says: we value human talent here. We're investing in AI to make talented people more effective, not to make talented people unnecessary.

The alternative message—efficiency first, humans as costs to cut—drives top performers toward organizations that value them. And it attracts employees who are comfortable being managed out of their jobs over time. That's not the talent base that wins in competitive markets.

The Mindful AI Foundation

Here's where I need to acknowledge something unusual about my background.

I've spent 25+ years in digital transformation consulting, working with global brands on complex technology adoptions. But I've also spent 25+ years in deep study and practice of human development—understanding how people actually change, resist, grow, and transform.

This isn't a common combination in AI consulting. Most consultants come from technology backgrounds or strategy backgrounds. They understand systems, processes, and business cases. What they often don't understand—because they haven't studied it or lived it—is the inner experience of transformation.

When you've spent decades working with how humans actually change, you learn things that don't show up in change management frameworks:

Resistance is natural, not pathological. The instinct to resist change isn't a bug in human nature—it's a feature. It protects us from unnecessary disruption. Working skillfully with resistance means understanding what it's protecting and addressing that, not overwhelming it with force.

Psychological safety is prerequisite to change. People don't experiment when they're afraid. They don't admit confusion when they're worried about looking incompetent. They don't adopt new tools when they fear those tools will be used against them. Creating the conditions for transformation requires creating safety first.

Sustainable change requires patience. The organizational equivalent of a crash diet doesn't work any better than actual crash diets. Forced adoption creates resistance that emerges later. Genuine transformation happens at the pace people can actually integrate change—which is slower than executives typically want, but much more durable.

Understanding has to come before action. Most AI implementations jump to solutions before genuinely understanding the problem, the people affected, and the organizational dynamics at play. Deep listening—actually hearing what people are saying and what they're not saying—precedes effective intervention.

This foundation shapes everything in our approach to AI adoption.

Change management grounded in how humans actually transform. Not templates and communication plans, but genuine understanding of what drives resistance and what creates openness. Working with fear, not against it.

Communication that addresses real fears, not surface objections. When someone says "the AI output isn't reliable," they might mean exactly that. Or they might mean "I'm afraid this tool is going to eliminate my job." Effective communication requires hearing the real concern.

Design that honors human dignity, not just efficiency. Every AI workflow either increases meaningful work or decreases it. Every implementation either elevates human capacity or diminishes it. These aren't side considerations—they're central design criteria.

Leadership that holds both business results and human flourishing. The AI transformation leader's job isn't just to hit adoption metrics. It's to guide an organization through change in a way that people can thrive in—which turns out to be the path that produces the best business results anyway.

This is why our approach achieves adoption rates of 70%+ within 90 days and employee satisfaction scores of 80%+. Not because we have better technology partners or fancier frameworks. Because we understand the human dynamics that make or break AI adoption, and we design the entire transformation around those dynamics.

The Implementation Reality

Philosophy matters, but implementation is where it becomes real. Here's what "Humans First" looks like in practice.

Champion Programs Over Top-Down Mandates

The MIT research found that empowering line managers, not just central AI labs, to drive adoption was a key factor in AI success. We've found that going further, by building champion networks at multiple levels, creates the grassroots momentum that top-down mandates can never generate.

Our champion model has three tiers:

Executive Champions provide strategic alignment and visible sponsorship. Their commitment signals to the organization that this matters.

Operational Champions provide team-level implementation support. They're the bridge between leadership and frontline, translating strategy into practical reality.

Power Users provide peer training and support. They're colleagues helping colleagues, which builds trust that no training program can create.

This structure creates momentum that's distributed, not dependent on a single leader or team. When champions are genuinely enthusiastic—because AI is actually making their work better—that enthusiasm spreads.

Communication That's Honest About Challenges

Most AI communication is either techno-utopianism (AI will solve everything!) or forced positivity (this is great for everyone!). Neither builds trust.

Effective communication acknowledges challenges while building genuine enthusiasm for what's possible. It addresses the fear directly: yes, AI is changing work. Here's how we're ensuring that change elevates you rather than threatens you. Here are the specific decisions we've made to protect meaningful work. Here's what we're committing to.

This means being clear about what AI will and won't do. It means being honest about the learning curve. It means acknowledging when something isn't working and course-correcting publicly.

The goal isn't to convince skeptics that they shouldn't be skeptical. It's to demonstrate through action that their concerns are being heard and addressed.

Training Designed for Different Roles and Concerns

Generic AI training fails because different people have different concerns, different starting points, and different relationships to the technology.

Executives need strategic context: what's possible, what's risky, what decisions need to be made, how to think about AI in their domain.

Managers need implementation skills: how to support teams through the transition, how to identify and address resistance, how to measure progress.

End users need practical competence: how to use the specific tools, how to recognize when AI output needs human review, how to integrate AI into daily workflows.

Champions need deep understanding: all of the above, plus how to train and support others.

Metrics That Include Human Impact

If you only measure adoption rates and efficiency gains, you'll optimize for adoption rates and efficiency gains. That often means forcing adoption and cutting headcount—which creates the backlash that kills long-term success.

We track human impact alongside business metrics:

- Time saved on routine tasks

- Time redirected to high-value work (not just saved, but actively reallocated)

- Employee satisfaction with AI-assisted workflows

- Quality of human judgment in AI-augmented decisions

- Employee perception of role elevation vs. diminishment

- Voluntary usage (people using AI because they want to, not because they're required to)

These aren't feel-good metrics. They're leading indicators. If employees are satisfied with AI-assisted workflows, adoption will sustain and expand. If they feel diminished, resistance will grow until the implementation fails.
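To make a few of these metrics concrete, here is a minimal Python sketch of how a team might roll them up from per-employee weekly records. The record fields (`hours_saved`, `hours_redirected`, `used_ai`, `required_to_use`, `satisfaction`), the 1-5 satisfaction scale, and the `human_impact_summary` function are all hypothetical assumptions for illustration, not a description of any specific measurement system. The sketch only shows two distinctions from the list: time saved versus time actually redirected, and voluntary versus required usage.

```python
from dataclasses import dataclass


@dataclass
class WeeklyRecord:
    """One employee's week. All field names are hypothetical, for illustration only."""
    hours_saved: float       # routine-task hours the AI tooling saved
    hours_redirected: float  # saved hours actually spent on high-value work
    used_ai: bool            # did the employee use the AI tools this week?
    required_to_use: bool    # was usage mandated for this employee's role?
    satisfaction: int        # self-reported satisfaction with AI workflows (assumed 1-5 scale)


def human_impact_summary(records: list[WeeklyRecord]) -> dict[str, float]:
    """Roll up a few human-impact metrics from weekly records (a sketch, not a standard)."""
    if not records:
        raise ValueError("need at least one record")

    total_saved = sum(r.hours_saved for r in records)
    total_redirected = sum(r.hours_redirected for r in records)

    # Voluntary usage: among employees who were NOT required to use the tools,
    # the share who used them anyway. Mandated users are excluded so that
    # compliance does not inflate the signal.
    optional = [r for r in records if not r.required_to_use]
    voluntary_rate = sum(r.used_ai for r in optional) / len(optional) if optional else 0.0

    return {
        "hours_saved": total_saved,
        "hours_redirected": total_redirected,
        # Fraction of saved time that was actively reallocated, not just freed up.
        "redirect_ratio": total_redirected / total_saved if total_saved else 0.0,
        "voluntary_usage_rate": voluntary_rate,
        "avg_satisfaction": sum(r.satisfaction for r in records) / len(records),
    }


if __name__ == "__main__":
    team = [
        WeeklyRecord(10, 6, used_ai=True, required_to_use=False, satisfaction=4),
        WeeklyRecord(5, 1, used_ai=True, required_to_use=True, satisfaction=3),
        WeeklyRecord(0, 0, used_ai=False, required_to_use=False, satisfaction=2),
    ]
    print(human_impact_summary(team))
```

Keeping `redirect_ratio` separate from raw hours saved reflects the difference between time merely freed up and time actively reallocated, and measuring voluntary usage only among people who weren't mandated to use the tools is one way to separate commitment from compliance.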

The Case Against "Move Fast and Break Things"

There's pressure to move fast on AI. Competitors are investing. The technology is advancing. Waiting feels like falling behind.

But speed without wisdom backfires.

The RAND study found that AI projects fail when organizations focus more on using the latest and greatest technology than on solving real problems for their intended users. The MIT research found that 95% of pilots never scale—they stall in pilot purgatory, impressive demos that never become operational capabilities.

Moving fast without organizational readiness doesn't create speed. It creates expensive pilots that fail, cultural damage that takes years to repair, and cynicism about future AI initiatives. You can't move fast if you have to overcome the organizational scar tissue from the last failed initiative.

What Sustainable AI Transformation Looks Like

Sustainable transformation is fast enough to stay competitive, thoughtful enough to bring people along, and integrated enough to actually stick.

This means investing in organizational readiness before sophisticated AI deployment. It means building capability progressively—quick wins that demonstrate AI as ally, then more complex implementations once trust is established. It means designing for human adoption from the start, not treating it as a problem to solve after the technology is deployed.

The companies that win the AI race won't be the ones who started fastest. They'll be the ones who built adoption that scales and sustains.

The Competitive Window

Yes, there's urgency. Competitors who implement AI effectively will set new customer expectations, attract top talent, and operate more efficiently. Waiting isn't a strategy.

But neither is rushing. The companies that win will be those who move fast and bring their people with them. Speed without adoption isn't speed—it's waste.

The window is real. Use it wisely.

An Invitation

Every organization faces a choice in how it approaches AI:

You can treat AI as a technology to deploy, or as a transformation to lead.

You can treat employees as obstacles to efficiency, or as partners in evolution.

You can measure success in headcount reduction, or in capability amplification.

These aren't just philosophical preferences. They predict outcomes. The "Humans First" approach—AI that elevates human capability rather than replacing human contribution—is the approach that creates sustainable adoption, organizational resilience, and genuine competitive advantage.

It creates organizations where AI adoption strengthens culture rather than straining it. Where teams embrace AI because it makes their work more meaningful. Where transformation builds capacity rather than burning it.

The question isn't "how fast can we deploy AI?"

The question is "how can AI make our people extraordinary?"

That question leads somewhere different. And somewhere better.

Sascha Bosio and Laura Pretsch are the co-founders of bosio.digital, an AI transformation consultancy specializing in human-centered adoption. With 25+ years of digital transformation experience alongside deep expertise in human development and organizational change, they help mid-market companies implement AI that employees actually embrace.

Resources & References

Research Studies:

- RAND Corporation (August 2024). "The Root Causes of Failure for Artificial Intelligence Projects and How They Can Succeed." https://www.rand.org/pubs/research_reports/RRA2680-1.html

- MIT NANDA Initiative (July 2025). "The GenAI Divide: State of AI in Business 2025." Coverage in Fortune: https://fortune.com/2025/08/18/mit-report-95-percent-generative-ai-pilots-at-companies-failing-cfo/

- S&P Global Market Intelligence (2025). AI project failure rates analysis. Coverage in CIO Dive: https://www.ciodive.com/news/AI-project-fail-data-SPGlobal/742590/

Harvard Business Review Articles:

- Jin Li, Feng Zhu, Pascal Hua (November 2025). "Overcoming the Organizational Barriers to AI Adoption." https://hbr.org/2025/11/overcoming-the-organizational-barriers-to-ai-adoption

- Ayelet Israeli, Eva Ascarza (November 2025). "Most AI Initiatives Fail. This 5-Part Framework Can Help." https://hbr.org/2025/11/most-ai-initiatives-fail-this-5-part-framework-can-help

- Oguz A. Acar et al. (August 2025). "Research: The Hidden Penalty of Using AI at Work." https://hbr.org/2025/08/research-the-hidden-penalty-of-using-ai-at-work

Industry Analysis:

- NTT DATA (2024). "Between 70-85% of GenAI Deployment Efforts Are Failing." https://www.nttdata.com/global/en/insights/focus/2024/between-70-85p-of-genai-deployment-efforts-are-failing