The Number That Should Reshape Your AI Strategy

There's a statistic making the rounds in change management circles that should fundamentally alter how every organization approaches AI adoption:

63% of AI implementation challenges stem from human factors, not technical limitations.

This finding comes from Prosci's comprehensive research studying 1,107 professionals across industries—frontline employees, team leaders, and executives. When researchers asked what's actually blocking AI adoption, nearly two-thirds pointed to people issues, not technology problems.

The implications are significant. For every dollar organizations spend on AI technology, they should probably be spending two on preparing their people. But that's not what most organizations do. They invest heavily in platforms, integrations, and pilots—then express confusion when adoption stalls, ROI disappoints, and employees quietly work around the systems designed to help them.

The technology isn't failing. The human strategy is.

And it's happening everywhere. Walk into almost any mid-market company that launched an AI initiative in the past two years, and you'll find variations of the same pattern: tools that work technically but sit unused, pilots that impressed leadership but never scaled, and employees who've developed elaborate workarounds to avoid the systems they're supposed to embrace.

This isn't a technology problem. It's a human problem. And until organizations treat it as such, the failure rates will stay high.

The Failure Rates Are Getting Worse, Not Better

Despite billions invested in AI capabilities and years of organizational learning, the numbers paint a troubling picture:

Only 48% of AI projects make it into production, according to Gartner research. That means more than half of all AI initiatives die somewhere between proof-of-concept and actual deployment.

30% of generative AI projects will be abandoned after proof of concept by end of 2025, Gartner predicts—not because the technology failed, but because of poor data quality, inadequate risk controls, escalating costs, or unclear business value.

42% of companies abandoned most of their AI initiatives in 2025, according to S&P Global's survey of over 1,000 enterprises—a dramatic spike from just 17% in 2024. In one year, the abandonment rate more than doubled.

Meanwhile, McKinsey's 2025 State of AI report reveals the most troubling paradox of all: 88% of organizations now use AI in at least one business function, but only 6% qualify as "high performers" seeing significant bottom-line impact.

Let those numbers sink in. Nearly nine out of ten organizations have adopted AI. Fewer than one in ten are actually succeeding with it.

Everyone's doing AI. Almost no one's doing it well.

The conventional explanation for these failures focuses on technical factors: data quality issues, integration complexity, security concerns, unclear ROI metrics. And yes, these matter. But they're not the primary cause of failure.

As BCG Senior Advisor Mickey McManus tells clients: "Success is 70% people and ways of working, and only 30% technology."

When you look at what's actually blocking AI adoption, the answer isn't better algorithms or more robust infrastructure. It's the humans who have to use these systems—their fears, their fatigue, their skepticism, and the organizational dynamics that shape their behavior.

Why Do AI Projects Fail? The Human Factors Nobody Wants to Discuss

The AI industry has a vested interest in framing failures as technical problems requiring technical solutions. Buy better data infrastructure. Implement more robust security. Upgrade to the enterprise tier. Hire more data scientists.

But Prosci's research tells a different story entirely.

Technical implementation issues account for only 16% of AI challenges. That's roughly a quarter of the share attributed to human factors. The gap isn't even close.

So what are these human factors that account for 63% of the problem? They fall into several interconnected categories that organizations consistently underestimate or ignore entirely.

Fear, Not Resistance

What executives dismissively label "employee resistance" is usually something far more fundamental: existential fear.

When employees encounter AI in their workplace, they don't see a productivity tool. They see a potential replacement. They see their hard-won skills becoming obsolete. They see decades of expertise being compressed into an algorithm that anyone can operate.

This fear isn't irrational or unfounded. Employees read the same headlines about AI replacing jobs. They've watched colleagues at other companies get laid off after automation initiatives. They understand, perhaps better than leadership realizes, that "efficiency gains" often translates to "doing more with fewer people."

The response to this fear is often subtle but devastating to AI initiatives. Employees don't openly rebel—that would be career suicide. Instead, they engage in quiet non-adoption. They find workarounds. They copy AI outputs into documents, edit them extensively, then paste them back—adding time rather than saving it. They keep their spreadsheets running alongside the new system "just in case." They attend the training sessions, nod along, then return to their desks and continue working exactly as they did before.

Prosci's research found that 38% of AI adoption challenges stem from insufficient training—but the deeper issue isn't skills. It's that training without addressing underlying fears is futile. You're teaching someone to swim while they're convinced the water is full of sharks. Until you address the sharks—real or perceived—the swimming lessons won't take.

Change Saturation and Fatigue

Organizations love to launch initiatives. They're far less skilled at recognizing the cumulative toll these initiatives take on the humans who must absorb them.

Prosci's research reveals an alarming finding: 75% of organizations report they are either nearing, at, or past the change saturation point.

Your employees aren't just dealing with AI. They're dealing with the reorganization from last year, the new CRM that replaced the CRM that replaced the one before that, the shift to hybrid work, the return-to-office mandate, the economic uncertainty, the layoffs in adjacent departments, and now—yet another transformation initiative promising to change everything.

AI isn't landing on fresh, receptive ground. It's landing on teams that are exhausted, skeptical, and increasingly immune to promises that "this will make your life easier."

The research on change saturation shows clear patterns. When employees hit their change capacity limit, they don't just slow down—they develop defensive behaviors. They become skilled at appearing to adopt while actually maintaining the status quo. They learn which metrics leadership tracks and optimize for those while ignoring everything else. They master the art of looking busy with the new system while accomplishing nothing through it.

This isn't malicious. It's self-preservation. When every quarter brings a new initiative, experienced employees learn that most will be abandoned within eighteen months anyway. Why invest real effort in adoption when the smart money says this too shall pass?

The Leadership Vacuum

McKinsey's 2025 research uncovered something that should concern every executive team: CEO oversight of AI governance is the single element most correlated with bottom-line impact from AI initiatives.

The correlation is stark. When CEOs directly own AI governance—not just sponsor it, but own it—organizations capture significantly more value. When boards engage substantively with AI strategy, outcomes improve measurably. (For more on building effective oversight structures, see our AI Governance Framework.)

Yet the reality in most organizations falls far short of this standard:

- Only 28% of organizations have direct CEO involvement in AI governance

- Only 15% of boards receive AI-related metrics

- A staggering 66% of board directors report having "limited to no knowledge or experience" with AI

- Nearly one in three directors say AI doesn't even appear on their board agendas

When leadership treats AI as a technology project to be delegated—something for IT or a newly created "AI team" to handle—employees read the signal with perfect clarity. If this were actually important, the CEO would be visibly involved. If this were actually strategic, the board would be paying attention. The absence of senior engagement communicates that AI is optional, peripheral, something to work on when the "real" priorities are handled.

Gartner analyst Rita Sallam captured the dynamic precisely: "After last year's hype, executives are impatient to see returns on GenAI investments, yet organizations are struggling to prove and realize value."

The impatience itself becomes part of the problem. Leadership wants AI returns without AI engagement. They want transformation outcomes without transformation involvement. They want the benefits of change without doing the hard work that change requires.

Pilot Purgatory

The "start small" wisdom that dominates AI implementation advice has created an epidemic of pilots that never scale.

The logic seems sound: begin with a limited proof-of-concept, demonstrate value, then expand. Reduce risk by testing before committing. Learn from small experiments before making big investments.

In practice, this approach has produced organizations trapped in what researchers call "pilot purgatory"—an endless cycle of proofs-of-concept that impress in demos but never achieve enterprise adoption.

McKinsey's research reveals that nearly two-thirds of organizations remain stuck in the pilot stage, having not begun scaling AI across the enterprise despite years of experimentation. They've proven the technology works. They haven't proven, or even seriously tried to prove, that their organizations can absorb it.

The problem with pilots is that they test the wrong things. A successful pilot proves that AI can perform a task in controlled conditions with selected users who volunteered for the experiment. It doesn't prove that your organization has the change capacity to adopt that capability broadly. It doesn't address the fears, fatigue, and leadership gaps that block real adoption. It doesn't build the cultural and structural foundations that meaningful transformation requires.

Gartner predicts 30% of GenAI projects will be abandoned after proof of concept by end of 2025. Not because the pilots failed technically. Because organizations never developed the capability to go beyond them.

Organizational Fragmentation

AI initiatives routinely die in the gaps between organizational silos.

The pattern is predictable. IT owns the technology implementation. HR owns the training. Communications owns the messaging. Operations owns the workflow redesign. Each function executes its piece competently. But nobody owns the integrated whole. Nobody ensures that these pieces connect into a coherent change strategy.

The result is initiatives that work technically but fail organizationally. The AI system is implemented correctly, but employees were never prepared to use it. The training covers the features, but not the mindset shifts required. The communications announce the rollout, but don't address the fears driving resistance. The workflows are redesigned on paper, but nobody changed the incentives that drive actual behavior.

Research consistently identifies "misaligned goals, weak data infrastructure, unclear ownership, and lack of cross-functional support" as the factors that keep companies perpetually stuck in pilot mode. Note that only one of these—data infrastructure—is technical. The rest are organizational.

What Causes AI Implementation to Fail? Lessons from the Research

The organizations that fail at AI adoption share common patterns that have little to do with their technology choices and everything to do with their organizational approach.

Treating AI as a Technology Project

The most fundamental error is categorizing AI adoption as a technology initiative. This framing leads naturally to technology-focused solutions: better platforms, improved integrations, enhanced training on features and functions.

But AI adoption isn't primarily about technology. It's about changing how people work, what they believe about their roles, and how they interact with tools and each other. These are organizational and cultural challenges that require organizational and cultural solutions.

When you treat AI as a technology project, you assign it to technology people. IT departments are excellent at implementing systems. They're not typically equipped to manage organizational change, address employee fears, or navigate political dynamics. The skills that make someone good at system integration don't transfer to the work of helping humans transform how they work.

Optimizing for Speed Over Readiness

Executive impatience consistently undermines AI adoption.

The pressure to show results quickly leads to implementations that skip the foundational work of preparation. Organizations race to deploy pilots, announce initiatives, and demonstrate activity—without building the organizational capacity to absorb what they're deploying.

Speed and readiness exist in tension. Moving fast means accepting that employees won't be fully prepared. Ensuring readiness means accepting that deployment will take longer. Most organizations resolve this tension by prioritizing speed, then expressing surprise when adoption lags.

The research is clear on what happens next. Organizations that rush to deploy spend more time—not less—reaching full adoption. The time they saved in preparation they lose many times over in remediation, re-training, workaround management, and eventual re-implementation.

Communicating at Instead of Engaging With

Most AI communication strategies are fundamentally one-directional. Leadership decides on AI initiatives, then communicates those decisions to the organization. Town halls announce the vision. Emails explain the timeline. Training sessions demonstrate the features.

What's missing is genuine dialogue. Employees learn about AI changes through announcements rather than conversations. Their concerns are addressed in FAQ documents rather than actual exchanges. Their questions are answered by pre-prepared talking points rather than honest engagement.

Employees aren't stupid. They can tell the difference between communication designed to inform and communication designed to manage. When every message feels like it was crafted by legal and approved by PR, trust erodes. When concerns are met with scripted reassurance rather than authentic acknowledgment, skepticism grows.

The organizations that succeed at AI adoption create genuine two-way communication. They don't just announce—they listen. They don't just train—they learn what employees actually need. They don't just manage concerns—they engage with them substantively.

Ignoring the Social Dynamics of Adoption

Individual adoption decisions don't happen in isolation. They're shaped by social context—what colleagues are doing, what seems normal within the team, what behavior is rewarded or ignored.

Gartner's research uncovered a striking finding: 37% of employees don't use AI even when they can, simply because their coworkers aren't using it.

This means adoption strategies that target individuals—training programs, feature communications, usage incentives—miss a crucial dimension. Even if an individual employee is convinced AI would help them personally, they may not adopt if adoption isn't normalized within their social context.

The implications are significant. You can't scale AI adoption by convincing people one at a time. You have to shift social norms. You have to make AI usage visible, normal, and socially validated. You have to create the conditions where using AI is what "people like us" do.

How to Overcome Employee Resistance to AI: What Actually Works

The research points clearly to what separates successful AI adoption from the more common failures. The patterns are consistent and actionable.

Reframe AI as Elevation, Not Efficiency

The language organizations use to introduce AI shapes how employees perceive it.

When you introduce AI as a way to "increase efficiency" or "reduce costs," employees hear the subtext: do more with less, your job is expensive, we're looking to cut headcount. Even if that's not your intent, that's what employees hear—because that's often what "efficiency" has meant in their experience.

When you introduce AI as a way to eliminate the tedious parts of work so people can focus on what they do best, the emotional equation changes. This framing positions AI as serving employees rather than replacing them. It makes AI adoption feel like an upgrade rather than a threat.

This isn't spin or positioning. It's a fundamental strategic choice about what AI is for in your organization. Are you using AI to reduce headcount, or to elevate capability? Are you automating to cut costs, or to free people for higher-value work? Employees can sense the difference, and they respond accordingly. (This is the core principle behind our Humans First approach to AI transformation.)

The most successful organizations we've worked with are explicit about this choice. They commit publicly to using AI for elevation rather than elimination. They back that commitment with concrete decisions—redeploying freed capacity to growth initiatives rather than reducing workforce. They make "AI elevates our people" a visible, repeated, demonstrated principle rather than a talking point.

Address Fear Before Functionality

You cannot train away fear. You have to address it directly.

This means having honest conversations about what AI will and won't change in your organization. It means acknowledging that some roles will evolve significantly—and being clear about how you'll support people through that evolution. It means creating genuine psychological safety for employees to express concerns without being labeled as resistant or difficult.

The organizations that achieve high adoption rates spend significant time in early phases just listening. Not presenting, not training, not convincing—listening. What are people actually worried about? What have they heard about AI that concerns them? What past experiences shape how they're interpreting this initiative?

This listening has to be genuine, not performative. Employees can tell when their concerns are being collected for a report versus actually heard and considered. They can sense whether their input might actually change something, or whether decisions have already been made and this "engagement" is just change management theater.

When fears are addressed directly—acknowledged as legitimate, engaged with honestly, met with concrete commitments—adoption resistance decreases measurably. Employees don't need every fear resolved. They need to feel that their fears are taken seriously.

Build Champions, Not Just Users

Because adoption spreads socially, your strategy can't just target individuals. It has to create the conditions for social proof and peer influence. (For a deeper dive on this approach, see From Skeptics to Champions: Orchestrating Organizational Change.)

The most effective approach is a structured champion model that creates AI advocates at multiple levels:

Executive Champions: Senior leaders who don't just sponsor AI initiatives but visibly use AI themselves. When employees see the CFO actually using AI tools—not just talking about them in town halls—it signals that AI is genuinely valued at the top.

Operational Champions: Middle managers and team leads who translate organizational strategy into daily practice. These champions understand both the strategic intent and the operational reality. They can adapt implementation to local contexts and troubleshoot adoption barriers as they emerge.

Power Users: Peer-level experts who provide on-the-ground support and make AI usage normal within their teams. When a dispatcher sees a respected colleague using the scheduling AI—and getting better results—the calculation changes from "management is forcing this" to "this might actually help me."

This champion network doesn't replace formal training, but it transforms how that training lands. Employees don't just learn features in a classroom—they see peers using those features successfully in real work. They have people to ask when they get stuck. They have social proof that adoption is both possible and beneficial.

Go Comprehensive, Not Incremental

Here's a counterintuitive finding from Prosci's research: larger, more comprehensive AI initiatives tend to go more smoothly than smaller, incremental ones.

The conventional wisdom says to start small—limit risk, prove value, then expand. But research suggests this approach often backfires.

Why? Because treating AI as a minor workflow adjustment misses the cultural and structural changes necessary for meaningful adoption. You're trying to sneak transformation past the organization instead of engaging with it directly. You're implementing a tool when what you need is organizational change.

Small pilots don't generate the organizational attention, resource commitment, or cultural shift required for lasting adoption. They prove that AI can work technically while avoiding the harder questions: Can our organization absorb this? Are our people ready? Do our processes support it? Is our culture compatible?

Comprehensive initiatives force these questions. They require visible leadership engagement. They demand cross-functional coordination. They create the organizational muscle that sustains adoption beyond the initial implementation.

This doesn't mean boiling the ocean. It means being honest about what AI adoption actually requires—treating it as the organizational transformation it is, not the software upgrade you might wish it were.

Create Psychological Safety for Experimentation

Organizations with smooth AI implementations share a distinctive cultural trait: they actively encourage employees to experiment with AI capabilities. Organizations struggling with implementation actively discourage exploration.

This difference in experimentation culture represents the single most significant factor distinguishing AI adoption success from failure in Prosci's research.

When employees feel safe to try AI tools, make mistakes, and learn publicly, adoption accelerates naturally. When they feel that errors will be noticed, judged, or punished, they retreat to familiar behaviors where they know they can succeed.

Creating psychological safety requires more than announcing that "it's okay to make mistakes." It requires leaders who visibly experiment and share their own learning curves. It requires systems that don't punish exploratory behavior. It requires genuine tolerance for the inefficiency that learning always involves.

What Successful AI Adoption Actually Looks Like

McKinsey's research identified what separates the 6% of high-performing organizations from the rest. The patterns are consistent and replicable.

Visible, Engaged Leadership

High performers are three times more likely than their peers to have senior leaders who demonstrate genuine ownership of AI initiatives. Not delegation. Not sponsorship. Ownership.

This means CEOs who engage directly with AI governance decisions. Boards that receive and discuss AI metrics. Executive teams that use AI tools themselves rather than delegating to subordinates.

Microsoft's 2025 AI adoption study found that 100% of organizations at the advanced stage of AI adoption had leadership that clearly communicated AI's importance and fostered collaboration. Not most organizations—all of them.

When leaders treat AI as strategically important, that signal cascades through the organization. Resources follow. Attention follows. Behavior follows.

Workflow Redesign, Not Overlay

The single biggest factor affecting financial impact from AI isn't the technology itself—it's whether organizations redesign workflows rather than just layering AI onto existing processes.

Adding AI tools to existing workflows typically produces marginal gains at best. The old process wasn't designed for AI capabilities. The handoffs don't work cleanly. The human steps that made sense before AI now create bottlenecks.

Organizations that capture real value rethink workflows from scratch with AI capabilities in mind. They ask: if we were designing this process today, knowing what AI can do, how would we structure it? This often means eliminating steps, changing roles, redefining handoffs—genuine redesign rather than tool addition.

This is harder than overlay. It requires deeper engagement with how work actually happens. It disrupts existing patterns that people have optimized over years. It demands organizational change, not just technology change.

Transformative Ambition, Not Just Efficiency

While 80% of all organizations cite efficiency as their AI objective, high performers are significantly more likely to also pursue growth and innovation. They're not just trying to do the same things cheaper. They're asking how AI enables different things entirely.

This ambition shapes everything downstream. When AI is about efficiency, success means cost reduction—and employees correctly perceive threat. When AI is about growth and innovation, success means expansion—and employees can see how AI creates opportunity.

The organizations capturing the most value from AI are using it to enter new markets, serve customers differently, create new products and services. They're treating AI as a strategic capability, not a cost optimization tool. (For more on measuring value beyond cost savings, see Beyond the ROI Question: Measuring AI's Human-Centered Value.)

Integration Across Functions

High performers integrate AI strategy across organizational functions rather than isolating it in IT or a dedicated AI team.

This means AI governance that includes operations, HR, finance, and business units—not just technology leaders. It means implementation teams with change management expertise alongside technical skills. It means treating AI adoption as an organizational initiative rather than a technology project.

The cross-functional nature of successful AI adoption reflects the cross-functional nature of the challenges. Technology alone can't solve fear, fatigue, or leadership gaps. You need human expertise alongside technical expertise, organizational skills alongside implementation skills.

A Framework for Human-Centered AI Adoption

Based on our work with mid-market companies (organizations with 50 to 5,000 employees that need AI transformation without the timelines and budgets of Big 4 engagements), we've developed an approach that addresses the 63% directly.

Phase 1: Understand Before You Implement

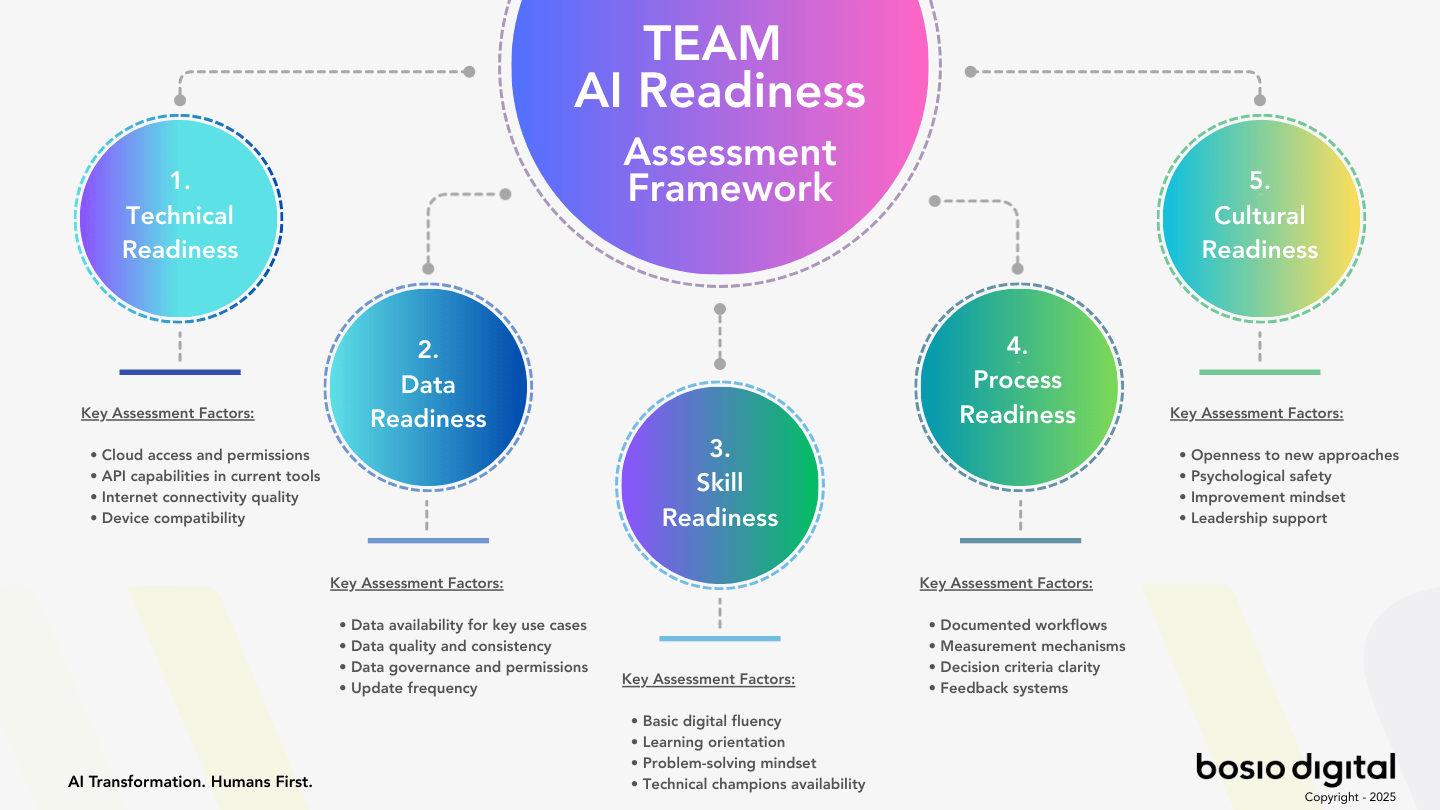

Before selecting any technology, assess your organizational readiness across five dimensions. (For a detailed assessment framework, see Is Your Business Ready for AI?)

Strategy & Vision: Is there genuine alignment on why AI matters for your organization? Not AI in general—AI for you specifically. What problems will it solve? What opportunities will it enable? Is leadership aligned on these answers?

Data & Infrastructure: Can you actually feed the systems you're planning to implement? Where does your data live? How clean is it? What integration work is required?

Technical Capabilities: Do you have the skills to implement and maintain AI systems? If not, how will you acquire them—through hiring, partnerships, or development?

People & Culture: What's the real appetite for change in your organization? How much change capacity remains? What's the history of technology adoption? Where will resistance likely emerge?

Governance & Ethics: How will you make decisions about AI responsibly? What oversight structures exist? How will you handle the ethical dimensions of automation?

Most organizations skip directly to technical capabilities and data infrastructure. The ones that succeed spend substantial time on people, culture, and leadership alignment before touching technology.
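To make the five-dimension assessment concrete, here is a minimal sketch of how the scores might be recorded and the weakest areas surfaced. The 1-to-5 scale, the `minimum` threshold of 3, and the function name `readiness_gaps` are assumptions for illustration, not part of any published assessment instrument.

```python
# The five dimensions named in the text.
DIMENSIONS = (
    "Strategy & Vision",
    "Data & Infrastructure",
    "Technical Capabilities",
    "People & Culture",
    "Governance & Ethics",
)


def readiness_gaps(scores: dict[str, int], minimum: int = 3) -> list[str]:
    """Return the dimensions scoring below `minimum`, weakest first.

    `scores` maps each dimension to a 1-5 rating (an assumed scale).
    """
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"Missing scores for: {', '.join(missing)}")
    gaps = [d for d in DIMENSIONS if scores[d] < minimum]
    # sorted() is stable, so tied dimensions keep their listed order.
    return sorted(gaps, key=lambda d: scores[d])


if __name__ == "__main__":
    # Hypothetical ratings for an organization that is strong on technology
    # but has done little work on people and governance.
    example = {
        "Strategy & Vision": 4,
        "Data & Infrastructure": 3,
        "Technical Capabilities": 4,
        "People & Culture": 2,
        "Governance & Ethics": 1,
    }
    for dimension in readiness_gaps(example):
        print(f"Needs attention before implementation: {dimension}")
```

The point of the sketch is only that every dimension gets a score before technology is selected, so that a strong technical rating cannot hide a weak rating on people or governance.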

Phase 2: Discover Use Cases Through Collaboration

The best AI use cases don't come from consultants or technology vendors. They come from the people doing the work every day. (For inspiration on where to start, see High-Impact, Low-Complexity: 15 Most Valuable AI Use Cases.)

Cross-functional workshops that surface pain points, map inefficiencies, and brainstorm solutions generate better use cases than top-down analysis. People who live with processes daily understand their friction points in ways that external observers can't.

But collaborative discovery does more than improve use case quality. It builds ownership. People support what they help create. Employees who participated in identifying AI opportunities feel invested in making those implementations succeed.

This discovery process typically surfaces 30-50 initial ideas, which refinement reduces to 15-25 viable opportunities. Priority frameworks help identify which opportunities offer quick wins—high value, low complexity—versus strategic bets that require more investment.
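One simple way to apply such a priority framework is a value/complexity grid. The sketch below assumes 1-to-5 scores assigned by the workshop group and a midpoint threshold of 3. The labels "quick win" and "strategic bet" come from the text, while "fill-in" and "deprioritize" are placeholder labels added here for the other two quadrants.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    value: int       # 1 (low) to 5 (high), assumed scale
    complexity: int  # 1 (low) to 5 (high), assumed scale


def classify(use_case: UseCase, threshold: int = 3) -> str:
    """Place a use case in a value/complexity quadrant."""
    high_value = use_case.value >= threshold
    low_complexity = use_case.complexity < threshold
    if high_value and low_complexity:
        return "quick win"
    if high_value:
        return "strategic bet"
    if low_complexity:
        return "fill-in"       # placeholder label, not from the text
    return "deprioritize"      # placeholder label, not from the text


def prioritize(use_cases: list[UseCase]) -> list[tuple[str, str]]:
    """Rank by value (highest first), then complexity (lowest first)."""
    ranked = sorted(use_cases, key=lambda u: (-u.value, u.complexity))
    return [(u.name, classify(u)) for u in ranked]


if __name__ == "__main__":
    # Hypothetical workshop ideas; names and scores are invented.
    ideas = [
        UseCase("Invoice data entry", value=4, complexity=2),
        UseCase("Demand forecasting", value=5, complexity=5),
        UseCase("Meeting notes summary", value=2, complexity=1),
        UseCase("Custom pricing engine", value=2, complexity=5),
    ]
    for name, label in prioritize(ideas):
        print(f"{name}: {label}")
```

In practice the scores matter less than who assigns them: having the workshop participants rate value and complexity themselves keeps the prioritization tied to the ownership the discovery process is meant to build.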

Phase 3: Build Change Capacity Alongside Technology

For every technology implementation milestone, there should be a corresponding change management milestone:

- When pilots launch, champion networks should be trained and active.

- When integrations complete, communication strategies should be in market.

- When rollouts begin, training programs should be delivering.

- When optimization starts, feedback loops should be functioning.

The change work isn't parallel to the technology work—it's integrated with it. Treating them as separate workstreams creates the gaps where adoption fails.
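The milestone pairing described above can be tracked as a single plan rather than two separate ones. The following sketch is illustrative only: the pairs are taken from the text, but the function name `unmet_change_milestones` and the idea of passing milestone names as sets are assumptions, not a prescribed tool.

```python
# Technology milestone -> change management milestone, as paired in the text.
PAIRED_MILESTONES = {
    "pilots launch": "champion networks trained and active",
    "integrations complete": "communication strategies in market",
    "rollouts begin": "training programs delivering",
    "optimization starts": "feedback loops functioning",
}


def unmet_change_milestones(
    tech_reached: set[str], change_done: set[str]
) -> list[str]:
    """List change milestones that lag behind the technology work.

    A change milestone is unmet when its paired technology milestone
    has been reached but the change milestone is not yet done.
    """
    unknown = tech_reached - set(PAIRED_MILESTONES)
    if unknown:
        raise ValueError(f"Unknown technology milestones: {sorted(unknown)}")
    return [
        change
        for tech, change in PAIRED_MILESTONES.items()
        if tech in tech_reached and change not in change_done
    ]


if __name__ == "__main__":
    # Hypothetical status: rollout has started, but training has not.
    lagging = unmet_change_milestones(
        tech_reached={"pilots launch", "rollouts begin"},
        change_done={"champion networks trained and active"},
    )
    for milestone in lagging:
        print(f"Change work is behind: {milestone}")
```

Keeping both kinds of milestone in one structure reflects the argument here: a technology milestone is not treated as reached in any useful sense until its change counterpart is also in place.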

This integration requires different skills than most technology implementations involve. Change management, organizational development, communication strategy—these capabilities need to be present alongside technical implementation skills.

Phase 4: Design Workflows That Elevate

Every AI workflow should answer one fundamental question: Does this make people's work more meaningful?

If AI is adding steps, creating oversight burdens, or automating the interesting parts while leaving the tedious ones, adoption will suffer. The workflow might be technically impressive while being experientially worse for the humans involved.

Design for elevation means thinking carefully about what work AI should handle versus what work humans should retain. Generally, AI should absorb the repetitive, the routine, and the high-volume, freeing humans for judgment, creativity, and relationships.

The test is simple: After AI implementation, do employees feel their jobs got better or worse? Are they doing more meaningful work or less? Are they more engaged or more frustrated?

Why This Matters Now

The window for thoughtful AI adoption is narrowing.

Early movers who get this right are building organizations where AI amplifies human capability—where employees embrace rather than resist, where adoption accelerates rather than stalls, where AI becomes genuine competitive advantage rather than expensive distraction.

Organizations that get it wrong face a different trajectory. They'll join the 42% abandoning their initiatives, having spent millions on technology that sits unused while competitors pull ahead. They'll watch their best employees—the ones with options—leave for organizations that implement change more thoughtfully.

The 63% statistic isn't a problem to solve with better technology. It's a signal that the entire approach needs to change.

AI transformation is fundamentally a human transformation. The organizations that thrive will be those that solve the human equation first—recognizing that successful AI adoption is a change management challenge that requires proven methodologies, not just better technology.

The technology will keep improving. The question is whether your organization will be ready to use it.

bosio.digital helps mid-market companies implement AI transformation that actually gets adopted. Our "Humans First" methodology combines 25+ years of digital transformation experience with deep expertise in how humans actually change.

Sources

- Prosci, "Why AI Transformation Fails," 2025

- McKinsey & Company, "The State of AI," November 2025

- Gartner, "Predicts 30% of Generative AI Projects Will Be Abandoned," July 2024

- Gartner, "Lack of AI-Ready Data Puts AI Projects at Risk," February 2025

- S&P Global Market Intelligence, Enterprise AI Survey, 2025

- RAND Corporation, "Why AI Projects Fail and How They Can Succeed," 2024

- BCG, "AI at Work 2025: Momentum Builds, but Gaps Remain"

- Microsoft, AI Adoption Study, 2025

- PwC, Global Workforce Hopes and Fears Survey, 2025