The Paradox That Should Change Your AI Strategy

Here's a statistic that reveals how fundamentally wrong most organizations get AI readiness:

Only 14% of mid-market organizations have achieved what researchers call "full data readiness." Yet 91% have already adopted generative AI in some form.

Read those numbers again. The vast majority of companies using AI today don't have "ready" data by traditional standards. They started anyway.

This isn't recklessness. It's recognition of a truth that the data readiness narrative obscures: waiting for perfect data is itself a form of failure.

The implications are significant. For every organization delaying AI adoption because its "data isn't ready," there's a competitor moving forward with equally messy data, and learning faster because of it. The companies capturing AI value aren't the ones with pristine databases. They're the ones who stopped waiting for conditions that may never arrive.

BCG's research makes the case starkly: 74% of companies struggle to achieve and scale value from AI initiatives. But the primary culprit isn't bad data. It's paralysis. Companies that allocate 50-70% of their timeline to data preparation before touching AI consistently underperform companies that improve data quality while implementing solutions.

The "data readiness" conversation has become the most expensive form of procrastination in business today.

The Fear Behind the Objection

Walk into almost any mid-market company considering AI, and you'll hear variations of the same conversation:

"We'd love to move forward with AI, but our data isn't ready."

"We need to clean up our CRM before we can do anything meaningful."

"Maybe next year, once we've sorted out the data infrastructure."

These statements sound reasonable. They sound prudent. They're also, increasingly, how companies talk themselves out of competitive advantage.

The data quality concerns aren't fabricated. Customer records really are scattered across multiple systems. Product information really does live in spreadsheets that haven't been updated since 2019. The CRM really has been "creatively" used for years in ways that would horrify any data architect.

But here's what the research reveals: these conditions describe nearly every organization. The companies succeeding with AI don't have notably better data. They have a different relationship with imperfection.

What "Data Readiness" Actually Means (And What It Doesn't)

Let's be honest about what's happening when leaders say "our data isn't ready."

Sometimes it's a genuine technical assessment. More often, it's a socially acceptable way to express deeper concerns: fear of failure, uncertainty about where to start, worry about disrupting existing processes, or simply not knowing what AI implementation actually requires.

These are legitimate concerns. But they're people concerns, not data concerns. And they deserve to be addressed directly rather than hidden behind technical-sounding objections.

So what does data readiness actually require? The answer is far less demanding than the industry would have you believe.

What you actually need to start:

- Data that's "good enough" for a specific use case (not perfect across all systems)

- Basic access controls so you know who can see what

- Someone who understands both the business problem and the data available

- Willingness to learn and iterate

What you don't need:

- A fully integrated data warehouse

- Complete data cleansing across all systems

- A team of data scientists

- Enterprise-grade AI infrastructure

- Perfect data governance frameworks

The distinction matters enormously. The first list describes starting conditions. The second describes a mature state you build toward over time—ideally, while you're already getting value from AI.

The Real Barriers Hiding Behind "Data Readiness"

When companies dig into why AI projects actually fail, a surprising pattern emerges. According to BCG's analysis of AI implementations across industries, roughly 70% of AI challenges stem from people and process issues and about 20% from technology and data. Only 10% involve algorithms or data science problems.

Let that sink in. The overwhelming majority of AI failures have nothing to do with data quality.

What actually derails AI initiatives?

Change resistance. Employees who weren't brought along, who fear replacement, who see AI as a threat rather than a tool. This is a human challenge, not a data challenge. Understanding how to orchestrate organizational change matters far more than data cleanliness.

Unclear use cases. Organizations that implement AI because they feel they should, without identifying specific problems worth solving. Knowing which use cases actually deliver value is a strategy challenge, not a data challenge.

Missing champions. Projects that lack someone who bridges the gap between technical possibility and business reality. This is a leadership challenge, not a data challenge.

Governance gaps. Uncertainty about who makes decisions, who's accountable, what's allowed. Having a clear AI governance framework is an organizational challenge, not a data challenge.

Expectation mismatches. Leaders who expect magic, employees who expect perfection, timelines that assume no learning curve. This is a communication challenge, not a data challenge.

Notice what's not on this list? "We had clean data but the AI still failed."

The data readiness narrative has become a convenient distraction from the harder work of organizational change. It's easier to point at messy CRM records than to admit you don't know how to get your sales team excited about new tools. It's simpler to request another data audit than to figure out which use cases actually matter for your business.

The Companies Getting It Right

Research from MIT examining 300 enterprise AI initiatives found a striking pattern. Companies that succeed with AI share common characteristics—and pristine data isn't among them.

They start narrow. Rather than attempting enterprise-wide AI transformation, successful companies identify one specific, well-defined problem. They choose use cases where data quality is "good enough"—not perfect, but sufficient. They prove value in weeks, not years.

They partner rather than build. MIT's research found that 67% of AI implementations using solutions from specialized vendors succeed, compared to only 33% of internally built solutions. The instinct to create something custom often adds complexity without adding value.

They invest in people first. Vanguard Group attributed $500 million in AI ROI partly to its universal AI training program: 50% of employees have completed its AI Academy. The companies winning with AI treat capability building as a prerequisite, not an afterthought.

They improve data alongside implementation. This is crucial. The most successful organizations don't wait for perfect data. They start with what they have, learn what data quality actually matters for their use cases, and improve targeted areas based on real experience rather than theoretical requirements.

One manufacturing company started using AI to analyze equipment maintenance logs—data that lived in inconsistent formats across three systems. Rather than spending months standardizing everything first, they began with a single production line. The AI flagged patterns they'd missed, predicted two equipment failures that would have caused costly downtime, and generated immediate ROI. Now they're systematically cleaning data across other lines, with clear justification for the investment and practical understanding of what quality means for their specific needs.

The "Good Enough" Principle

Here's what twenty-five years of digital transformation work has taught us: the pursuit of perfection is often the enemy of progress.

This doesn't mean quality doesn't matter. It means quality is contextual. The data standards for a financial trading algorithm are different from those for customer email drafts. The accuracy requirements for medical diagnosis differ from those for meeting summaries.

Successful organizations ask different questions than struggling ones:

Struggling organizations ask: "Is our data ready for AI?"

Successful organizations ask: "What's the minimum data quality needed for this specific use case to deliver value?"

Struggling organizations ask: "How do we clean all our data?"

Successful organizations ask: "Which data matters most for the problem we're solving first?"

Struggling organizations ask: "When will we be ready to start?"

Successful organizations ask: "What can we start with today, and what will we learn by starting?"

The shift from abstract readiness to specific sufficiency changes everything. It transforms an indefinite delay into a concrete decision. It replaces paralysis with action.

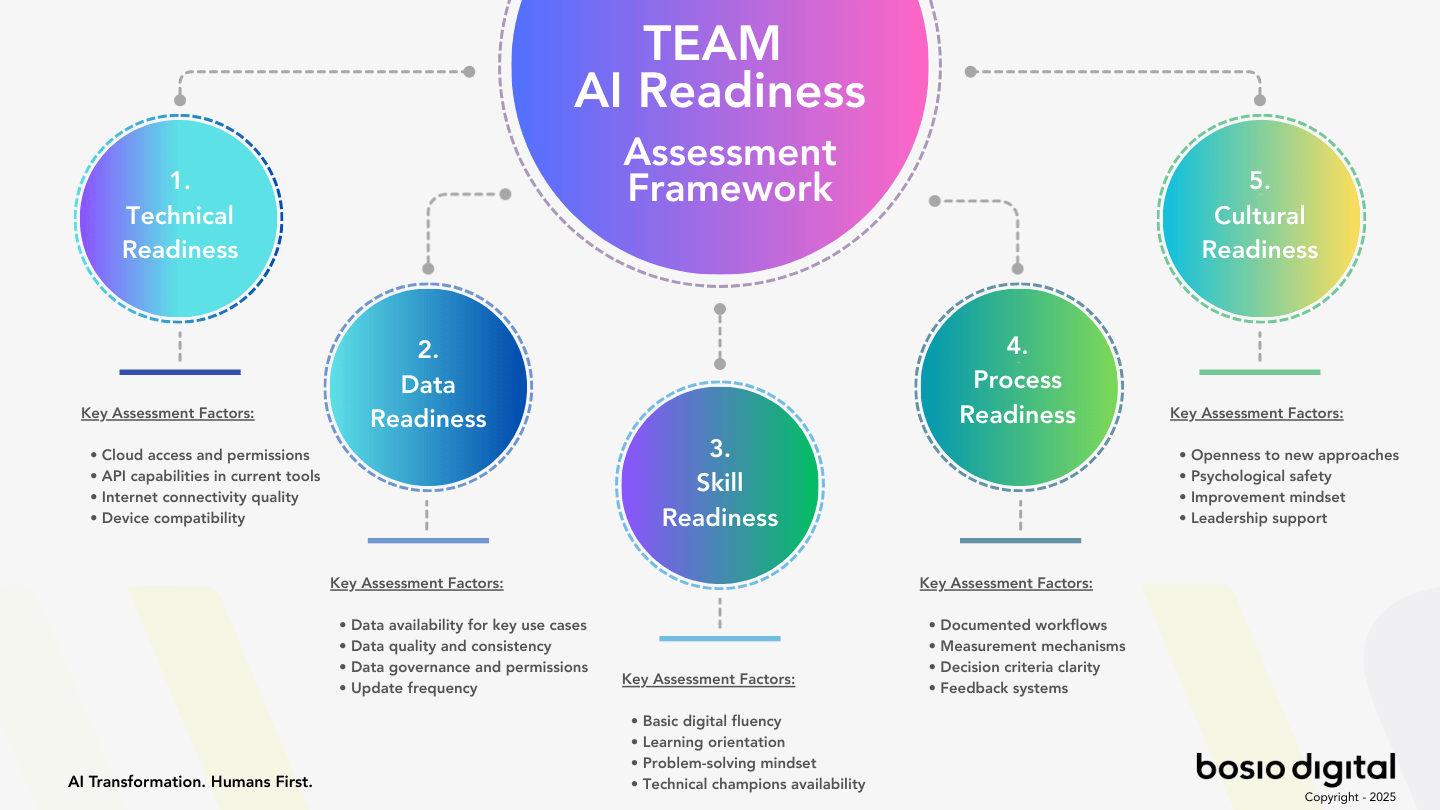

A Framework for Realistic Data Assessment

If you're wondering whether your organization can start with AI, here's a practical framework. It takes about an hour and replaces months of analysis paralysis. (For a more comprehensive evaluation, see our AI readiness assessment guide.)

Step 1: Identify One Problem Worth Solving

Not "implement AI." One specific business problem where AI might help. Customer response times. Document summarization. Lead qualification. Equipment maintenance prediction. Keep it narrow.

Step 2: Map the Data You'd Need

For that one problem, what data would an AI system need? Usually, this is far less than you'd expect. A customer response AI needs access to past tickets and responses—not your entire data warehouse.

Step 3: Assess Availability, Not Perfection

Can you access that data? In what form? Don't ask "is it perfect?" Ask "is it usable?" If customer tickets exist in your support system, even inconsistently categorized, that's a starting point.

Step 4: Identify the 20% That Matters

Within your data, what quality issues would actually break the use case versus merely reduce its effectiveness? A product description AI can work with inconsistent formatting. It can't work with descriptions that are completely wrong. Focus cleanup efforts on what genuinely matters.
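For readers who want to try Steps 3 and 4 against a real export, here is a minimal Python sketch of a usability check on a sample of support tickets. The field names (`body`, `resolution`, `category`, `product`) and the split between "blocking" and "degrading" gaps are illustrative assumptions, not a standard schema; swap in the fields that matter for the use case you chose in Step 1.

```python
from dataclasses import dataclass

# Hypothetical field lists for a customer-response use case (assumptions,
# not a standard schema). A missing BLOCKING field makes a record unusable;
# a missing DEGRADING field only reduces how much context the AI gets.
BLOCKING_FIELDS = ("body", "resolution")
DEGRADING_FIELDS = ("category", "product")


@dataclass
class UsabilityReport:
    total: int
    usable: int
    degraded: int

    @property
    def usable_share(self) -> float:
        return self.usable / self.total if self.total else 0.0


def is_present(value) -> bool:
    """Treat None and blank strings as missing."""
    return value is not None and str(value).strip() != ""


def assess(records: list[dict]) -> UsabilityReport:
    usable = degraded = 0
    for record in records:
        if not all(is_present(record.get(f)) for f in BLOCKING_FIELDS):
            continue  # blocking gap: this record can't support the use case
        usable += 1
        if not all(is_present(record.get(f)) for f in DEGRADING_FIELDS):
            degraded += 1  # still usable, just with less context
    return UsabilityReport(total=len(records), usable=usable, degraded=degraded)


if __name__ == "__main__":
    sample = [
        {"body": "Login fails", "resolution": "Reset password",
         "category": "Auth", "product": "Portal"},
        {"body": "Invoice wrong", "resolution": "Credit issued", "category": ""},
        {"body": "App crashes", "resolution": None, "category": "Mobile"},
        {"body": "Slow export", "resolution": "Upgraded plan", "product": "Reports"},
    ]
    report = assess(sample)
    print(f"{report.usable}/{report.total} usable ({report.usable_share:.0%}), "
          f"{report.degraded} with degrading gaps")
```

Run as written, it reports 3 of 4 tickets usable (75%), 2 of them with degrading gaps: the ticket with no resolution is excluded, while the two with missing metadata stay in. The blocking list is where cleanup effort belongs first; the degrading list can wait until the pilot shows it matters.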

Step 5: Define "Good Enough" Criteria

What accuracy would make this AI useful? It doesn't need to be perfect. If AI-drafted emails go through human review and editing anyway, 80% accuracy might be transformative. You're not building autonomous systems; you're building tools that augment human judgment.
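A "good enough" criterion is easiest to hold to when it is written down as a number before the pilot starts. The Python sketch below assumes reviewers tag each AI draft as `accepted`, `edited`, or `rejected`, and that lightly edited drafts still count as useful; the labels and the 0.8 default are illustrative assumptions based on the email example above, not a recommended benchmark.

```python
from collections import Counter

# Hypothetical reviewer labels for AI-drafted emails in the pilot.
# "edited" counts as useful because the reviewer still saved time.
USEFUL_LABELS = {"accepted", "edited"}


def useful_share(verdicts: list[str]) -> float:
    """Fraction of drafts a reviewer could send as-is or after light edits."""
    if not verdicts:
        return 0.0
    counts = Counter(verdicts)
    return sum(counts[label] for label in USEFUL_LABELS) / len(verdicts)


def meets_good_enough(verdicts: list[str], threshold: float = 0.8) -> bool:
    """Compare the pilot result against the criterion agreed before launch."""
    return useful_share(verdicts) >= threshold


if __name__ == "__main__":
    pilot = ["accepted"] * 9 + ["edited"] * 8 + ["rejected"] * 3
    print(f"useful: {useful_share(pilot):.0%}, "
          f"good enough: {meets_good_enough(pilot)}")
```

With 9 accepted, 8 edited, and 3 rejected drafts, the pilot scores 85% and clears the bar. Fixing the threshold up front keeps the go/no-go decision from drifting once results arrive.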

Step 6: Run a Pilot

Not a proof of concept that lives in a sandbox forever. A real pilot with real data, real users, and real outcomes to measure. Two weeks. One team. One problem.

This framework typically reveals that organizations are far more ready than they believed. The data exists. It's messy but usable. The real work is selecting the right starting point and committing to learn.

The Cost of Waiting

While companies wait for data perfection, the competitive landscape shifts beneath them.

Customer expectations evolve. Your competitors using AI are responding faster, personalizing better, and solving problems more efficiently. Customers don't know it's AI—they just know the experience is better. When they come to you, the contrast is noticeable.

Talent migrates. The best people want to work with modern tools. They want organizations that embrace innovation, not ones mired in perpetual preparation. Every month of waiting is a month your best employees wonder if they're in the right place.

The gap widens. AI implementation isn't just about the tool—it's about organizational learning. Companies starting now are building capabilities, developing intuition, making mistakes and learning from them. Companies waiting are falling further behind in ways that data cleansing won't solve.

Costs increase. Gartner found that total cost of ownership for AI initiatives often exceeds initial expectations by 40-60%. But here's the thing: those costs don't decrease by waiting. They increase. Because while you wait, you're not building internal expertise, not learning what works for your specific context, not developing the organizational muscles that make implementation smoother. (Understanding how to measure AI ROI helps make the case for starting now.)

The data readiness delay isn't neutral. It's actively harmful.

What Actual Preparation Looks Like

We're not suggesting organizations throw caution to the wind and implement AI randomly. Thoughtful preparation matters. It just looks different from the "clean all data first" approach that keeps companies stuck.

Identify your AI champion. Not necessarily a technical person. Someone who understands business processes deeply, can bridge communication between teams, and has the organizational credibility to drive change. This person matters more than your data quality.

Build basic governance. Who decides which AI tools to use? Who's accountable for outcomes? What data can be used for what purposes? These don't need to be elaborate frameworks—they need to be clear enough to enable decision-making.

Prepare your people. This is where most organizations underinvest. Broad-based AI literacy training. Clear communication about how AI will affect roles. Psychological safety to experiment and fail. The companies achieving ROI from AI have treated this as foundational.

Select the right first problem. Not the most important problem. Not the most complex problem. The right first problem: narrow enough to execute quickly, valuable enough to justify attention, low-risk enough to survive imperfect execution, and visible enough to build momentum.

Plan for iteration, not perfection. Your first AI implementation won't be perfect. That's not failure—that's learning. Build in review cycles. Expect to adjust. Treat the pilot as an experiment that generates insight, not a test you need to pass.

This preparation typically takes 2-4 weeks, not 18 months. It addresses the actual barriers to success while maintaining momentum.

The Human Side of Data Readiness

Here's what we've observed after helping dozens of organizations navigate AI adoption: the data readiness conversation is often a proxy for deeper organizational anxiety.

Leaders worry about making expensive mistakes. Employees worry about their jobs. IT teams worry about security. Everyone worries about what happens when things go wrong.

These fears are valid. They deserve attention. But they don't get resolved by cleaning data. They get resolved by engaging with them directly.

When we work with organizations, we spend as much time on psychological readiness as technical readiness. Not because we're soft-hearted consultants who care about feelings—because the research is unambiguous: organizational factors determine AI success far more than technical factors.

Companies that position AI as a tool that elevates human work, rather than replaces it, see dramatically higher adoption. Teams that feel involved in AI decisions, rather than subjected to them, engage more constructively. Leaders who acknowledge uncertainty while maintaining direction build more trust than those who promise false certainty.

The "Humans First" principle isn't just ethics. It's effectiveness.

Starting This Week

If you've been waiting for data readiness, here's a challenge: what could you start this week?

Not a massive initiative. Not a complete transformation. One small experiment.

Could you use an AI tool to draft customer communications that humans review and send? Could you analyze one month of support tickets to identify patterns? Could you summarize lengthy documents for faster review?

None of these require perfect data. All of them generate learning. Each one moves you from theoretical readiness to practical capability.

The companies that will lead in the AI era aren't the ones with the cleanest data. They're the ones who started before they felt ready, learned faster than their competitors, and built organizational capabilities that compounded over time.

Your data will never be perfect. But it's probably good enough to begin.

The question isn't whether you're ready. It's whether you're willing to start.

Sources

- Analytics8. "Solving the Data Readiness Conundrum: Best Practices for Excelling with AI and Advanced Analytics." (2024)

- Boston Consulting Group. "AI Adoption in 2024: 74% of Companies Struggle to Achieve and Scale Value." BCG press release. (October 2024)

- Cherre Research. "CRE and AI Readiness." (December 2025)

- PwC. "Cloud and AI Business Survey: How Top Performers Achieve AI Advantages." (2024)

- BARC. "Lessons From the Leading Edge: Data Quality as Top AI Challenge." (2025)

- MIT Sloan Management Review. "Scaling AI Results: Strategies from MIT Sloan." (2025)

- Informatica. "CDO Insights: The Surprising Reason Most AI Projects Fail." (2025)

- McKinsey & Company. "The State of AI in 2024: Generative AI's Breakout Year." (2024)

- Cisco. "AI Readiness Index: How Organizations Are Preparing for AI." (2024)