Aligned Incentives, Redesigned Processes, AI-Ready Culture

What separates the 5% of AI initiatives that succeed from the 95% that stall?

It's not better algorithms. It's not bigger budgets. It's not earlier adoption.

It's what they build before they deploy.

Harvard Business School professors recently identified what successful AI transformations have in common: "organizational scaffolding to bridge technical potential and business impact." Technology enables progress, they noted, "but without aligned incentives, redesigned decision processes, and an AI-ready culture, even the most advanced pilots won't become durable capabilities."

There it is. The blueprint. Three pillars that determine whether AI adoption succeeds or fails:

- Aligned incentives — Do the people expected to adopt AI have reasons to adopt it?

- Redesigned processes — Have you changed how work flows, not just added AI to existing workflows?

- AI-ready culture — Is your organization psychologically prepared for this change?

Most companies invest heavily in AI technology and barely at all in these three pillars. Then they wonder why adoption stalls, pilots don't scale, and the roughly 80% AI project failure rate cited by RAND keeps holding.

This is the blueprint for building organizations where AI adoption actually works.

Why the Foundation Always Gets Skipped

Before we build, let's understand why most organizations skip this work entirely.

The Visibility Problem

Technology is tangible. You can demo it. Screenshot it. Show the board a working prototype. Executives love funding things they can see and measure.

Organizational scaffolding is invisible. Culture doesn't fit on a slide. Incentive alignment doesn't have a dashboard. Process redesign looks like meetings and documents, not progress.

So when budgets get allocated, the visible technology gets funded. The invisible foundation gets a line item that reads "change management" with 5% of the budget and no executive sponsor.

The Timeline Pressure

Competitors are moving. The board is asking about AI strategy. There's genuine urgency.

Building scaffolding takes time. It requires conversations, assessments, and organizational work that feels slow compared to deploying technology.

So companies skip it. "We'll figure out adoption after we deploy." This feels faster. It costs more.

The RAND Corporation's research found that AI projects fail at twice the rate of traditional IT projects—not because the technology is harder, but because the organizational complexity is greater. Skipping the foundation doesn't save time. It guarantees you'll spend that time later on failed adoption, rework, and rebuilding trust.

The Expertise Gap

Technical teams know how to build AI systems. They typically don't know how to build organizational readiness.

So change management gets assigned sideways—to HR as a communications exercise, to training as a curriculum problem, to project management as a checklist item. Nobody owns it as the strategic priority it actually is.

When scaffolding is everyone's side project, it's nobody's main project. And it doesn't get built.

The Incentive Irony

Here's the irony: the same incentive misalignment that kills AI adoption also kills investment in fixing incentive misalignment.

Project managers are measured on deployment timelines, not adoption rates. Vendors are measured on implementation milestones, not business outcomes. The people accountable for "AI success" often aren't accountable for the organizational scaffolding that creates success.

So scaffolding becomes an afterthought. And the 80% failure rate continues.

Pillar 1: Aligned Incentives

The first pillar is the most overlooked: do the people expected to adopt AI have any reason to adopt it?

The Misalignment Nobody Talks About

In most AI initiatives, there's a fundamental conflict that nobody addresses directly:

Leadership incentives: Efficiency gains. Cost reduction. Doing more with less. Sometimes, explicitly or implicitly, headcount reduction.

Employee incentives: Job security. Professional identity. Manageable workload. Career progression.

These are often in direct opposition. Leadership sees AI as a way to improve margins. Employees see AI as a threat to their livelihood.

Everyone knows this tension exists. Almost nobody addresses it openly. And then organizations are surprised when employees don't enthusiastically adopt tools they perceive as designed to eliminate their jobs.

What "Aligned Incentives" Actually Means

Incentive alignment isn't about convincing employees that what's good for the company is good for them. That's spin, and people see through it.

Real alignment means designing the AI initiative so that adoption genuinely benefits employees—and making that benefit visible and credible.

"AI will help the company" is not a motivator. Companies benefit from lots of things that don't benefit employees.

"AI will make your job better" can be a motivator—if it's true and if employees believe it. This requires specificity. Which parts of the job get better? How? What evidence supports this?

"AI will make you more valuable" is the strongest motivator—if the career path is clear. Employees who develop AI collaboration skills should have visible advancement opportunities. If AI proficiency is rewarded, adoption follows.

Practical Alignment Strategies

Tie adoption to career development, not just efficiency metrics. Create "AI-augmented" role progressions. Make AI proficiency a factor in promotions and compensation. Signal that the organization's future includes humans who are excellent at working with AI—not humans replaced by AI.

Solve employee pain points first. The first AI use cases shouldn't be the ones that most excite leadership. They should be the ones that most relieve employee frustration. When AI eliminates the parts of work that people hate, adoption happens naturally.

Share productivity gains. If AI saves someone 10 hours a week, what happens to those 10 hours? If the answer is "more work at the same pay," the incentive to adopt is weak. If the answer is "higher-value work, professional development, or improved work-life balance," the incentive is real.

Reward adoption behaviors, not just outcomes. In early stages, measure and reward experimentation, feedback, and engagement—not just productivity gains. This creates safety for the learning curve every new technology requires.

The Trust Prerequisite

None of this works without trust.

If employees have seen previous "efficiency" initiatives lead to layoffs, no amount of messaging about "AI as a tool to help you" will land. They've learned through experience that efficiency language is often elimination language in disguise.

Trust is built through action, not communication. What you do with the first AI wins—who benefits, whose work improves, what happens to the time saved—signals everything about what comes next.

If leadership captures all the gains from early AI adoption, employees learn that adoption benefits the company at their expense. If employees capture visible gains—better work, new skills, advancement opportunities—they learn that adoption benefits them.

Incentive alignment starts with trust. And trust starts with demonstrable action that employees benefit from AI adoption.

Pillar 2: Redesigned Processes

The second pillar addresses how work actually flows: you can't just add AI to existing processes and expect transformation.

The Workflow Assumption

Most AI deployments make a dangerous assumption: existing workflows stay the same, and AI simply accelerates them.

"We'll add AI to what people already do."

This sounds reasonable. It's usually wrong.

AI doesn't just speed up existing work. It changes what's possible, what's necessary, and who does what. Bolting AI onto legacy processes creates friction, confusion, and duplication—not value.

If your expense reporting process has 12 steps designed for manual review, adding AI to step 7 doesn't optimize the process. It creates a hybrid that's worse than either fully manual or fully redesigned approaches.

What "Redesigned Processes" Actually Means

Process redesign for AI means stepping back and asking: given AI's capabilities, how should this work actually flow?

This requires mapping:

Where AI fits in the decision flow. Not just the task flow—the decision flow. Where does AI provide information? Where does it recommend actions? Where does it execute autonomously? Where must humans decide?

Human-AI handoffs. Clear transitions: when does AI output go to a human? When does human input trigger AI action? What information passes at each handoff? Who's accountable at each stage?

Removed steps. AI often makes steps unnecessary—approvals, reviews, manual checks that existed because humans couldn't process information at scale. If you don't remove these steps, people do both the old work and the new AI-assisted work. That's addition, not transformation.

Added steps. AI also creates new requirements: human review of high-stakes AI outputs, exception handling for edge cases, quality monitoring, feedback loops for continuous improvement. These steps need to be designed in, not discovered in production.
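The mapping described above can be written down as data so that gaps become visible. The following is a minimal sketch, not a prescribed method: the `Step` fields, the role names, and the example expense workflow are all hypothetical, chosen only to illustrate handoffs and accountability.

```python
from dataclasses import dataclass
from enum import Enum


class Actor(Enum):
    HUMAN = "human"
    AI = "ai"


@dataclass(frozen=True)
class Step:
    name: str
    actor: Actor       # who performs the step
    accountable: str   # role that answers for the outcome ("" if unassigned)
    passes_on: str     # information handed to the next step


def find_handoffs(steps):
    """Return (from_step, to_step) pairs where work crosses the human/AI boundary."""
    return [(a, b) for a, b in zip(steps, steps[1:]) if a.actor != b.actor]


def unowned_steps(steps):
    """Return the names of steps that have no accountable role assigned."""
    return [s.name for s in steps if not s.accountable.strip()]


# Hypothetical redesigned expense workflow (illustrative only).
workflow = [
    Step("submit receipt", Actor.HUMAN, "employee", "receipt image and amount"),
    Step("extract and categorize", Actor.AI, "finance ops lead", "category and policy flags"),
    Step("review flagged items", Actor.HUMAN, "finance analyst", "approve or reject decision"),
    Step("issue reimbursement", Actor.AI, "", "payment confirmation"),
]

for a, b in find_handoffs(workflow):
    print(f"handoff: {a.name} -> {b.name} (passes: {a.passes_on})")
print("steps without an owner:", unowned_steps(workflow))
```

Listing the workflow this way forces an answer to the questions above at every transition: what passes across the handoff, and who is accountable. In this made-up example, the final automated step has no owner, which is the kind of gap that is better found at design time than in production.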

The Human-in-the-Loop Design Question

Every AI workflow requires explicit decisions about the human role:

Where must humans stay in the loop? High-stakes decisions. Ethical judgment calls. Relationship-critical moments. Situations where being wrong has significant consequences. Here, AI informs; humans decide.

Where can humans leave the loop? Routine, high-volume, low-variance work. Decisions where the cost of occasional AI errors is lower than the cost of human review at scale. Here, AI operates autonomously with periodic auditing.

Where should humans oversee the loop? Middle ground: AI executes, but humans spot-check, handle exceptions, and monitor for drift. Here, the human role shifts from doing the work to ensuring quality.

This mapping is organizational design, not technical design. It requires understanding your risk tolerance, your quality requirements, and the actual capabilities of both your AI systems and your people.

Most organizations don't do this mapping explicitly. They deploy AI, let informal practices emerge, and end up with inconsistent human-AI collaboration that varies by team, by individual, and by day.
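One way to make the mapping explicit is to write the three questions above as a simple rule. This is a rough sketch under stated assumptions: the function name, its parameters, and the idea of comparing a per-decision error cost with a per-decision review cost are illustrative simplifications, not a formula from the research cited here.

```python
from enum import Enum


class Oversight(Enum):
    HUMAN_DECIDES = "AI informs, human decides"
    HUMAN_OVERSEES = "AI executes, human spot-checks and handles exceptions"
    AUTONOMOUS = "AI executes, periodic audit"


def oversight_mode(high_stakes: bool, routine: bool,
                   error_cost: float, review_cost: float) -> Oversight:
    """Pick a human role for one type of decision.

    error_cost:  assumed expected cost of AI mistakes per decision
    review_cost: assumed cost of having a human review each decision
    """
    if high_stakes:
        # Significant consequences if wrong: humans stay in the loop.
        return Oversight.HUMAN_DECIDES
    if routine and error_cost < review_cost:
        # Occasional errors are cheaper than reviewing everything.
        return Oversight.AUTONOMOUS
    # Middle ground: AI does the work, humans ensure quality.
    return Oversight.HUMAN_OVERSEES


# Hypothetical decision types with made-up costs.
print(oversight_mode(high_stakes=True, routine=False, error_cost=500.0, review_cost=20.0).value)
print(oversight_mode(high_stakes=False, routine=True, error_cost=0.5, review_cost=4.0).value)
print(oversight_mode(high_stakes=False, routine=True, error_cost=9.0, review_cost=4.0).value)
```

The value of a rule like this is less the code than the conversation it forces: someone has to state the risk tolerance and the cost estimates out loud, and every team then applies the same answer.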

The Process Debt Problem

Here's an uncomfortable truth: most organizations have significant process debt.

Processes built years ago for a world without AI. Approval chains that exist because of past failures. Workarounds that became institutionalized. Steps that nobody remembers the purpose of.

AI deployment is an opportunity to pay down this debt—to redesign processes for the current reality, not the historical one. But most companies don't take this opportunity. They're in too much of a hurry to deploy.

So they automate broken processes. And they get automated broken outcomes—faster.

The organizations that succeed treat AI deployment as a forcing function for process redesign. They ask: if we were building this workflow from scratch today, with AI as a given, what would it look like?

That question leads to transformation. Adding AI to existing workflows leads to expensive incremental improvement at best.

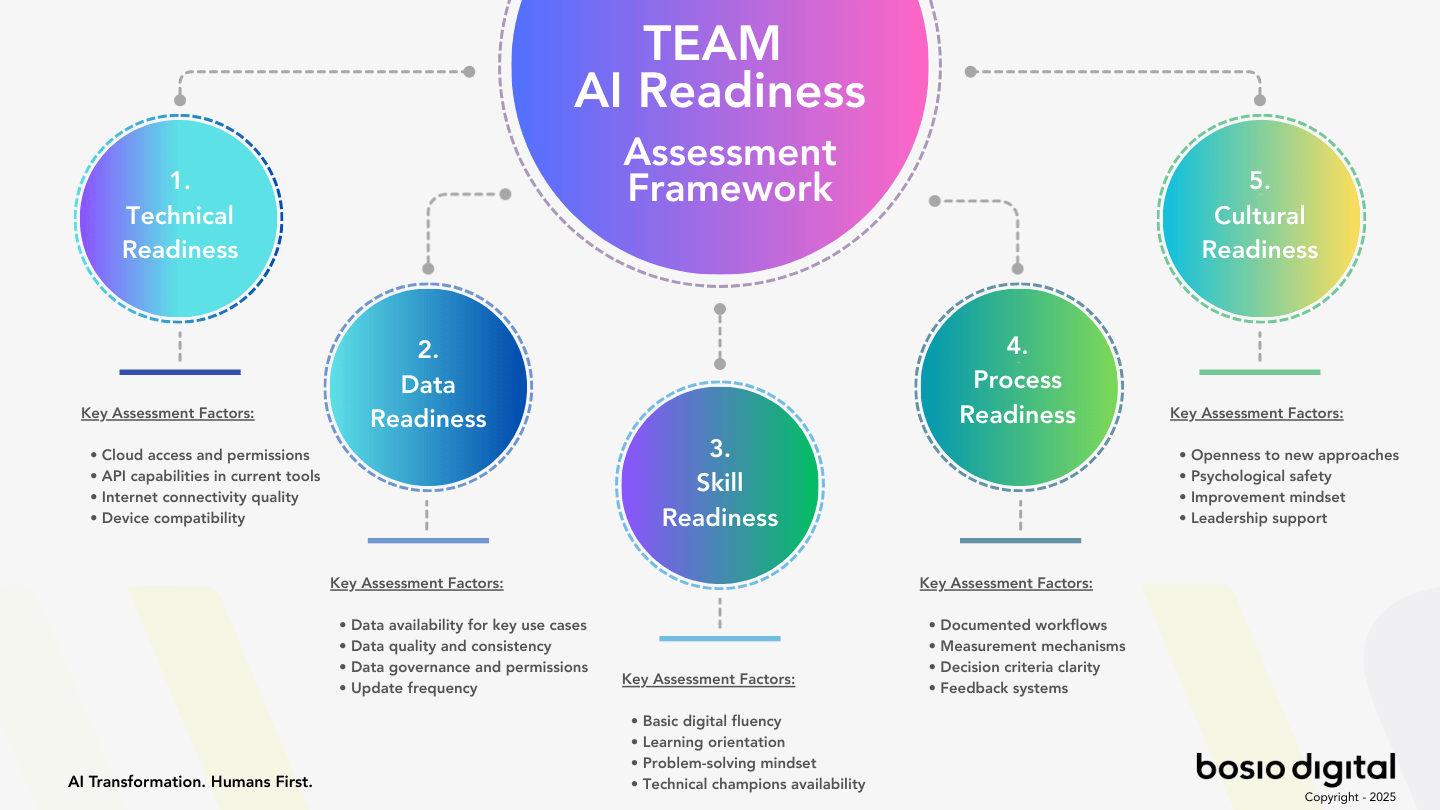

Pillar 3: AI-Ready Culture

The third pillar is the foundation that holds everything else up: organizational culture.

What "AI-Ready" Doesn't Mean

Let's clear away some misconceptions.

AI-ready doesn't mean "everyone is excited about AI." Excitement is nice; it's not necessary or sufficient. Plenty of successful AI adoptions happen in organizations where initial sentiment was skeptical.

AI-ready doesn't mean "everyone is trained on prompt engineering." Technical skills can be developed. Cultural readiness is harder to build and more important.

AI-ready doesn't mean "we have an AI use policy." Policies matter for governance. They don't create the cultural conditions for adoption.

What "AI-Ready" Actually Means

An AI-ready culture has five characteristics:

Psychological safety to experiment. People can try AI, make mistakes, and learn without punishment. They can say "I don't understand this" without losing status. They can report problems without being blamed for them.

Without psychological safety, people don't experiment. They either avoid AI entirely or use it in hidden ways that create risk. Neither leads to successful organizational adoption.

Comfort with uncertainty. AI outputs are probabilistic, not deterministic. The same prompt can produce different results. AI systems can be confidently wrong. This is uncomfortable for organizations built on predictability and control.

AI-ready cultures develop tolerance for "good enough" answers, iterative refinement, and outcomes that vary within acceptable ranges. They learn to work with uncertainty rather than demanding false precision.

Growth orientation. A belief that capabilities can develop—that people can learn new skills, roles can evolve, and change is opportunity rather than threat.

Fixed-mindset organizations see AI as a judgment on current capabilities: some people are "good with AI" and some aren't, and that's that. Growth-mindset organizations see AI proficiency as learnable by anyone willing to invest effort.

Trust in leadership intent. The belief—based on evidence, not just words—that leadership is deploying AI to elevate employees rather than eliminate them.

This trust doesn't come from messaging. It comes from consistent action over time. Organizations with a history of using technology transitions to improve employee experience have trust. Organizations with a history of using efficiency language to mask headcount reduction don't.

Collaborative relationship with technology. A mental model of AI as partner rather than replacement or master. Neither "AI will take my job" fear nor "AI will do my thinking for me" over-reliance.

This means seeing AI as a tool that extends capability—like a power tool that makes a skilled carpenter more productive without replacing the carpenter's judgment and craft.

How Culture Actually Changes

Here's what 25 years of working with organizational change has taught me: culture doesn't change through announcements, training programs, or policy updates.

Culture changes through repeated experiences that create new beliefs.

When someone experiments with AI, fails, and nothing bad happens—they update their belief about psychological safety. When a leader publicly says "I don't know how to do this yet" and asks for help—people update their belief about growth orientation. When the first AI efficiency gains result in better work rather than layoffs—employees update their beliefs about leadership intent.

Each experience is a data point. Enough data points in the same direction shift the culture.

This means culture change is led by action, not communication. What leaders do matters infinitely more than what they say. How the organization responds to early AI experiments signals what's actually valued.

Leaders must model the behavior. Using AI visibly. Admitting when they don't understand something. Asking questions publicly. Celebrating experiments regardless of outcome. The behavior at the top is the behavior that gets permission.

Early wins shape perception. The first AI deployments are cultural statements as much as technical implementations. If they succeed and employees benefit, the culture shifts toward openness. If they fail or only leadership benefits, the culture shifts toward resistance.

Peer influence outweighs hierarchy. People look to colleagues they trust more than executives they don't know. Champions and power users create cultural change by demonstrating what's possible in their immediate context.

The Patience Requirement

Culture change takes time. Real time. Months and years, not weeks.

This is where most AI initiatives go wrong. They want cultural transformation on a technology deployment timeline. "We need the organization to be ready by Q3 because that's when the system launches."

Culture doesn't work on project timelines. Rushed culture change isn't change—it's compliance theater. People learn to say the right things and perform the right behaviors while their actual beliefs remain unchanged.

Sustainable AI adoption requires sustainable culture investment. Starting the culture work early, before technology deployment. Continuing it long after deployment. Accepting that genuine transformation happens gradually.

Organizations that try to shortcut culture end up with surface-level adoption that reverts as soon as pressure shifts. Organizations that invest in culture end up with transformation that compounds over time.

Diagnosing Your Culture

Before you can build an AI-ready culture, you need to understand your starting point. Some diagnostic questions:

How does your organization respond when someone makes a mistake with new technology? Is there blame and punishment, or learning and adjustment? The answer predicts whether people will experiment with AI.

When's the last time a senior leader publicly said "I don't know"? If the answer is "I can't remember," your culture may not have the psychological safety that AI adoption requires.

How do employees talk about past technology changes? With enthusiasm, or with trauma? Previous technology transitions create templates for expectations. If past transitions were painful, employees expect this one to be painful too.

What happened to the last "efficiency initiative"? Did it benefit employees or only shareholders? Did the messaging match the reality? This history shapes trust in AI adoption messaging.

How quickly do informal workarounds emerge in your organization? This indicates whether people adapt to changing requirements (good for AI adoption) or rigidly follow outdated processes (bad for AI adoption).

The answers to these questions reveal where your culture already supports AI readiness and where work is needed.

How the Three Pillars Reinforce Each Other

These pillars aren't a checklist. They're a system.

The Interdependencies

Aligned incentives without redesigned processes gives people motivation with nowhere to go. They want to adopt AI, but the workflows don't support it. Friction overwhelms enthusiasm.

Redesigned processes without aligned incentives creates new ways of working that nobody follows. The workflows are theoretically better, but people have no reason to change their behavior.

Both without culture produces temporary compliance that reverts under pressure. People follow new workflows and respond to incentives while the program has attention. When leadership focus shifts, old patterns reassert themselves.

All three together create durable transformation. People want to adopt (incentives). They have clear ways to adopt (processes). They believe adoption is safe and valued (culture). This is how the 5% succeed.

The Right Sequence

Sequencing matters:

Start with culture. Create psychological safety. Send trust signals through action. Make it clear that experimentation is valued and mistakes are learning opportunities. This creates the conditions where everything else can work.

Then align incentives. Once people feel safe, show them how adoption benefits them. Career paths. Reduced drudgery. New capabilities. Make the personal case clear and credible.

Then redesign processes. With safety established and motivation in place, introduce new ways of working. People who feel safe and see personal benefit will engage with process change rather than resisting it.

Technology deployment fits around this, not the other way around. The technical implementation should follow organizational readiness, not lead it. Deploy when the organization can absorb the change, not when the technology is ready.

Most companies reverse this sequence. They deploy technology first, then try to build processes around it, then wonder why incentives are misaligned, and never address culture because it's "soft stuff."

The reversed sequence is why 80% fail.

The Investment Ratio

Most organizations spend 90% of their AI transformation budget on technology and 10% on organizational scaffolding.

For the first six months, the ratio should probably be inverted. Or at least balanced: 50% on scaffolding, 50% on technology.

This feels counterintuitive. The technology is the point, right? Why spend so much on everything else?

Because the technology only creates value if people use it. And people only use it if the organization is ready. Scaffolding investment front-loads effort but eliminates the back-loaded cost of failed adoption, rework, and organizational damage.

The cost of scaffolding is visible: the consultants, the workshops, the time spent on organizational development. The cost of skipping scaffolding is invisible—until the failure becomes undeniable.

What This Looks Like in Practice

Let me make this concrete.

The Champion Model as Scaffolding Infrastructure

Our approach to AI transformation centers on building champion networks—not as a training program, but as organizational infrastructure.

Executive Champions provide visible sponsorship and incentive alignment at the top. When senior leaders visibly use AI, talk about their own learning curve, and tie adoption to strategic priorities, it signals cultural permission and creates safety.

Operational Champions own process redesign at the team level. They understand local workflows, identify where AI fits, and adapt general frameworks to specific contexts. They're also local culture carriers—demonstrating what AI collaboration looks like in practice.

Power Users create peer influence and grassroots momentum. They're colleagues helping colleagues, which builds trust that no training program or executive announcement can create.

This three-tier structure addresses all three pillars simultaneously. Champions model cultural change. They make adoption beneficial through peer support. They redesign processes in their local context.

It's not a program that runs for six weeks and ends. It's infrastructure that persists and develops.

The Phased Approach

Organizations that succeed build scaffolding before deploying sophisticated AI:

Phase 1: Foundation (4-6 weeks)

Culture assessment: Where are we starting? What's the trust level? What's the history with technology change?

Incentive mapping: What do employees actually care about? Where's the current misalignment? How can we design for real benefit?

Process documentation: How does work actually flow today? Where are the pain points? Where's the process debt?

Champion identification: Who are the natural early adopters? Who has peer influence? Who's skeptical but respected?

Phase 2: Small Deployments (6-12 weeks)

Deploy AI in limited, high-visibility contexts that demonstrate value. Choose use cases that solve employee pain points, not just leadership priorities.

Use these deployments to test and refine scaffolding. Are incentives actually aligned? Are processes actually workable? Is culture shifting?

Learn fast. Adjust faster. These early deployments are learning exercises as much as value creation.

Phase 3: Scale (Ongoing)

Expand deployment with scaffolding in place. Each new use case follows the same pattern: ensure alignment, design processes, attend to culture.

Scale through champions, not mandates. Let successful early adopters pull peers forward rather than pushing from above.

Continuous refinement. Scaffolding isn't a one-time build. It requires ongoing maintenance, adjustment, and investment.

Metrics That Matter

If you only measure adoption rates and efficiency gains, you'll optimize for adoption rates and efficiency gains—by whatever means necessary. That often means forcing adoption and pressuring performance, which creates the backlash that kills long-term success.

Better metrics:

Voluntary usage rates. Are people using AI because they have to, or because they want to? Mandatory usage without voluntary adoption indicates compliance, not transformation.

Employee sentiment. How do people feel about AI at work? Is sentiment improving or declining over time? Declining sentiment predicts future adoption problems.

Process adherence. Are new workflows actually being followed? Or have workarounds emerged? Workarounds indicate process design problems.

Discretionary improvement. Are people suggesting enhancements to AI workflows? Identifying new use cases? Teaching colleagues? This discretionary effort indicates genuine engagement, not mere compliance.

Career trajectory correlation. Are employees who develop AI proficiency advancing? If AI adoption doesn't correlate with career progress, the incentive signal is weak.

These metrics take more effort to track than simple usage statistics. They're also more predictive of sustainable success.
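Two of these metrics can be approximated from data most organizations already have. The sketch below is illustrative only: the `Employee` fields and the sample records are invented, and the "career trajectory" measure is a plain difference in promotion rates rather than a statistical correlation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Employee:
    name: str
    mandated_sessions: int    # AI use required by the workflow
    voluntary_sessions: int   # AI use the person chose on their own
    ai_proficient: bool
    promoted: bool


def voluntary_usage_rate(staff):
    """Share of all AI sessions that were voluntary (0.0 if there is no usage)."""
    total = sum(e.mandated_sessions + e.voluntary_sessions for e in staff)
    voluntary = sum(e.voluntary_sessions for e in staff)
    return voluntary / total if total else 0.0


def promotion_rate(staff, proficient):
    """Promotion rate within the AI-proficient (or non-proficient) group."""
    group = [e for e in staff if e.ai_proficient == proficient]
    return sum(e.promoted for e in group) / len(group) if group else 0.0


# Invented sample data for illustration.
staff = [
    Employee("A", 10, 30, True, True),
    Employee("B", 20, 0, False, False),
    Employee("C", 10, 10, True, False),
    Employee("D", 20, 0, False, False),
]

rate = voluntary_usage_rate(staff)
gap = promotion_rate(staff, True) - promotion_rate(staff, False)
print(f"voluntary usage rate: {rate:.0%}")
print(f"promotion-rate gap (proficient minus non-proficient): {gap:.0%}")
```

A low voluntary share alongside high total usage suggests compliance rather than adoption, and a promotion gap near zero suggests the career signal described under Pillar 1 is not reaching people. With real data, small groups and confounding factors would need more careful treatment than this sketch gives them.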

The Blueprint Summary

Here's the blueprint for AI-ready organizations:

Pillar 1: Aligned Incentives

- Identify and address the motivation gap

- Make adoption genuinely beneficial for employees

- Solve employee pain points before leadership priorities

- Build trust through action, not messaging

- Share productivity gains

Pillar 2: Redesigned Processes

- Don't bolt AI onto legacy workflows

- Map human-AI collaboration explicitly

- Design handoffs, oversight, and escalation

- Pay down process debt

- Remove unnecessary steps, add necessary ones

Pillar 3: AI-Ready Culture

- Create psychological safety to experiment

- Build comfort with uncertainty

- Develop growth orientation

- Demonstrate trustworthy leadership intent

- Model collaborative relationship with technology

The Integration:

- Build culture first, then align incentives, then redesign processes

- Invest at least 50% in scaffolding, especially early

- Deploy technology when the organization is ready, not when the technology is ready

- Use champions as infrastructure, not just training recipients

- Measure scaffolding health, not just adoption metrics

An Invitation

The 80% of AI projects that fail don't fail because of technology. They fail because they deploy technology without building the organizational foundation to support it.

The blueprint isn't complicated. Aligned incentives. Redesigned processes. AI-ready culture. Three pillars that determine whether your AI investment creates value or joins the expensive pile of stalled initiatives.

Most organizations skip this work because it's invisible, time-consuming, and requires expertise that technical teams don't have.

The organizations that do this work—that build the scaffolding before they deploy—are the 5% that succeed.

This is what "Humans First" looks like in structural form. It's designing the organization to support human adoption, not just deploying technology and hoping for the best.

The technology is ready. The question is whether you'll build the foundation that lets your organization actually use it.

Sascha Bosio and Laura Pretsch are the co-founders of bosio.digital, an AI transformation consultancy specializing in human-centered adoption. With 25+ years of digital transformation experience alongside deep expertise in human development and organizational change, they help mid-market companies build the organizational foundation for AI that actually works. Their "Humans First" methodology has achieved adoption rates of 70%+ and employee satisfaction scores of 80%+ in AI implementations across industries.

Resources & References

Research Studies:

- RAND Corporation (August 2024): "The Root Causes of Failure for Artificial Intelligence Projects and How They Can Succeed" https://www.rand.org/pubs/research_reports/RRA2680-1.html

- MIT NANDA Initiative (July 2025): "The GenAI Divide: State of AI in Business 2025" https://fortune.com/2025/08/18/mit-report-95-percent-generative-ai-pilots-at-companies-failing-cfo/

Harvard Business Review Articles:

- "Most AI Initiatives Fail. This 5-Part Framework Can Help." Ayelet Israeli, Eva Ascarza (November 2025) https://hbr.org/2025/11/most-ai-initiatives-fail-this-5-part-framework-can-help

- "Overcoming the Organizational Barriers to AI Adoption." Jin Li, Feng Zhu, Pascal Hua (November 2025) https://hbr.org/2025/11/overcoming-the-organizational-barriers-to-ai-adoption

Related Reading:

- "AI Adoption. Humans First. The Manifesto." Sascha Bosio, bosio.digital [Link to Manifesto on bosio.digital]