Question: How should executives transform their leadership for the AI age?

Quick Answer: Research from BCG shows that 65% of CEOs identify accelerating AI as a top-three priority, yet only 5% of organizations have achieved substantial financial gains from it. The difference between the 5% and everyone else isn't technology investment; it's leadership reinvention. "Future-built" companies, which outperform by 3.6x in total shareholder returns, invest up to 60% of their AI budgets in people (versus 27% for the average organization), plan to upskill 50% or more of their employees, and are fundamentally redesigning how leaders set context, make decisions, and orchestrate human-AI teams. The biggest transformation isn't happening to your workforce. It's happening to your job.

The 5% Problem

Here's a number that should stop every executive mid-strategy-session: only 5% of organizations have reaped substantial financial gains from AI.

Not 5% have started. Not 5% have experimented. Five percent have actually made money from it.

This comes from BCG's January 2026 global CEO survey — the same research that found 65% of CEOs now identify accelerating AI as a top-three business priority. The Conference Board's separate analysis confirms the tension: CEOs simultaneously rank AI as their leading investment priority, their greatest external risk, and their most urgent governance challenge.

That gap — between universal urgency and almost nonexistent returns — is the defining leadership challenge of 2026. And the uncomfortable truth is that it has very little to do with the technology itself.

We covered what's happening with AI agents in the OpenClaw wake-up call. We analyzed who's building these systems in the agent arms race between OpenAI, Anthropic, and Google. This article is about something more personal.

It's about you.

Because the biggest transformation isn't happening to your workforce, your tech stack, or your competitive landscape. It's happening to the way you — the person making decisions, setting direction, managing people — actually do your job. And if you haven't fundamentally changed how you work in the last twelve months, you're already behind the leaders who have.

Only 12% of CEOs say AI has delivered both cost and revenue benefits. That leaves 88% who have yet to see both, many of them investing heavily with little meaningful return. That's not a technology failure. That's a leadership failure. And fixing it starts with understanding exactly where most leaders are going wrong.

Why Most Leaders Are Getting This Wrong

The pattern is remarkably consistent across mid-market companies. A CEO reads about AI disruption, calls a meeting, and says some version of: "We need an AI strategy." What happens next almost always follows the same script.

The CTO or IT director gets tasked with evaluating tools. A pilot project launches — usually something safe like document summarization or customer service chatbots. A vendor is selected. An implementation timeline is set. Six months later, the pilot works but nobody uses it. Twelve months later, the board asks about ROI and the answer is unclear. Eighteen months later, the initiative is quietly deprioritized.

BCG calls this the "bolt-on" approach: layering AI onto existing workflows without changing the underlying structure of how work gets done. It goes a long way toward explaining why only 5% of organizations see real returns. You can't bolt a fundamentally different capability onto a fundamentally unchanged organization and expect transformation.

The companies getting this wrong share three common mistakes.

They delegate AI to technical teams. AI strategy is treated as an IT project. The CEO sets the budget, the CTO picks the tools, and leadership goes back to running the business the same way they always have. This misses the point entirely. As BCG's research on workforce transformation makes clear, 70% of AI value comes from rethinking the people component, not the technology. When you delegate AI to IT, you delegate 70% of the value to a team that doesn't control it.

They optimize without reinventing. As we explored in the reinvention question every business must answer, there's a massive difference between using AI to do existing work faster and using AI to rethink which work should exist at all. Most executives default to efficiency because it feels safe. But efficiency gains on expiring workflows don't compound — they evaporate.

They haven't changed their own workflows. This is the most telling signal. If you're asking your team to adopt AI but haven't integrated it into your own daily decision-making, your organization hears the message clearly: AI is a tool for the workers, not a priority for leadership. That message is devastating to adoption.

The executives leading the 5% aren't just approving budgets. They're personally using AI agents to prepare for board meetings, pressure-test strategic assumptions, and accelerate their own decision cycles. They're modeling the behavior they're asking their organizations to adopt.

The Five Shifts Leaders Must Make

BCG's research, combined with the MIT Sloan Management Review's analysis of agentic AI leadership, reveals five fundamental shifts that separate the executives driving real AI value from those still waiting for technology to save them.

Shift 1: From command-and-control to context-setting.

The traditional leadership model assumes the leader knows the answer. You gather data, analyze it, decide, and direct your team to execute. AI inverts this model. When agents can process information faster than any human, the leader who tries to be the smartest person in the room becomes the bottleneck.

The new model is context-setting — and the leaders who do it best are the ones who build structured context that both humans and AI can use. You define the strategic constraints, the values, the boundaries — and then you create the conditions for human-AI teams to solve problems within that framework. TCS, which trained 576,000 employees in AI fluency and created "AI Fridays" for hands-on experimentation, discovered that the leaders who thrived weren't the ones with the best technical understanding. They were the ones who could articulate the clearest strategic context for their teams to operate within.

Shift 2: From annual planning to continuous adaptation.

The annual strategic planning cycle is dying. When AI agents can test market hypotheses in days instead of quarters, the competitive advantage shifts from having the best plan to having the fastest learning loop.

Brightstar Capital Partners built 30 AI agents on the OpenAI platform — including a "Red Team Agent" specifically designed to challenge executive conclusions and expose blind spots. Their approach isn't about replacing strategic thinking. It's about accelerating the cycle from assumption to evidence to decision. The leaders who still operate on annual planning cadences are making decisions with information that's already stale.

Shift 3: From tenure-based authority to learning velocity.

This one is personal, and it's uncomfortable. For decades, leadership authority came from experience — you knew more because you'd been there longer. AI disrupts this because it commoditizes historical knowledge. The person who's been in the industry for 25 years and the person who joined last month both have access to the same AI-powered insights.

The new currency of authority is learning velocity — how quickly you can absorb new capabilities, integrate them into your decision-making, and adapt your approach. BCG's research on future-built companies shows they plan to upskill 50% or more of their employees, compared to only 20% for laggards. That upskilling starts at the top. A CEO who can't use AI tools fluently can't credibly lead an AI transformation.

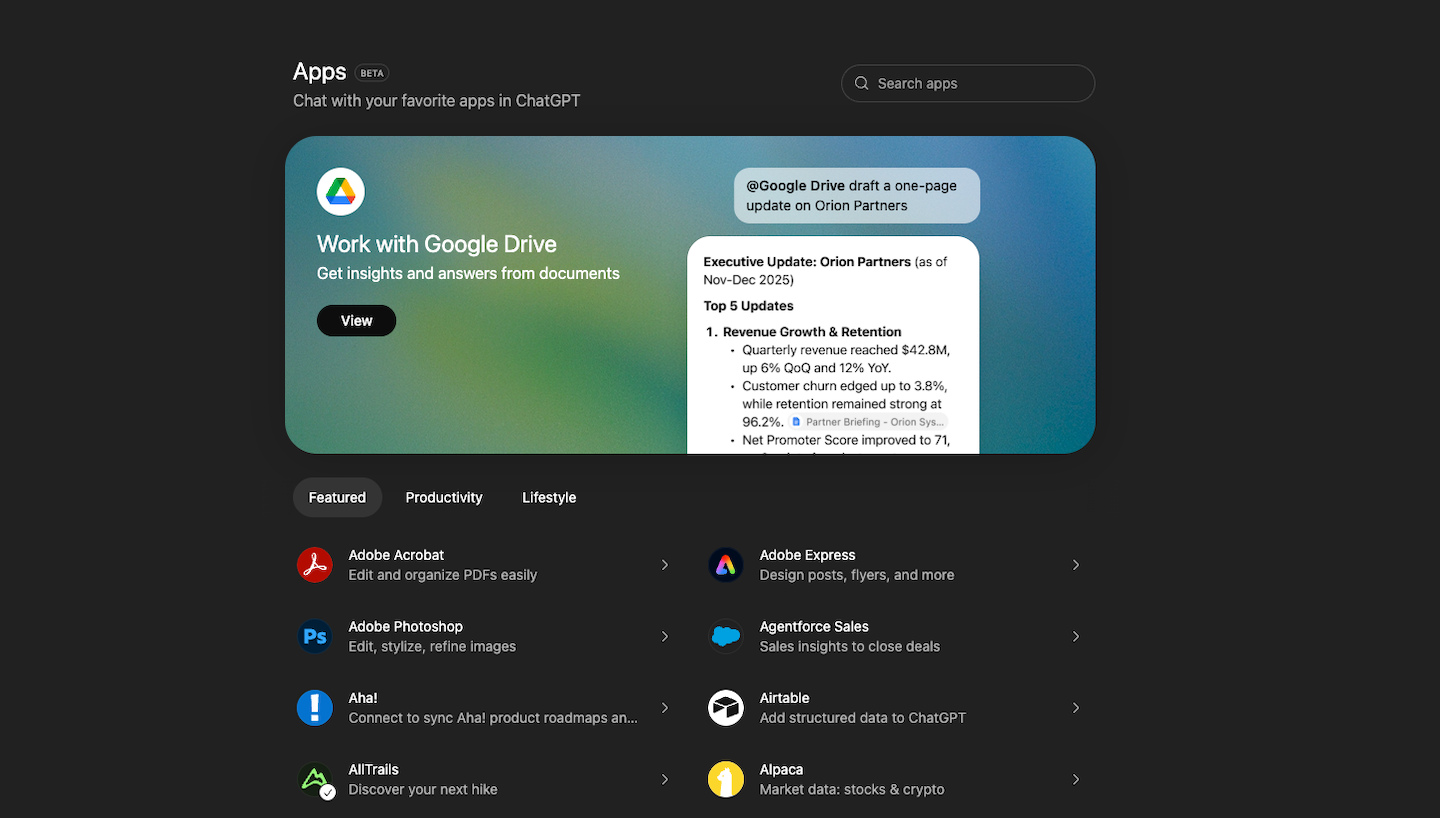

Shift 4: From managing people to orchestrating human-AI teams.

Here's a statistic that reframes the entire leadership role: 76% of executives now view agentic AI as a coworker, not a tool. AI decision-making authority is expected to grow 250% over the next three years among leading organizations.

This fundamentally changes what management means. You're not just managing human direct reports. You're orchestrating teams that include AI agents with real decision-making capability. The skills that made someone a great people manager — emotional intelligence, coaching, motivation — still matter, but they're insufficient. Leaders now also need to define decision rights for AI agents, design escalation frameworks, and monitor outcomes from systems that operate autonomously.

Shift 5: From protecting the status quo to perpetual reinvention.

The natural instinct of successful leaders is to protect what works. You built something that generates revenue and employs people — of course you want to preserve it. But BCG's data shows that the future-built companies outperforming by 3.6x in total shareholder returns, 1.7x in revenue growth, and 1.6x in EBIT margins are the ones that treat their own operating model as perpetually provisional.

This doesn't mean constant chaos. It means building an organizational capability for continuous redesign — where questioning the current model isn't threatening, it's expected.

Redesigning How Work Actually Gets Done

The five leadership shifts aren't abstract philosophy. They demand concrete structural changes to how your organization operates. And the data on what's coming is striking.

BCG and MIT Sloan's joint research on agentic AI leadership found that among organizations leading in agent deployment:

- 66% expect fundamentally redefined roles and operating models

- 58% anticipate changes to governance and decision-making rights

- 45% expect a reduction in middle management layers

- 29% expect fewer entry-level roles

- 43% are now open to hiring generalists over specialists

These aren't predictions about a distant future. They are the near-term expectations of the organizations furthest ahead in deploying AI agents.

The middle management finding deserves particular attention. If 45% of agentic AI leaders expect fewer middle management layers, the implication is clear: much of what middle managers do today — aggregating information upward, translating strategy downward, coordinating across teams — is precisely what AI agents do well. The middle managers who survive and thrive will be the ones who shift from information routing to judgment, coaching, and complex problem-solving that agents can't handle.

For your organization, this means rethinking three things.

Decision architecture. Which decisions should humans make, which should agents make, and which require both? Most organizations haven't even asked this question formally. The leaders seeing results have mapped their key decision flows and deliberately redesigned them with AI agents at specific points — not replacing human judgment, but augmenting it with faster data processing and pattern recognition.

Role design. Traditional job descriptions list tasks. AI-era role design starts with outcomes and then allocates tasks between humans and agents based on where each adds the most value. This is the practical expression of what we call building AI-ready organizations — designing work around human-AI collaboration rather than retrofitting AI into human-only workflows.

Governance frameworks. When agents make decisions or take actions, who's accountable? When an AI agent processes customer data incorrectly, who owns the outcome? As we've detailed in our work on AI governance, these questions can't be answered retroactively. The organizations that wait for an AI mistake to force governance development pay a much higher price — in customer trust, regulatory scrutiny, and internal confidence — than those that build the framework proactively.

The People Investment That Separates Winners from Losers

This is where the data becomes definitive.

BCG's research across hundreds of organizations found that the "future-built" companies — the ones generating 3.6x total shareholder returns — don't just invest more in AI technology. They invest radically more in the humans working alongside it.

The gap is dramatic:

- Future-built companies plan to upskill 50% or more of their employees. Laggards plan to upskill about 20%.

- Future-built companies allocate up to 60% of their AI budgets to upskilling and workforce development. The average organization allocates 27%.

- And the finding that should reshape every AI budget conversation: 70% of AI value comes from rethinking the people component, not the technology.

Read that last number again. Seven out of ten dollars of AI value don't come from better algorithms, bigger models, or more sophisticated tools. They come from how your people work with those tools, how your organization is structured around them, and how your culture supports the transition.

This is precisely why most AI projects fail — not from technical shortfalls, but from human factors that never get the investment they require. The technology works. The people strategy doesn't exist.

The CHROs at future-built companies have become central strategic players — what BCG calls the "reinvention of the CHRO." They're not running traditional HR functions. They're redesigning the relationship between humans and AI across the entire organization. They're building learning cultures where experimentation is rewarded, where AI fluency is developed at every level, and where the fear that naturally accompanies this much change is acknowledged and addressed rather than ignored.

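If your organization is spending 80% of its AI budget on tools and 20% on people, the research is unambiguous: you are below even the 27% average and far from the up to 60% that future-built companies devote to people. You have the ratio effectively inverted.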

What This Looks Like on Monday Morning

Theory is useful. Specifics are what change behavior. Here's what the executives in the 5% are actually doing differently in their daily and weekly work.

They start their day with AI. Before their first meeting, they use AI agents to synthesize overnight developments in their industry, flag anomalies in business metrics, and prepare analysis for the day's agenda. This isn't about saving 20 minutes. It's about showing up to every conversation with better preparation than was previously possible.

They pressure-test decisions with adversarial agents. Brightstar Capital's "Red Team Agent" model is spreading. Leaders are using AI to challenge their own conclusions before presenting them to boards or teams. What assumption is this strategy based on? What would have to be true for this to fail? What data contradicts this direction? Having an AI agent that's designed to push back — without organizational politics, without career risk, without ego — produces better decisions.

They've redesigned their meeting rhythms. When AI agents can summarize performance data, identify trends, and flag exceptions, the purpose of leadership meetings shifts. You don't need 90 minutes to review what happened. You need 30 minutes to decide what to do about it. The executives seeing results have cut their status-update meetings dramatically and replaced them with shorter, decision-focused sessions where AI has already done the analytical preparation.

They're building AI fluency visibly. They talk openly about what they're experimenting with, what's working, what's failed. They share their AI workflows with their teams. They create psychological safety around AI adoption by demonstrating that even at the executive level, learning is ongoing and imperfect. This works with human nature, not against it — people adopt what their leaders authentically model.

They've established clear decision rights for agents. Not everything needs executive approval, and not everything should be delegated to AI. The practical framework looks like this: AI agents handle data gathering, pattern recognition, and initial recommendations autonomously. Humans retain judgment calls that involve values, relationships, reputational risk, and novel situations. The boundary is explicit, documented, and revisited quarterly as capabilities evolve.
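For readers who want to see what "explicit, documented" can look like in practice, here is a minimal sketch that writes the framework above down as a small policy table in Python. Everything in it is a hypothetical illustration, not a specific product's API: the task and factor labels, the `route` and `review_due` helpers, and the 91-day interval standing in for "quarterly" are all assumptions. The rule it encodes follows the paragraph: any factor involving values, relationships, reputational risk, or novelty keeps the decision with a human, and anything not explicitly listed defaults to a human as well.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum


class Owner(Enum):
    AGENT = "agent acts autonomously"
    HUMAN = "human decides"


# Hypothetical labels for the work the framework lets agents handle on their own.
AGENT_TASKS = {"data_gathering", "pattern_recognition", "initial_recommendation"}

# Hypothetical labels for the factors that keep a decision with a human.
HUMAN_FACTORS = {"values", "relationships", "reputational_risk", "novel_situation"}

# Assumed stand-in for "revisited quarterly".
REVIEW_INTERVAL = timedelta(days=91)


@dataclass
class Decision:
    name: str
    task_type: str
    factors: set[str]


def route(decision: Decision) -> Owner:
    """Any human factor wins; unlisted task types also default to a human."""
    if decision.factors & HUMAN_FACTORS:
        return Owner.HUMAN
    if decision.task_type in AGENT_TASKS:
        return Owner.AGENT
    return Owner.HUMAN


def review_due(last_review: date, today: date) -> bool:
    """True once the documented boundary is due for its periodic revisit."""
    return today - last_review >= REVIEW_INTERVAL


if __name__ == "__main__":
    weekly = Decision("Summarize weekly sales anomalies", "pattern_recognition", set())
    pricing = Decision("Reprice a key account", "initial_recommendation", {"relationships"})
    print(route(weekly).value)   # agent acts autonomously
    print(route(pricing).value)  # human decides
```

Defaulting unknown cases to a human is a deliberate design choice in this sketch: the boundary only widens when someone adds a task type to the documented list at a scheduled review, which keeps the expansion of agent authority a leadership decision rather than an accident.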

They invest in the "last mile" of implementation. The hardest part of AI adoption isn't getting the technology to work. It's getting 200 people to change how they work. The executives in the 5% spend disproportionate time on change management — running workshops, removing obstacles to adoption, celebrating early wins, and addressing resistance with empathy rather than mandates. They understand that your people are the heroes of this transformation, not obstacles to it.

The Leadership Qualities AI Cannot Replace

Here's where this story turns from challenging to genuinely hopeful.

McKinsey's research on generative AI and the future of work identifies the human capabilities that remain irreplaceable — and every one of them is a leadership quality.

Aspiration. The ability to envision a future that doesn't yet exist and inspire others to build it. AI can optimize toward a defined objective. It cannot dream a new one into existence. The leader who can articulate a compelling vision of what their organization becomes — not despite AI, but because of it — holds a capability no model can replicate.

Judgment. Not analysis — AI handles analysis. Judgment is the ability to weigh competing priorities, navigate ambiguity, and make calls when the data is incomplete or contradictory. It's knowing when the numbers say one thing but the situation demands another. This is the domain where decades of human experience become more valuable, not less, because you're applying judgment to a richer, faster stream of AI-generated insight.

Empathy. The capacity to understand what your people are feeling — their excitement, their fear, their confusion — and respond in ways that build trust. AI can simulate empathy. It cannot feel it. In a period of unprecedented workplace change, the leaders who create genuine psychological safety will retain their best people while competitors lose theirs.

Wisdom. The integration of knowledge, experience, and ethical reasoning that produces decisions people trust. Wisdom is what tells you which AI recommendation to follow and which to override. It's the hard-won understanding that some things that can be optimized shouldn't be — that efficiency and humanity sometimes pull in different directions, and the wise leader knows when to choose humanity.

Creativity. Not content generation; AI handles that capably. Creativity in the leadership context is the ability to see connections between seemingly unrelated domains, to reframe problems in ways that reveal new solutions, to imagine business models and organizational structures that have never existed before. AI amplifies human creativity; it doesn't replace it. The leader who combines creative vision with AI-powered execution becomes far more capable than either human or machine could be alone.

McKinsey estimates that up to 30% of current US work hours could be automated by 2030. That's a staggering shift. But the work that remains — and grows in importance — is precisely the work that demands these human leadership qualities.

The age of AI doesn't diminish the need for exceptional leadership. It amplifies it. The organizations that thrive will be led by people who possess the qualities no algorithm can replicate — and who use AI to extend those qualities further than was previously imaginable.

The Humans First approach isn't sentiment. It's strategy. The most human leaders will be the most valuable leaders of this era — not because technology doesn't matter, but because technology without human judgment, empathy, and wisdom is just sophisticated automation. And sophisticated automation, without the leaders who can direct it wisely, is a very expensive way to go nowhere.

Frequently Asked Questions

How do I start transforming my leadership approach for AI?

Start with your own workflows. Before asking your organization to change, spend two weeks integrating AI tools into your daily decision-making — meeting preparation, strategic analysis, competitive research. The executives seeing the best results are personally fluent in AI tools, which gives them both the credibility and the practical understanding to lead transformation effectively.

Why are so few companies seeing real AI returns?

BCG's research shows only 5% of organizations have achieved substantial financial gains from AI because the vast majority treat it as a technology project rather than an organizational transformation. Seventy percent of AI value comes from the people component — upskilling, role redesign, workflow restructuring, and cultural change — but most budgets allocate less than 30% to these areas.

What leadership skills matter most in the AI era?

McKinsey identifies five irreplaceable human capabilities: aspiration (visioning a new future), judgment (navigating ambiguity), empathy (building trust during change), wisdom (ethical decision-making), and creativity (reimagining business models). These become more valuable, not less, as AI handles analytical and operational tasks.

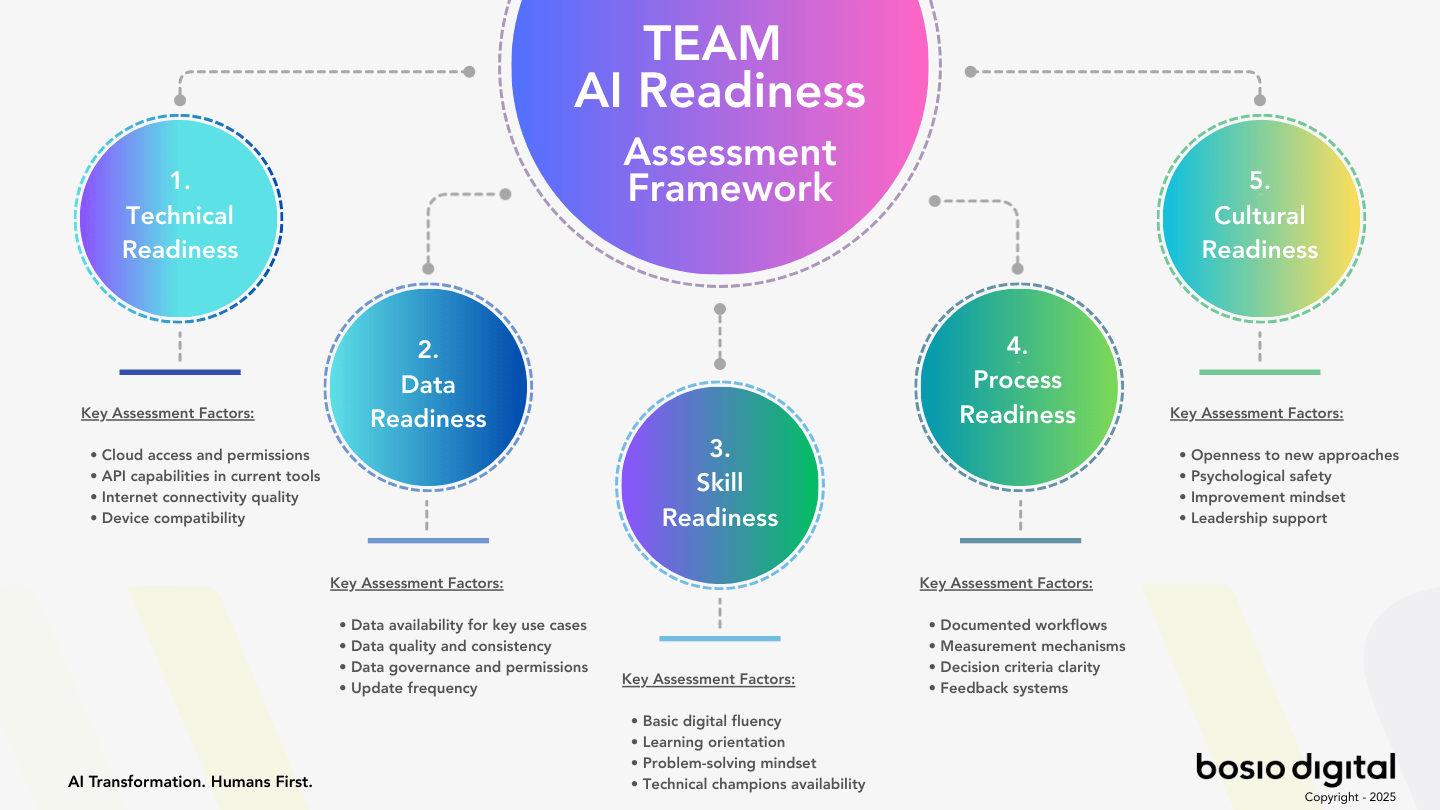

How should I restructure my team for human-AI collaboration?

Start by mapping your key decision flows. Identify which decisions benefit from AI's speed and data processing, which require human judgment, and which need both. Then redesign roles around outcomes rather than tasks — allocating work between humans and AI agents based on where each adds the most value.

What percentage of my AI budget should go to people and upskilling?

BCG's data is clear: future-built companies that outperform by 3.6x in total shareholder returns allocate up to 60% of their AI budgets to workforce development and upskilling, compared to just 27% for average organizations. If your budget is technology-heavy, the research suggests rebalancing toward people.

How will AI agents change middle management?

Among organizations leading in AI agent deployment, 45% expect a reduction in middle management layers. The coordination, information-routing, and status-reporting functions that define many middle management roles are precisely what AI agents handle well. Middle managers who thrive will shift toward coaching, complex judgment, cross-functional problem-solving, and human leadership — roles AI cannot fill.

How do I build AI governance without slowing down adoption?

Establish decision rights upfront: define what AI agents can do autonomously, what requires human review, and what remains exclusively human. Keep the framework lightweight and revisit it quarterly as capabilities evolve. The organizations that build governance proactively spend less time on it overall — and avoid the costly trust breakdowns that come from uncontrolled AI mistakes.

Sources

- BCG, "As AI Investments Surge, CEOs Take the Lead" (January 2026)

- BCG, "AI Transformation Is a Workforce Transformation" (2026)

- BCG, "AI Leaders Outpace Laggards in Revenue Growth, Cost Savings" (September 2025)

- BCG/MIT Sloan, "Agentic AI Blurs Line Between Tool and Teammate" (November 2025)

- MIT Sloan Management Review, "The Emerging Agentic Enterprise: How Leaders Must Navigate a New Age of AI" (2026)

- The Conference Board, "AI and the C-Suite: Implications for CEO Strategy in 2026"

- McKinsey Global Institute, "Generative AI and the Future of Work in America"

- TCS, "Leadership Is Changing in the Age of AI: Lessons from Davos 2026"

- World Economic Forum, "AI Roadmap for Transforming Business" (January 2026)

- BCG, "Reinvention of the CHRO in an AI-Driven Enterprise" (2026)

- Harvard Business Review, "How Executives Are Thinking About AI Heading Into 2026"