Your Marketing Team Is Probably Using AI Wrong

Most marketing teams are using AI like a fancy search engine. They open ChatGPT when they're stuck, type a question, get a mediocre answer, sigh, and go back to doing things the old way.

That's not AI adoption. That's AI dabbling.

The teams actually getting value from AI aren't using it for one-off questions. They've built AI into their recurring workflows—the work that follows a pattern and eats up hours every week. Email sequences. Content drafts. Performance reports. The repetitive stuff that's necessary but not where you want to spend your best thinking.

The difference isn't about being more tech-savvy. It's about being more intentional. These teams asked a different question. Instead of "how can AI help me right now?" they asked "what do we do every week that AI could do 80% of, with us handling the last 20%?"

This article walks through five workflows where that 80/20 split works well. To make this concrete, we'll follow a single scenario throughout: imagine your team is preparing to launch a new product feature next month. You need emails, content, reporting—the usual. Here's how AI fits into each piece, and where your judgment stays essential.

Workflow 1: Prompting That Gets Usable Outputs

Before you can build AI into any workflow, your team needs to get consistently good outputs. Most people don't. And the gap between people who get useful results and people who get generic mush usually comes down to one thing: how they ask.

Consider the difference between asking a new contractor to "design something nice" and giving them a detailed brief with examples, constraints, and context about your audience. Same contractor, dramatically different output. AI works the same way.

Here's a prompt most people would write for our product launch scenario:

"Write an email announcing our new feature."

And here's what that prompt is actually saying to the AI: figure out what the feature is, guess who the audience might be, assume what tone we'd want, decide how long it should be, and make up what we'd want them to do after reading it. That's a lot of guessing. No wonder the output needs heavy editing.

A prompt that actually works gives the AI what it needs to succeed: who it should write as, who it's writing for, what specifically you need, how it should be structured, and what good looks like. For our launch email, that might sound like this:

"You're a B2B email copywriter for a manufacturing software company. Write a launch announcement for our new inventory forecasting feature. The audience is operations managers at mid-size manufacturers who are already customers—they know us, so skip the company intro. Tone should be professional but warm, not corporate or salesy. Keep it to 150 words max with one clear call-to-action to join a live demo. Here's an example of an email that worked well for our last feature launch: [paste example]."

This takes maybe 90 seconds longer to write and can save 20 minutes of editing. More importantly, it means anyone on your team can get quality outputs, not just whoever happens to be "good at AI."

The teams that get consistent results have built a shared library of prompts for their recurring tasks. Not a formal system—just a shared doc where someone writes "here's the prompt that works for product launch emails" and everyone uses it. The prompt becomes institutional knowledge rather than individual skill.
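If the shared prompt doc starts to grow, one option is to keep each entry as a fill-in-the-blanks brief rather than a one-off paragraph. Here's a minimal Python sketch of that idea. It assumes a hypothetical `PromptBrief` structure whose fields mirror the ingredients above: who the AI writes as, what you need, who it's for, the constraints, and an optional example of what good looks like. It illustrates the pattern and is not a feature of any particular AI tool. The output is plain text you'd paste into whichever assistant your team uses.

```python
from dataclasses import dataclass


@dataclass
class PromptBrief:
    """One entry in a shared prompt library (hypothetical structure)."""
    role: str          # who the AI should write as
    task: str          # what specifically you need
    audience: str      # who it's writing for
    constraints: str   # tone, length, structure, call-to-action
    example: str = ""  # optional: a past piece that worked well

    def render(self) -> str:
        """Assemble the brief into one prompt string to paste into an AI tool."""
        lines = [
            f"You're {self.role}.",
            self.task,
            f"The audience is {self.audience}.",
            self.constraints,
        ]
        if self.example:
            lines.append("Here's an example that worked well before:\n" + self.example)
        return "\n".join(lines)


# The library itself is just named briefs that everyone reuses.
PROMPT_LIBRARY = {
    "launch_email": PromptBrief(
        role="a B2B email copywriter for a manufacturing software company",
        task="Write a launch announcement for our new inventory forecasting feature.",
        audience=("operations managers at mid-size manufacturers who are already "
                  "customers, so skip the company intro"),
        constraints=("Tone: professional but warm, not corporate or salesy. "
                     "Max 150 words, one call-to-action to join a live demo."),
    ),
}

if __name__ == "__main__":
    print(PROMPT_LIBRARY["launch_email"].render())
```

For the next launch, you change `task` and `example` and keep everything else. The structure, not any one person's phrasing, becomes the shared knowledge.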

Workflow 2: Context Building

Here's the real reason most AI outputs feel generic: the AI doesn't know anything about your business.

Every time you start a fresh conversation, you're talking to an AI with amnesia. It doesn't know your brand voice. It doesn't know your customers' actual problems. It doesn't know what makes your product different from competitors. So it writes content that could be for anyone—because as far as it knows, it is.

Context building is the fix. You create reference documents that capture your business specifics, then feed them to AI at the start of relevant tasks. The outputs go from "sounds like AI" to "sounds like us."

For most marketing teams, four documents cover the essentials. A brand voice document that captures how you sound—not abstract adjectives like "professional yet friendly," but actual examples of sentences that sound like you and sentences that don't. An audience profile that goes beyond demographics into how your customers describe their problems, what objections they raise, and what finally makes them say yes. A product overview in plain language that explains what you actually do and why it matters, not marketing fluff. And a current campaign document that captures what you're promoting right now, what the key messages are, and what offers are in market.

These don't need to be elaborate. A brand voice document might be one page with five examples of "this sounds like us" and five examples of "this doesn't." An audience profile might be a few paragraphs describing your typical buyer. The value comes from having it written down and ready to paste.

For our product launch, this changes everything. Instead of asking AI to write an announcement with no context, you paste your brand voice guide and your audience profile first. The AI now knows that your operations manager audience cares about reducing emergency orders, that they're skeptical of "AI-powered" claims because they've been burned before, and that your voice is direct and practical rather than hype-driven. The draft it produces will still need editing, but it'll be editing for refinement rather than editing for relevance.
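If someone on your team is comfortable with a little scripting, the "paste context first" habit can be automated. Here's a minimal Python sketch. It assumes the four reference documents are kept as plain text in a hypothetical `CONTEXT_DOCS` dictionary; the placeholder contents borrow the launch scenario's details. A hypothetical `with_context` helper puts the chosen documents ahead of the task so the AI reads them first.

```python
# Hypothetical context library: the four reference documents as plain text.
# Replace each placeholder with your team's real document.
CONTEXT_DOCS = {
    "brand_voice": ("Direct and practical, not hype-driven. "
                    "[Paste five 'sounds like us' and five 'doesn't' examples.]"),
    "audience": ("Operations managers at mid-size manufacturers. They care about "
                 "reducing emergency orders and are skeptical of 'AI-powered' claims "
                 "because they've been burned before."),
    "product": "[Plain-language overview of what we do and why it matters.]",
    "campaign": "[What we're promoting now, key messages, offers in market.]",
}


def with_context(task: str, docs: dict, use: list) -> str:
    """Return a prompt with the chosen reference documents placed ahead of the task."""
    missing = [name for name in use if name not in docs]
    if missing:
        # Fail loudly rather than quietly sending a prompt without its context.
        raise KeyError(f"No context document named: {', '.join(missing)}")
    sections = [f"## {name.replace('_', ' ').title()}\n{docs[name]}" for name in use]
    return "Background about our business:\n\n" + "\n\n".join(sections) + "\n\nTask:\n" + task


if __name__ == "__main__":
    print(with_context("Write a launch announcement for our inventory forecasting feature.",
                       CONTEXT_DOCS, ["brand_voice", "audience"]))
```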

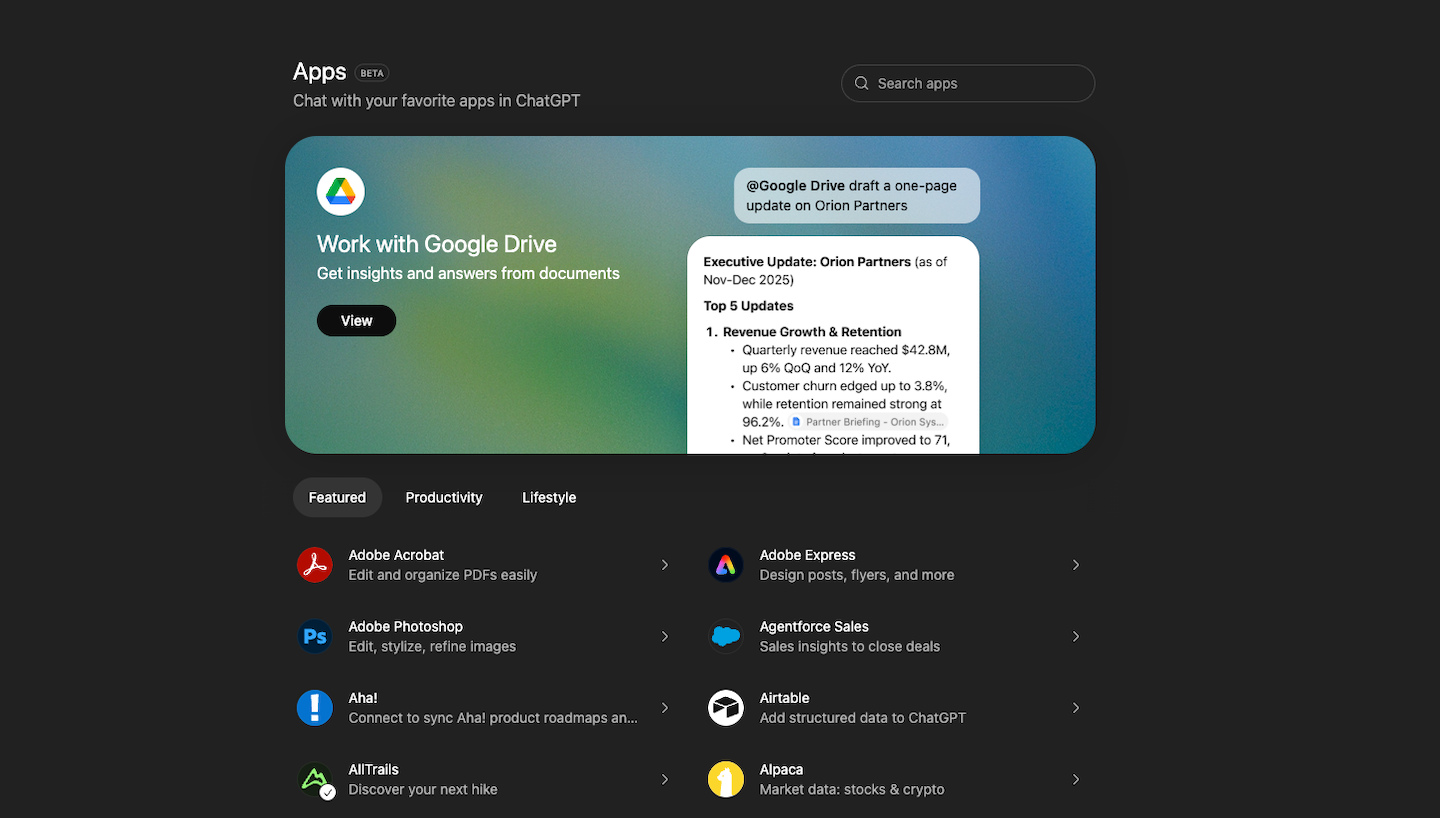

Some tools make this easier. Claude's Projects feature lets you upload context documents that persist across conversations, and ChatGPT's custom GPTs let you build an assistant with your context baked in. But even a simple shared folder of context documents you copy and paste works fine. The important thing is having the context written down and using it consistently.

Workflow 3: Email Automation with Human-in-the-Loop

Email is where AI delivers the fastest return for most marketing teams. The work is repetitive, follows patterns, and the cost of imperfection is low—you're going to review it before sending anyway. The question is just how much of the work AI does versus how much you do.

The answer isn't "AI does everything" or "AI does the first draft." It's more specific than that. AI handles the parts that follow patterns. Humans handle the parts that require judgment about people, timing, and strategy.

For our product launch, let's say you need a five-email nurture sequence for customers who haven't upgraded after the feature goes live. The old way: open a blank document, stare at it, write email one, write email two, realize email two should actually be email four, restructure everything, finally finish after a day of scattered work.

The AI way: you define the sequence structure and what each email should accomplish. Email one checks in and offers help getting started. Email two shares a quick win someone achieved with the feature. Email three addresses the most common objection you've heard from sales. Email four shares a customer story. Email five makes a time-limited offer. You give AI your context documents, explain the arc of the sequence, and ask it to draft all five.
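The sequence structure is the part you own, so it's worth writing down once and reusing. Here's a minimal Python sketch. A hypothetical `SEQUENCE` list holds each email's job, and a hypothetical `sequence_prompt` helper combines it with your pasted context into one drafting request. Two lines in the prompt are assumptions added to keep the human decisions with you: the instruction to leave a placeholder in the customer story email, and the request for several subject lines to choose from.

```python
# Hypothetical sequence definition: you decide each email's job; the AI drafts the copy.
SEQUENCE = [
    ("Check-in", "Check in and offer help getting started with the feature."),
    ("Quick win", "Share a quick win someone achieved with the feature."),
    ("Objection", "Address the most common objection our sales team hears."),
    ("Customer story", "Share a customer story. Leave a clear placeholder; we'll supply a real one."),
    ("Offer", "Make a time-limited offer."),
]


def sequence_prompt(context: str, goal: str, steps: list) -> str:
    """Turn pasted context plus the sequence arc into a single drafting request."""
    lines = [
        context,
        "",
        f"Draft a {len(steps)}-email nurture sequence. Goal: {goal}",
        "Follow this arc, one email per step:",
    ]
    for number, (name, purpose) in enumerate(steps, start=1):
        lines.append(f"Email {number} ({name}): {purpose}")
    # Ask for options, not answers: picking which subject lines to test stays a human call.
    lines.append("For each email, suggest three subject lines so we can choose which to test.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(sequence_prompt("[Paste brand voice and audience documents here.]",
                          "get customers who haven't upgraded to try the new feature",
                          SEQUENCE))
```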

What you get back won't be perfect. Some emails will be too long. Some will miss the mark on voice. The customer story email will need an actual customer story. But you're now editing and improving rather than creating from scratch. That's a different kind of work—faster and, honestly, more interesting. You're making judgments about what resonates rather than staring at a blank page.

The human-in-the-loop checkpoints matter here. AI drafts the sequence; you decide if the strategy makes sense. AI writes subject lines; you pick which ones to test. AI suggests send timing; you adjust based on what you know about this audience's inbox behavior. AI handles volume; you handle judgment.

The same pattern works for weekly newsletters, customer response templates, and re-engagement campaigns. Anywhere you're repeatedly writing similar emails for similar situations, AI can draft and you can decide.

Workflow 4: Content Production Pipeline

Most marketing teams use AI for content in the least efficient way possible. They ask AI to generate a complete draft from scratch, get something mediocre, and then heavily edit it. That's working against AI's strengths instead of with them.

AI is good at research, structuring, and first-pass drafting when given clear direction. Humans are good at strategic angle, voice refinement, and the specific insights that come from actually knowing your business. The teams getting value from AI for content have built pipelines that use each for what they're good at.

For our product launch, you probably need a blog post explaining the new feature. Here's how a pipeline approach would work.

You start by using AI to explore the topic—not to write, just to think. What are the common angles on inventory forecasting content? What questions does your audience typically have about this kind of feature? What's the conventional wisdom, and what might your feature challenge about it? This isn't your outline; it's input for your thinking. You spend 15 minutes with AI exploring possibilities, then you decide the angle. Maybe you land on "why most inventory forecasting fails in the first 90 days"—a problem-focused angle that positions your feature as the solution without being a product brochure.

Now you ask AI to suggest structures for that angle. Not one outline—three different ways to organize the argument. A chronological structure that walks through the 90-day failure pattern. A myth-busting structure that tackles misconceptions one by one. A case-study structure built around a specific customer scenario. You pick the one that feels right, or combine elements from two of them. Now you have your outline, and you made the strategic choice.

For the actual drafting, you work section by section rather than asking for a complete article. You give AI the context—overall angle, what came before, what comes after—and ask for a draft of that section hitting specific points. The AI is filling in your structure with your direction, not making up its own.

After the sections are drafted, you assemble and do a voice pass. This is where you fix transitions, add your actual perspective and unique insights, cut anything that sounds like it could be from anyone, and make it sound like you wrote it. AI got you 70% of the way there in a fraction of the time; you're spending your energy on the 30% that actually differentiates the content.

The math changes significantly. A 2,000-word article that used to take 6-8 hours—much of it stuck at a blank page—now takes 3-4 hours of active work. And because you're making decisions throughout rather than creating from nothing, the work is less draining.

Workflow 5: Marketing Reporting and Analysis

This is the most underused AI workflow for marketing teams, which is strange because it might be the most valuable. You're already sitting on more data than you can make sense of—campaign performance, website analytics, email metrics, social engagement. The problem isn't having data; it's having time to turn data into insight.

AI can help with the translation layer: taking raw numbers and turning them into plain-language summaries, patterns, and questions worth investigating.

Let's say your product launch has been live for two weeks and you need to report on how it's going. The old way: pull numbers from your email platform, your analytics tool, your CRM. Put them in a spreadsheet. Stare at them trying to figure out what story they tell. Write a summary. Wonder if you missed something. Spend two hours, end up with a document that says "things went okay."

The AI way: you export the same data, but instead of staring at it yourself, you paste it into AI and ask for help making sense of it. "Here's our performance data for the first two weeks of the product launch. Compare email engagement to our baseline campaigns. Look at the conversion path from announcement email to demo request to upgrade. Tell me the three most important things this data shows and one thing that seems off and worth investigating."

What you get back isn't the final report—it's a starting draft of interpretation. AI will spot that your demo-to-upgrade conversion is lower than expected and suggest possible explanations. Some of those explanations will be wrong because AI doesn't know that your sales team was at a conference last week. But now you're adding context to an interpretation rather than building an interpretation from scratch.
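The conversion-path question is easier to check, for you and for the AI, if the export already includes stage-to-stage rates rather than only raw counts. Here's a minimal Python sketch with hypothetical helpers `funnel_rates` and `compare_funnels`. It assumes the launch and baseline funnels list the same stages in the same order. The counts are invented for illustration and are not benchmarks.

```python
# Hypothetical exported counts for each step of the conversion path (not real data).
LAUNCH = [("Announcement email", 2000), ("Demo request", 240), ("Upgrade", 24)]
BASELINE = [("Announcement email", 2000), ("Demo request", 200), ("Upgrade", 40)]


def funnel_rates(counts: list) -> list:
    """Percentage of each stage that reached the next stage, rounded to one decimal."""
    rates = []
    for (prev_name, prev_n), (name, n) in zip(counts, counts[1:]):
        rate = 100 * n / prev_n if prev_n else 0.0  # avoid dividing by an empty stage
        rates.append((f"{prev_name} -> {name}", round(rate, 1)))
    return rates


def compare_funnels(current: list, baseline: list) -> str:
    """One line per step, current rate beside baseline, ready to paste into a prompt.

    Assumes both funnels have the same stages in the same order.
    """
    lines = []
    for (step, rate), (_, base_rate) in zip(funnel_rates(current), funnel_rates(baseline)):
        lines.append(f"{step}: {rate}% (baseline {base_rate}%)")
    return "\n".join(lines)


if __name__ == "__main__":
    print(compare_funnels(LAUNCH, BASELINE))
```

In this invented example, the demo-to-upgrade step stands out (10.0% against a 20.0% baseline). That's the kind of gap worth flagging, and the point where you bring the context the numbers can't show.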

This works for weekly performance summaries, campaign post-mortems, and anomaly investigation. When something looks weird in your metrics (say, email open rates dropped 6 percentage points over three weeks), AI can help you structure the investigation. It won't know the answer, but it can help you generate hypotheses and think through what data would confirm or rule out each one.

The human judgment piece here is knowing what questions matter and what context the data can't show. AI sees the numbers. You know that the campaign launched during a holiday weekend, that your main competitor announced a similar feature, that the sales team changed their follow-up process mid-month. You're combining AI's ability to process and summarize with your ability to interpret what it means for your specific business.

Where to Start

You don't need to implement all five workflows at once. Pick based on where you're currently losing the most time—or where imperfect AI outputs would have the lowest cost.

If your team is getting inconsistent results from AI and people are frustrated that it "doesn't work," start with prompting and context building. These are foundational. The investment is a few hours to create shared prompts and context documents; the payoff is better outputs from everything else you try.

If email is eating significant hours every week—newsletters, sequences, customer responses—start there. Email has a forgiving feedback loop. A draft that's 80% right is easy to fix, and you'll see time savings immediately.

If content is your bottleneck, the pipeline approach is worth building. It requires more setup than the others—you're creating a new way of working, not just using AI for a task—but the time savings compound over months.

If you're drowning in data and starving for insights, try the reporting workflow on your next weekly summary. Export your numbers, paste them into AI, ask for a plain-language interpretation. See if that 20-minute experiment gives you something useful.

The teams getting real value from AI didn't transform overnight. They picked one workflow, made it real, and built from there. Six months later, they'd compounded small improvements into a genuinely different way of working.

Your competitors are still dabbling. They open AI when they're stuck, get disappointed, and go back to the old way. You can do something different. Pick one workflow, make it part of how your team actually operates, and build from there.