Five questions every organization needs to answer—whether you have 50 employees or 5,000

Nobody wants to read about governance.

I don't blame you. The word itself sounds like something that belongs in a compliance binder—the kind of document that gets written once, filed somewhere, and never touched again. Governance conjures images of legal reviews, committee meetings, and policies that exist primarily to cover someone's backside.

So let's get the awkward truth out of the way: AI governance is not exciting. It will never trend on LinkedIn. No one will give a TED talk about it that goes viral.

But here's what's also true: the absence of AI governance is quietly becoming one of the most expensive problems in business.

And not for the reasons you might think.

The Three Problems No One's Talking About

When most people hear "AI governance," they assume it's about compliance—staying on the right side of regulations, avoiding lawsuits, checking boxes. That's part of it. But the real cost of missing governance shows up in three places:

Risk You Can't See

Your employees are already using AI. The question is whether you know how, where, and with what data.

A December 2025 survey of over 600 IT leaders found that 61 percent of organizations discovered unauthorized AI tools in their environments—but only 26 percent have any way to monitor usage. Nearly 70 percent lack a formal system to track AI adoption at all. And when asked how effective their shadow AI risk management is? Only 13 percent said "highly effective."

This isn't about employees being reckless. They're trying to work faster, meet deadlines, and solve problems. But without clear guidelines, every individual makes their own judgment calls about what's acceptable. Some of those calls will be fine. Some won't. You just don't know which is which.

The data exposure is real and accelerating. Research from January 2026 found that the average company now experiences 223 incidents per month of users sending sensitive data to AI apps—double the previous year. Between 2023 and 2024, corporate data pasted or uploaded into AI tools rose by 485 percent.

If you want to understand the full scope of what happens when employees use personal AI accounts for work—the intellectual property exposure, compliance violations, and strategic risks—I've written a detailed examination of the hidden liability of personal AI accounts in business. The short version: every conversation with a personal AI account is a potential data leak you can't monitor, can't audit, and can't control.

Quality You Can't Trust

AI can produce impressive outputs in seconds. It can also hallucinate facts, invent citations, and generate content that sounds authoritative but is completely wrong.

When an employee uses AI to draft a client proposal, summarize a legal document, or analyze financial data—who verifies the output before it goes out the door?

Without governance, the answer is often "no one" or "it depends on who's doing the work." That inconsistency is a liability waiting to surface.

Air Canada learned this in 2024, when British Columbia's Civil Resolution Tribunal ruled that the airline was responsible for incorrect information its AI chatbot gave a customer, even though no human had approved what it said. The tribunal rejected Air Canada's argument that the chatbot was a "separate legal entity" and held the company accountable for the AI's erroneous advice.

The AI Incident Database documents $2.9 billion in settlements and fines related to AI failures since 2020, and the trend is accelerating. These aren't theoretical risks—they're documented business losses from organizations that deployed AI without adequate oversight.

More recently, Deloitte Australia agreed to partially refund its fee for a report prepared for the Australian government after AI-generated content in it was found to contain fabricated citations and other errors that proper quality controls should have caught. The incident exposed how thin quality controls can be when AI is involved in professional work.

The quality problem compounds because AI confidence doesn't correlate with AI accuracy. A model will state fabricated information with the same assured tone it uses for verified facts. Without governance structures that mandate human verification for consequential outputs, organizations are essentially trusting algorithms to know their own limitations—which they demonstrably don't.

Opportunity You Can't Capture

This is the problem that gets overlooked. Missing governance doesn't just create risk—it slows you down.

When employees don't know what's allowed, they hesitate. When leadership can't articulate how AI should be used, teams either avoid it entirely or adopt it inconsistently. When there's no shared understanding of quality standards, people duplicate effort second-guessing each other's AI-assisted work.

The research tells the story: 72 percent of companies are now using AI in at least one function, but only about 1 percent have successfully scaled AI beyond pilot phases. The gap isn't usually technology. It's organizational clarity.

A 2024 Gartner survey found that while 80 percent of large organizations claim to have AI governance initiatives, fewer than half can demonstrate measurable maturity. Most lack a structured way to connect policies with practice.

Here's the counterintuitive truth: good governance doesn't slow AI adoption. It accelerates it—by creating the boundaries that let people move with confidence.

When employees know exactly what tools are approved, what data they can use, and what verification is required, they stop hesitating. When teams have shared standards for AI-assisted work, they stop duplicating effort. When leadership has clear accountability structures, they can greenlight initiatives faster because they understand the risk parameters.

The organizations seeing real value from AI aren't the ones moving fastest with the least oversight. They're the ones who built clarity first.


What "Governance" Actually Means (Without the Jargon)

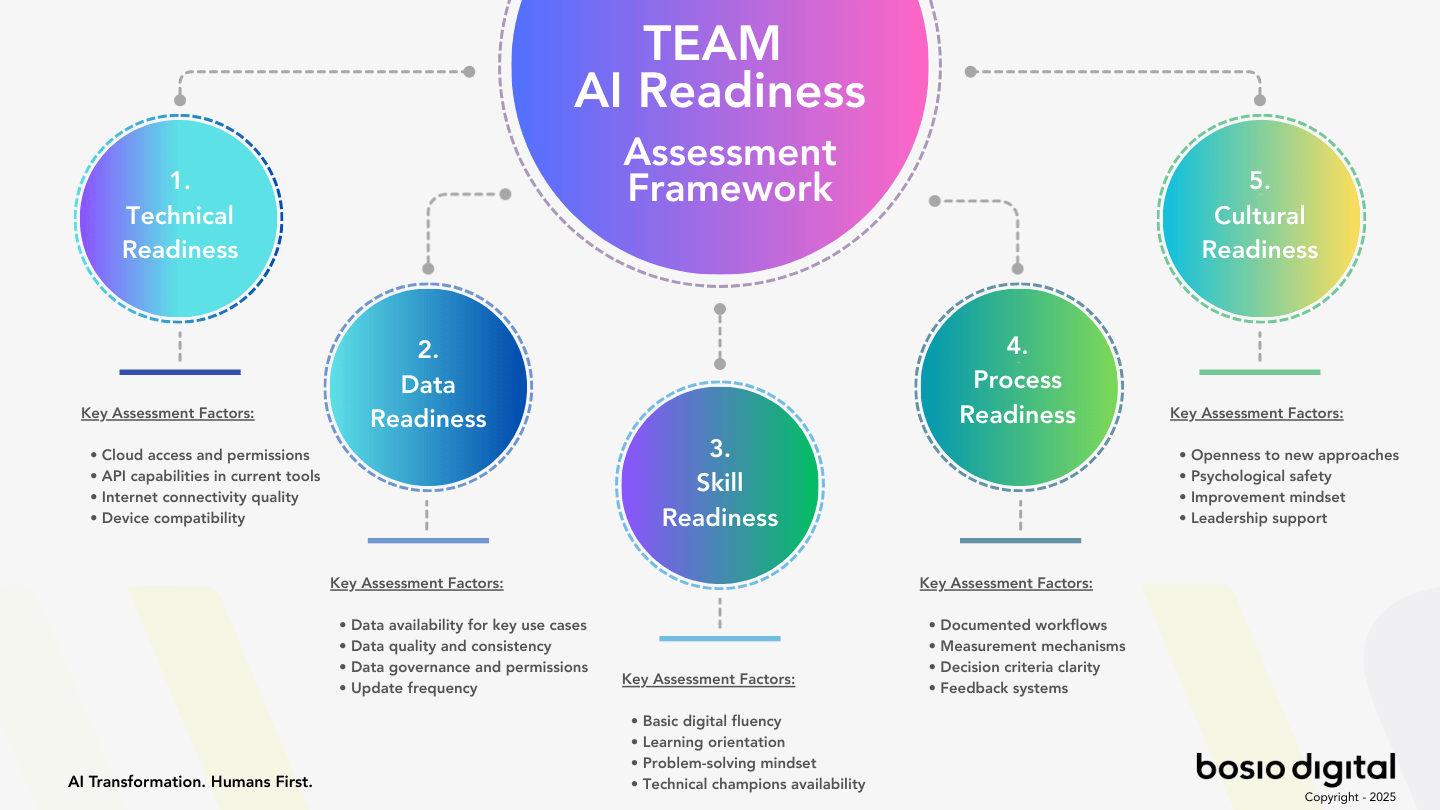

Here's the thing: strip away the corporate language and AI governance is actually just your organization's answers to five questions.

1. Who can use what AI tools for what purposes?

Which tools are approved? Which are prohibited? Are there different rules for different roles or data types? Can marketing use generative AI for content creation? Can finance use it for analysis? Can HR use it for candidate screening?

2. What data can go in, and what's off-limits?

Can employees paste customer information into AI tools? What about financial data? Proprietary strategies? Personnel records? Where's the line between acceptable and prohibited, and how do people know where that line is?

3. Who's accountable when something goes wrong?

If an AI-generated client deliverable contains errors, who owns that? If sensitive data ends up in an unauthorized AI tool, who's responsible? If an AI system makes a biased recommendation that leads to a discrimination claim, who answers for it?

4. How do we verify quality before AI outputs reach clients or inform decisions?

What review processes exist for AI-assisted work? Are there different standards for internal versus external outputs? Who's qualified to verify AI outputs in different domains? What documentation is required?

5. How do we actually get people to follow these guidelines?

This is the question most governance frameworks skip—and it's the one that determines whether everything else matters. You can write perfect policies, but if employees don't follow them, you have theater instead of governance.

That's it. If you have clear answers to these five questions—and people actually follow them—you have AI governance. If you don't, you have some combination of hidden risk, unreliable quality, and unrealized potential.

The word "governance" makes this sound complicated. It isn't. What's complicated is operating without it—and not knowing where the problems are until they surface.

Why This Applies Whether You Have 50 Employees or 5,000

There's a misconception that governance is an enterprise problem. That small and mid-sized businesses can move fast and worry about structure later.

The opposite is true.

Large enterprises have dedicated compliance teams, legal departments, and the resources to absorb mistakes. A mid-market company or a lean team doesn't have that buffer. One data exposure incident, one client deliverable with fabricated information, one regulatory misstep—the impact is proportionally much larger.

The statistics bear this out. In the EU, 41.2 percent of large enterprises used AI in 2024, versus only 11.2 percent of small and 21.0 percent of medium-sized firms. But adoption is accelerating rapidly across all size segments—a US survey found 55 percent of small businesses used AI in 2025, up from 39 percent in 2024. Usage was highest at 68 percent among firms with 10 to 100 employees.

What this means: smaller organizations are adopting AI at rates approaching larger enterprises, but without the governance infrastructure that larger organizations are building. The governance gap is widest precisely where the consequences of getting it wrong are most severe.

But here's what smaller organizations do have: the ability to move quickly, establish norms before bad habits form, and build governance into culture rather than bolting it on after the fact.

Research shows that SMBs can apply the same governance principles as large enterprises at smaller scale—creating what some call a "mini-studio" with a small team responsible for AI adoption, oversight, and scaling. For many SMBs, this doesn't require new hires; it requires clarity about who owns these questions and time allocated to answer them.

The five questions are the same regardless of company size. The answers scale up or down depending on your context. A 50-person company doesn't need a governance committee and a 30-page policy document. But it does need clear answers that everyone understands and follows.

The Regulatory Landscape Is Shifting Faster Than You Think

Even if you're not motivated by internal risk management, the external regulatory environment is forcing the conversation.

The EU AI Act entered into force in August 2024, with obligations phasing in from February 2025 through 2027. It introduces risk stratification, conformity assessments, and compliance requirements for AI systems in high-impact areas including healthcare, employment, and financial services. Organizations operating in or selling to EU markets face potential fines of up to €35 million or 7 percent of global annual turnover, whichever is higher, for the most serious violations.
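The headline figure is a ceiling, not a fixed penalty, and it works differently depending on company size. As a simplified sketch, assuming the top penalty tier (the Act's prohibited-practice rules) and Article 99's provision that SMEs, including start-ups, face whichever of the two amounts is lower, the ceiling can be computed like this. The function name is hypothetical, and the lower penalty tiers with smaller caps are ignored.

```python
def max_fine_eur(global_annual_turnover_eur: float, is_sme: bool = False) -> float:
    """Ceiling of the top EU AI Act penalty tier (simplified sketch).

    Assumes the prohibited-practices tier: EUR 35 million or 7% of worldwide
    annual turnover, whichever is higher; for SMEs, whichever is lower.
    """
    fixed_cap = 35_000_000.0
    # Multiply before dividing so round turnover figures stay exact
    turnover_cap = global_annual_turnover_eur * 7 / 100
    if is_sme:
        return min(fixed_cap, turnover_cap)
    return max(fixed_cap, turnover_cap)


# EUR 1 billion turnover: 7% (EUR 70 million) exceeds the fixed cap
print(max_fine_eur(1_000_000_000))
# SME with EUR 10 million turnover: the lower figure, 7% (EUR 700,000), applies
print(max_fine_eur(10_000_000, is_sme=True))
```

For a smaller firm the ceiling scales with turnover rather than defaulting to €35 million, but 7 percent of revenue is still a serious hit for a company without a compliance buffer.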

In the United States, the regulatory landscape has shifted dramatically. The January 2025 executive order "Removing Barriers to American Leadership in Artificial Intelligence" revoked the previous administration's AI safety framework, but this didn't eliminate compliance obligations. It shifted much of the action to the state level.

The result is a patchwork of state requirements. Colorado's AI Act requires impact assessments for high-risk AI systems. California has enacted a series of AI-specific laws. Illinois, Texas, and other states have adopted or proposed their own requirements. For businesses operating across state lines, the complexity has increased rather than decreased.

By 2025, nearly 72 percent of S&P 500 companies flagged AI as a material risk in their disclosures, up from just 12 percent two years prior. This isn't paranoia—it's recognition that AI governance has become a board-level concern.

Fortune 100 companies citing AI risk as part of board oversight responsibilities tripled in one year, from 16 percent to 48 percent. But here's the gap: while 62 percent of boards now hold regular AI discussions, only 27 percent have formally added AI governance to their committee charters.

The direction is clear even if the specifics vary by jurisdiction: organizations will increasingly be expected to demonstrate how they govern AI use. Those without frameworks will find themselves scrambling to build them under regulatory pressure rather than strategic choice.


Why Policies Without Culture Fail

Here's where most governance conversations go wrong: they focus entirely on what policies to write and skip entirely over how to make those policies stick.

The 2024 IAPP Governance Survey found that only 28 percent of organizations have formally defined oversight roles for AI governance. Most companies still distribute AI governance tasks across compliance, IT, and legal teams without a unified structure. The result: everyone assumes someone else is handling it.

But the deeper problem isn't organizational structure—it's culture.

IBM's 2025 Cost of a Data Breach report revealed that 63 percent of breached organizations either don't have an AI governance policy or are still developing one. Of those that do have policies, only 34 percent perform regular audits for unsanctioned AI.

One in five organizations reported a breach due to shadow AI. Only 37 percent have policies to detect it. Organizations with high shadow AI usage observed $670,000 higher breach costs on average.

The pattern is clear: policies exist on paper, but behavior continues unchanged.

This isn't because employees are defiant. It's because prohibition-only approaches don't work when the prohibited tools are genuinely useful and freely available.

Research consistently shows that banning AI tools simply drives usage underground. When employees face pressure to produce and official channels are slow, unclear, or nonexistent, they'll use whatever tools help them succeed. The tools exist. They're free. They're effective. Telling people not to use them without providing alternatives isn't governance—it's wishful thinking.

Effective governance requires understanding why people use unauthorized AI in the first place:

- Productivity pressure: Employees adopt AI to move faster when official processes are too slow

- Accessibility: Many AI tools require no setup, no approval, and deliver instant results

- Innovation culture: Organizations encourage experimentation but fail to provide boundaries

- Absence of alternatives: When companies don't provide sanctioned AI tools, employees find their own

The solution isn't stricter prohibitions. It's creating conditions where following governance is easier than circumventing it.

Building Governance That Actually Works

The most effective AI governance programs share common characteristics that distinguish them from policy theater:

They Lead With Enablement, Not Restriction

The framing matters. "Here's how you can use AI safely and effectively" generates different behavior than "Here are all the ways you're prohibited from using AI."

Organizations that position governance as enabling rather than restricting see higher adoption of sanctioned tools and lower incidence of shadow AI. Employees aren't looking to circumvent rules—they're looking to do their jobs well. When governance helps them do that, compliance becomes natural rather than forced.

They Provide Sanctioned Alternatives

You cannot govern AI usage if you don't provide legitimate AI tools. Telling employees they can't use ChatGPT for work while offering no alternative isn't governance—it's denial.

Enterprise AI platforms exist across the capability spectrum:

- Microsoft 365 Copilot integrates directly into productivity tools most organizations already use, with enterprise data protection built in

- Google Workspace with Gemini offers similar integration for Google-centric organizations

- ChatGPT Enterprise and Team provide OpenAI's capabilities with business-appropriate controls and data handling

- Claude for Enterprise offers Anthropic's models with SSO, audit logs, and enterprise security features

For organizations with stricter requirements (highly regulated industries, sensitive intellectual property, or data sovereignty mandates), on-premises deployment of open-weight models like Llama or Mistral provides maximum control at the cost of more technical complexity.

The specific platform matters less than the principle: employees need legitimate tools that are as convenient as personal accounts but designed for business use.

They Create Psychological Safety Around AI Use

One of the most important findings from shadow AI research: employees are often willing to disclose their AI usage if disclosure is treated as learning rather than punishment.

Heavy restrictions rarely reduce this risk; they mostly make oversight harder. The goal is not to suppress experimentation but to formalize it, creating guardrails that enable safe autonomy rather than blanket prohibition.

Organizations with the lowest shadow AI incidence share a common approach: they make it easy for employees to ask questions, request new tools, and report concerns without fear of punishment for past behavior.

This requires explicit communication from leadership that acknowledges AI use is happening, frames governance as protection rather than punishment, and creates clear channels for questions and tool requests.

They Build Verification Into Workflows

Governance isn't just about what tools people use—it's about what happens to AI outputs before they reach clients or inform decisions.

Effective organizations build verification checkpoints into their processes:

- Tiered review requirements based on output sensitivity (internal documents may need lighter review than client deliverables)

- Domain-specific verification (legal AI outputs reviewed by legal experts, financial outputs by finance experts)

- Documentation requirements that create audit trails for consequential AI-assisted decisions

- Clear escalation paths for uncertain cases
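To make these checkpoints concrete, here is a minimal Python sketch of a routing rule a team might write down. The categories, reviewer counts, and role names are hypothetical placeholders, not a recommended standard; the point is that each checkpoint in the list becomes an explicit rule rather than a judgment call left to whoever happens to be doing the work.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Audience(Enum):
    INTERNAL = "internal"
    CLIENT = "client"


class Domain(Enum):
    GENERAL = "general"
    LEGAL = "legal"
    FINANCE = "finance"


@dataclass
class ReviewRequirement:
    reviewers: int                     # how many humans must sign off
    reviewer_role: str                 # who is qualified to verify
    audit_log: bool                    # whether the review must be documented
    escalate_to: Optional[str] = None  # who decides uncertain cases


def review_requirement(audience: Audience, domain: Domain,
                       consequential: bool, uncertain: bool = False) -> ReviewRequirement:
    # Tiered review: client deliverables get more scrutiny than internal drafts
    reviewers = 2 if audience is Audience.CLIENT else 1
    # Domain-specific verification: legal and financial outputs go to experts
    role = {Domain.LEGAL: "legal", Domain.FINANCE: "finance"}.get(domain, "peer")
    # Documentation: keep an audit trail for client-facing or consequential work
    audit_log = consequential or audience is Audience.CLIENT
    # Escalation path: uncertain cases go to the (hypothetical) governance owner
    escalate_to = "governance owner" if uncertain else None
    return ReviewRequirement(reviewers, role, audit_log, escalate_to)


print(review_requirement(Audience.CLIENT, Domain.LEGAL, consequential=False))
```

The thresholds will differ by organization; what matters is that anyone can look up what a given output needs before it leaves the building.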

The Air Canada case illustrates what happens without these checkpoints: AI provided incorrect information to a customer, no human verified it, and the organization was held liable. Every AI touchpoint with customers, clients, or consequential decisions needs a verification protocol.

They Measure What Matters

You can't improve what you don't measure. Effective governance includes metrics that track both compliance and value:

Compliance metrics:

- Adoption rate of sanctioned AI tools

- Incidents of unauthorized AI usage detected

- Policy acknowledgment and training completion rates

- Audit findings and remediation timelines

Value metrics:

- Time savings from AI-assisted workflows

- Quality improvements in AI-augmented outputs

- Employee satisfaction with AI tools and governance

- Use cases emerging that warrant formalization
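Compliance metrics are usually the easiest place to start, because the raw data often already exists in sign-on or network logs. The sketch below assumes a hypothetical export of `(user, tool)` usage records and a hypothetical approved-tool list; value metrics such as time savings need their own data and are not covered here.

```python
from collections import Counter

# Hypothetical approved-tool list; substitute your own
APPROVED_TOOLS = {"copilot", "chatgpt-enterprise"}


def governance_metrics(events, trained_users, headcount):
    """Compute basic compliance metrics from AI usage records.

    events: list of (user, tool) pairs in a hypothetical export format.
    headcount: number of employees in scope (assumed to be > 0).
    """
    sanctioned = [(u, t) for u, t in events if t in APPROVED_TOOLS]
    unsanctioned = [(u, t) for u, t in events if t not in APPROVED_TOOLS]
    return {
        # Share of employees who have used at least one approved tool
        "adoption_rate": len({u for u, _ in sanctioned}) / headcount,
        # Share of all observed AI usage that went through approved tools
        "sanctioned_share": len(sanctioned) / len(events) if events else 0.0,
        # Unauthorized usage broken down by tool
        "unsanctioned_by_tool": Counter(t for _, t in unsanctioned),
        # Training completion rate
        "training_completion": len(trained_users) / headcount,
    }


events = [("ana", "copilot"), ("ben", "copilot"),
          ("ben", "personal-chatbot"), ("cy", "chatgpt-enterprise")]
metrics = governance_metrics(events, trained_users={"ana", "ben"}, headcount=4)
print(metrics["adoption_rate"], metrics["unsanctioned_by_tool"])
```

The per-tool breakdown is arguably more useful than the raw count: an unsanctioned tool that keeps showing up signals an unmet need, which makes it a candidate for evaluation and formalization rather than only a violation to report.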

The measurement discipline serves two purposes: it identifies gaps in governance before they become incidents, and it demonstrates ROI that justifies continued investment in AI capabilities.

A Practical Framework: Governance By Company Size

The five questions apply universally, but implementation scales to context. Here's what governance looks like at different organizational sizes:

Teams and Departments (10-50 people)

Even without organizational policy, teams can establish their own governance:

Minimum viable governance:

- Written list of approved AI tools for team use

- Clear guidance on what data categories are off-limits

- Designated point person for AI questions and tool requests

- Lightweight review process for AI-assisted client deliverables

- Monthly team discussion of what's working and what isn't

Time investment: 2-4 hours to establish, 1-2 hours monthly to maintain

Reality check: If your organization doesn't have AI governance, your team can still have it. Don't wait for enterprise policy that may never come.

Small and Mid-Market Companies (50-500 people)

At this scale, governance needs more structure but can still be lightweight:

Core components:

- Formal AI acceptable use policy (1-3 pages, not 30)

- Approved tool list with brief rationale for each approval

- Data classification guide specific to AI use

- Role-based guidance (different rules for HR than for marketing)

- Incident response process (what happens when something goes wrong)

- Quarterly review and update cycle
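The approved tool list, data classification guide, and role-based guidance can be compact enough to express as a single lookup. The following Python sketch is illustrative only: the four classification levels, tool names, and the HR restriction are hypothetical examples, not recommendations.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical four-level classification; higher means more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2   # e.g. financial data, proprietary strategy
    RESTRICTED = 3     # e.g. customer information, personnel records


# Approved tools mapped to the most sensitive data class each may receive
APPROVED_TOOLS = {
    "enterprise-assistant": DataClass.CONFIDENTIAL,
    "public-image-generator": DataClass.PUBLIC,
}

# Role-based guidance: purposes a given role may not use AI for
ROLE_RESTRICTIONS = {
    "hr": {"candidate_screening"},
}


def check_use(tool: str, data: DataClass, role: str, purpose: str) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed AI use."""
    ceiling = APPROVED_TOOLS.get(tool)
    if ceiling is None:
        return False, f"{tool} is not on the approved tool list"
    if data > ceiling:
        return False, f"{data.name} data may not go into {tool}"
    if purpose in ROLE_RESTRICTIONS.get(role, set()):
        return False, f"{role} may not use AI for {purpose}"
    return True, "allowed"


print(check_use("enterprise-assistant", DataClass.RESTRICTED, "finance", "analysis"))
```

Whether this lives in code, a spreadsheet, or a one-page table matters less than the discipline it forces: every combination of tool, data type, and role gets an answer before an employee has to guess.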

Ownership: Designate a governance owner—this doesn't need to be a new hire. It can be IT, operations, or compliance leadership with AI governance added to their responsibilities and time allocated accordingly.

Training: Annual policy training plus onboarding integration for new employees. Doesn't need to be elaborate—30-60 minutes covering what tools are approved, what data is off-limits, and who to ask when uncertain.

Time investment: 20-40 hours to establish initially, 5-10 hours monthly ongoing

Larger Organizations (500+ people)

At enterprise scale, governance requires dedicated structure:

Organizational components:

- AI governance committee with cross-functional representation (legal, IT, compliance, operations, business units)

- Dedicated governance function or clear assignment of responsibilities across existing functions

- Formal tool evaluation and approval process with documented criteria

- Comprehensive policy framework covering use, data, quality, and accountability

- Regular audit program with documented findings and remediation tracking

- Integration with existing risk management and compliance programs

Technical controls:

- Single sign-on integration for approved AI tools

- Data loss prevention systems configured for AI-specific risks

- Usage monitoring and analytics across AI platforms

- Access controls aligned with data classification

- Audit logging for compliance and incident response

Training and enablement:

- Role-based training programs with different depth for different functions

- AI champion program to drive adoption and surface issues

- Center of excellence or shared services for AI best practices

- Regular communication cadence keeping governance current

Metrics and reporting:

- Dashboard tracking adoption, compliance, and value metrics

- Regular reporting to executive leadership and board

- Benchmarking against industry standards and regulatory requirements

Time investment: Significant ongoing investment, but scaled to risk exposure and AI ambitions

The Human Element: Why Culture Beats Policy

Everything I've described so far is necessary but not sufficient. You can have perfect policies, sophisticated tools, and comprehensive training—and still have governance failure if the culture doesn't support it.

This is where my work in organizational change intersects with AI governance. After 25 years in digital transformation and an equal amount of time in mindfulness practice and human development work, I've learned that sustainable change requires addressing both the structural and the human dimensions.

AI governance succeeds or fails based on human factors:

Fear versus trust. When employees fear punishment for past AI use, they hide current AI use. When they trust that governance exists to help them, they become allies in making it work. The difference is primarily communication and leadership tone.

Clarity versus confusion. When policies are clear, specific, and accessible, people follow them. When policies are vague, buried in documentation no one reads, or constantly changing, people make their own rules.

Enablement versus restriction. When governance provides better tools and clearer paths, people embrace it. When governance only says "no" without offering alternatives, people circumvent it.

Accountability versus blame. When something goes wrong, organizations that focus on learning and improvement build stronger governance. Organizations that focus on finding someone to blame drive problems underground.

The pattern I see consistently: organizations that approach AI governance as a cultural challenge rather than purely a policy challenge achieve better outcomes with less friction.

This doesn't mean ignoring the technical and procedural dimensions—those matter enormously. It means recognizing that policies are implemented by humans, and humans respond to culture more than they respond to documentation.

Getting Started: First Steps This Week

If your organization doesn't have AI governance—or has governance that exists on paper but not in practice—here are concrete first steps:

This week:

- Acknowledge the current state. Survey your team or organization (even informally) about AI tool usage. You can't govern what you don't understand.

- Answer the five questions for yourself. Even if you can't set organizational policy, get clear on your own answers. What tools are you using? What data do you put in them? Who would you tell if something went wrong?

- Identify the obvious gaps. Based on what you learn, what are the biggest risks? Unauthorized tools? Sensitive data? No quality verification? Regulatory exposure?

This month:

- Draft a minimum viable policy. Start with one page answering the five questions for your context. Perfect is the enemy of good—a simple policy followed is better than a comprehensive policy ignored.

- Designate ownership. Someone needs to be responsible for AI governance. Even if it's a partial assignment added to existing responsibilities, make it explicit.

- Communicate the change. Tell your team or organization that AI governance matters, why it matters, and what specifically is changing. Frame it as enablement.

This quarter:

- Evaluate sanctioned tools. If you're asking people not to use personal accounts, provide enterprise alternatives. Evaluate options, make decisions, and deploy.

- Build verification into workflows. Identify where AI outputs need human review before going to clients or informing decisions. Establish those checkpoints.

- Measure baseline and progress. Track adoption of sanctioned tools, awareness of policies, and any incidents. You can't improve what you don't measure.

The Uncomfortable Truth

Here's what I've observed working with organizations on AI transformation: the companies that succeed with AI aren't the ones that move fastest with the least governance. They're the ones that build clarity before they build capability.

Governance feels like it slows things down, and in the short term it does: if you want governance to work, you can't just tell everyone to use whatever AI tools they want and figure it out later. But in the medium and long term, governance accelerates everything else.

- Teams move faster when they know what's allowed

- Quality improves when verification is systematic rather than ad hoc

- Risk decreases when you can see and manage AI usage rather than hoping for the best

- Adoption increases when employees trust that using AI won't get them in trouble

The organizations that skip governance in favor of speed almost always pay for it later—in incidents, in remediation, in rebuilding trust, in regulatory response. The cost of building governance upfront is a fraction of the cost of building it after something goes wrong.

AI governance is genuinely unsexy. It will never be the exciting part of AI transformation. But it's increasingly the difference between AI transformation that creates sustainable value and AI adoption that creates hidden liability.

The question isn't whether to address governance. The question is whether to address it now, strategically and on your terms—or later, reactively and under pressure.

I know which one I'd choose.

Sascha Bosio is co-founder of bosio.digital, an AI transformation consultancy that helps mid-market companies adopt AI through a "Humans First" philosophy. With 25+ years in digital transformation and equal experience in mindfulness practice and human development, Sascha brings a unique perspective to the organizational challenges of AI adoption. For more on building AI-ready organizations, visit bosio.digital.

Related Reading

- The Hidden Liability of Personal AI Accounts in Business — A deep dive into the specific risks of shadow AI through personal accounts

- The Blueprint for AI-Ready Organizations — What separates the 5% of AI initiatives that succeed from the 95% that stall

- AI Transformation. Humans First. The Manifesto — The philosophy behind human-centered AI adoption